Table of contents

- Overview:

- Skills measured

- I. Module 1 Discover data analysis

- II. Module 2 Get started building with Power BI

- III. Module 3 Get data in Power BI

- 1. Introduction

- 2. Get data from files

- 3. Get data from relational data sources

- 4. Create dynamic reports with parameters

- 5. Create dynamic reports for multiple values

- 6. Get data from a NoSQL database

- 7. Get data from online services

- 8. Select a storage mode

- 9. Get data from Azure Analysis Services

- 10. Fix performance issues

- 11. Resolve data import errors

- 12. Exercise - Prepare data in Power BI Desktop

- 13. Check your knowledge

- 14. Summary

- Learning objectives

- IV. Module 4 Clean, transform, and load data in Power BI

- 1. Introduction

- 2. Shape the initial data

- 3. Simplify the data structure

- 4. Evaluate and change column data types

- 5. Combine multiple tables into a single table

- 6. Profile data in Power BI

- 7. Use Advanced Editor to modify M code

- 8. Exercise - Load data in Power BI Desktop

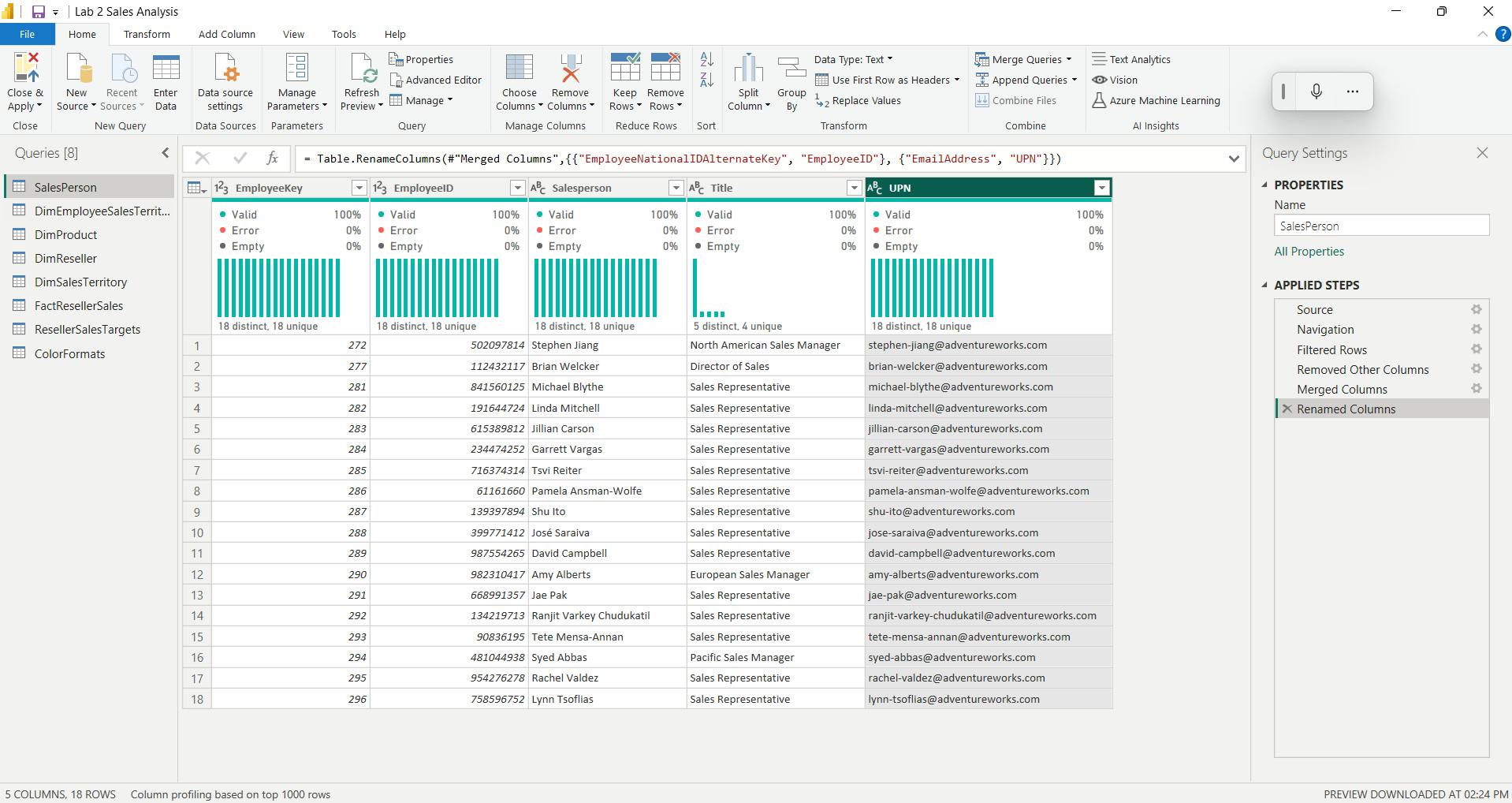

- i. Configure the Salesperson query

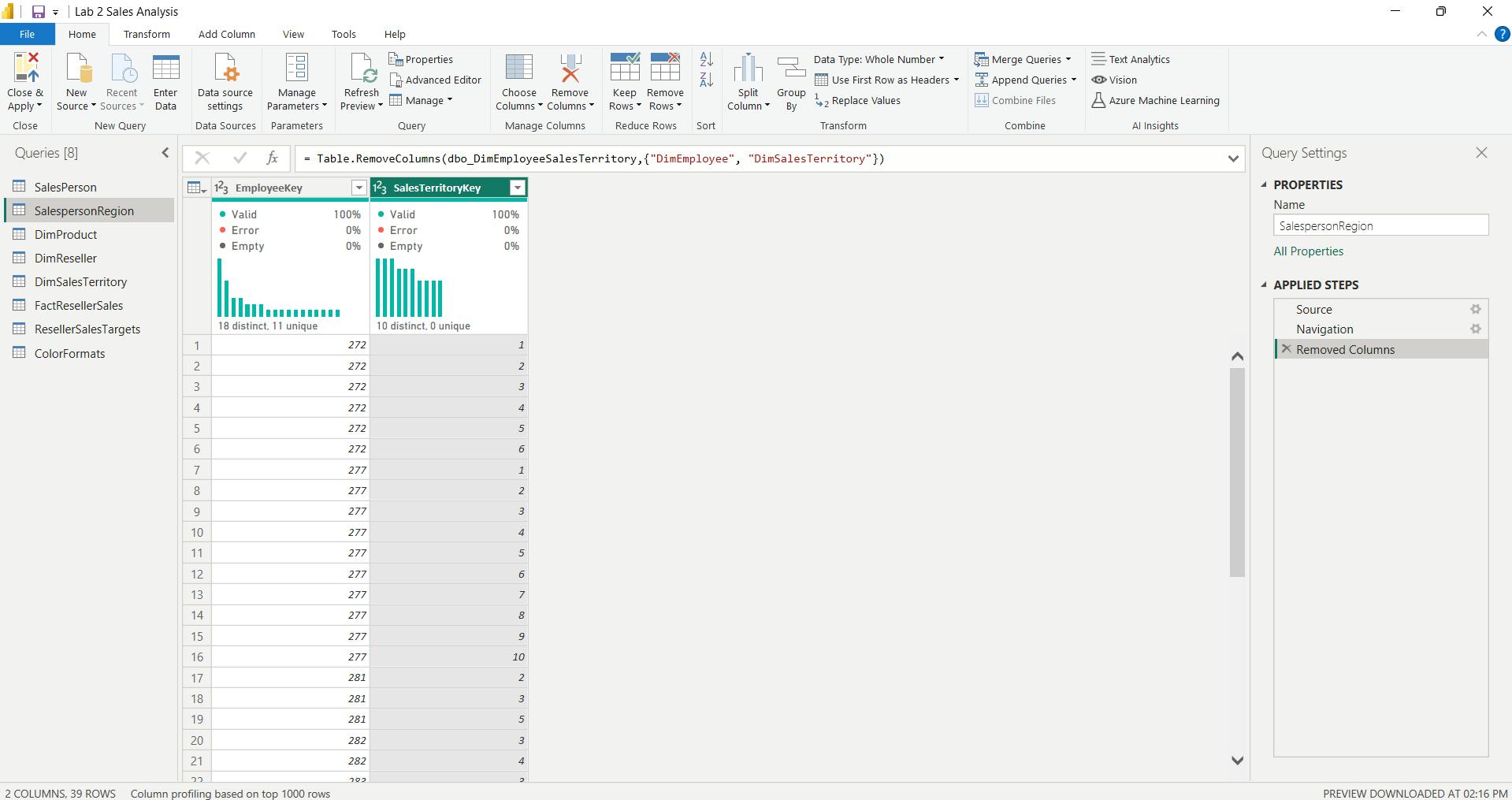

- ii. Configure the SalespersonRegion query

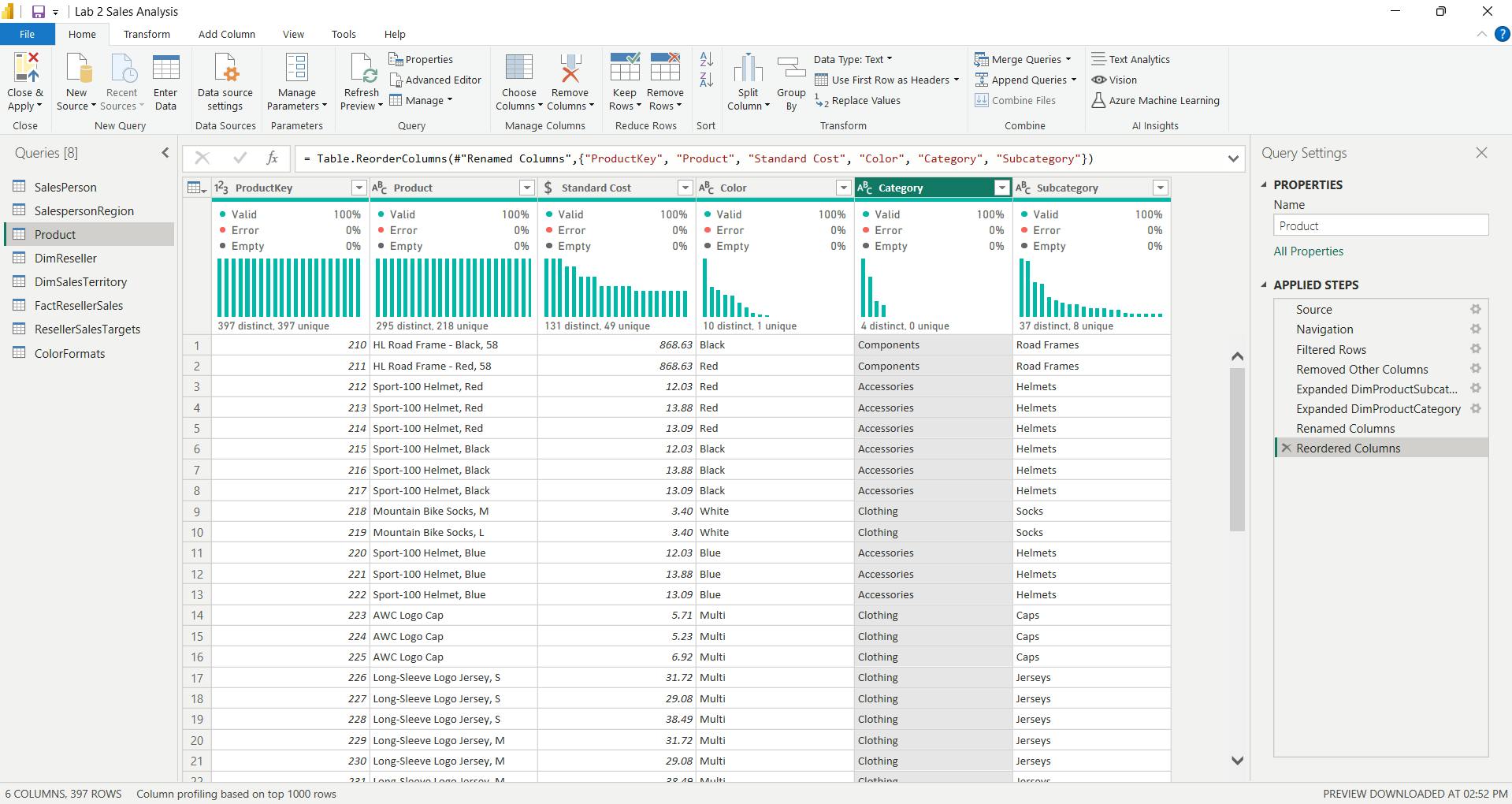

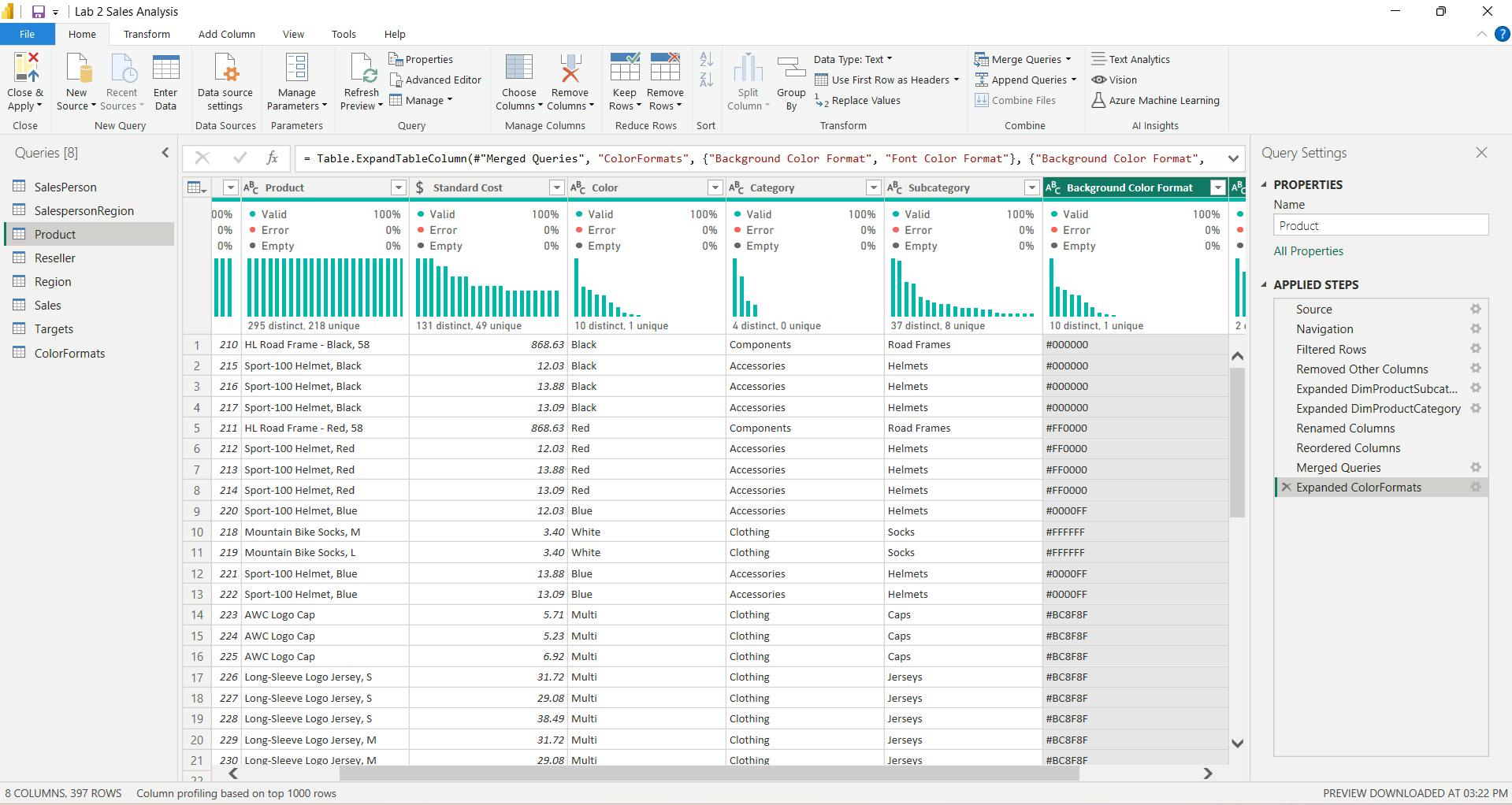

- iii. Configure the Product query

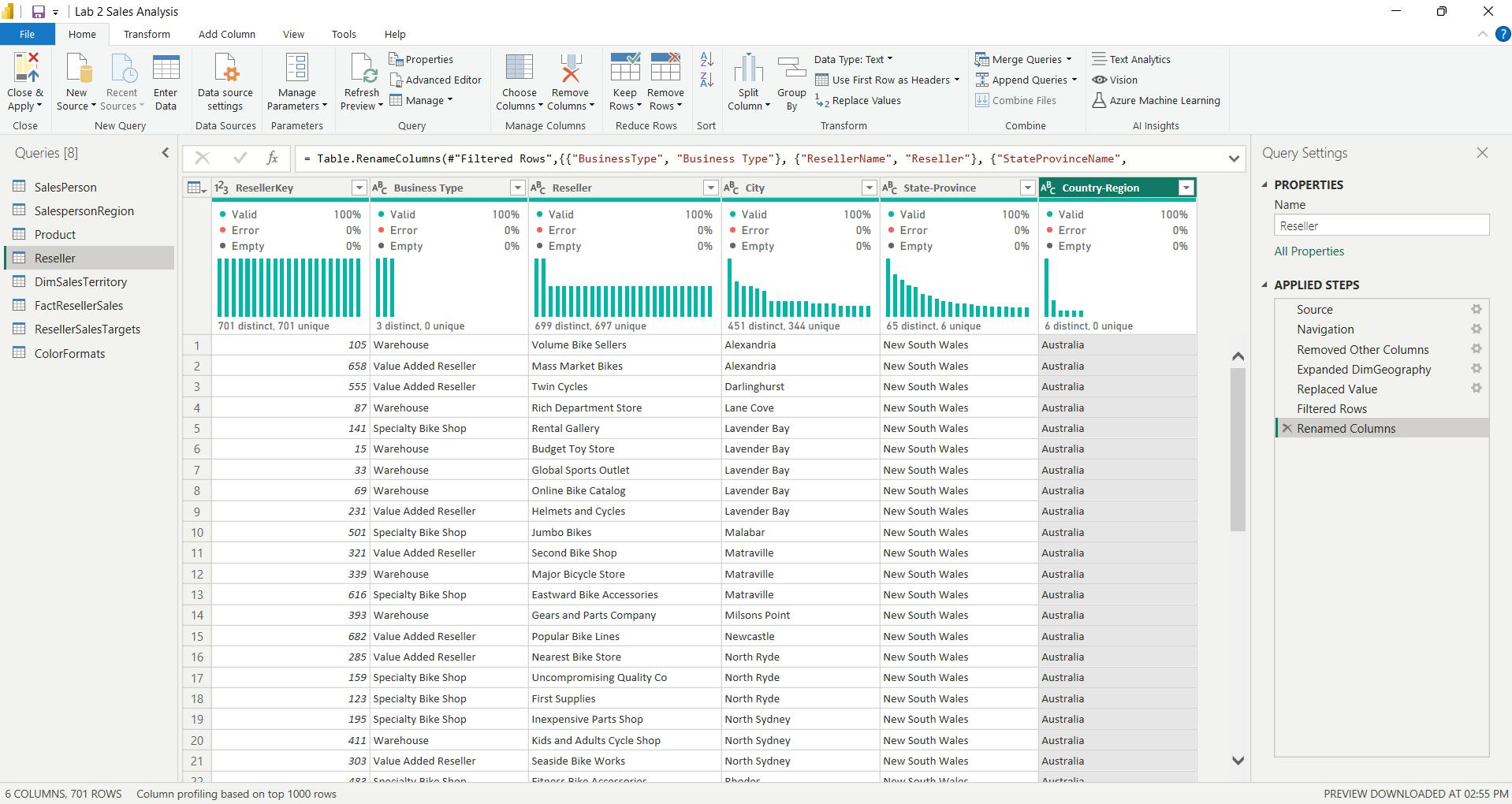

- iv. Configure the Reseller query

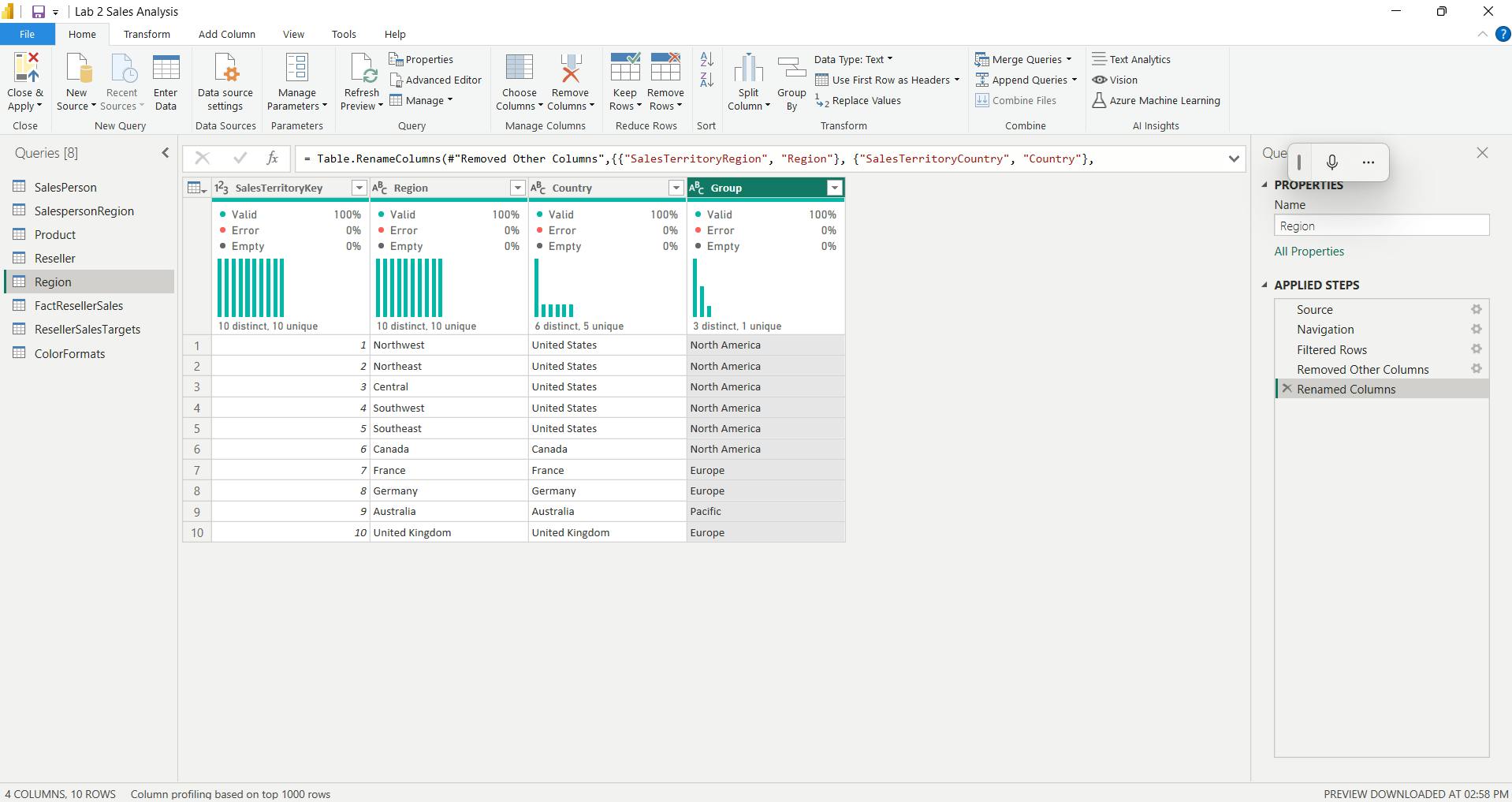

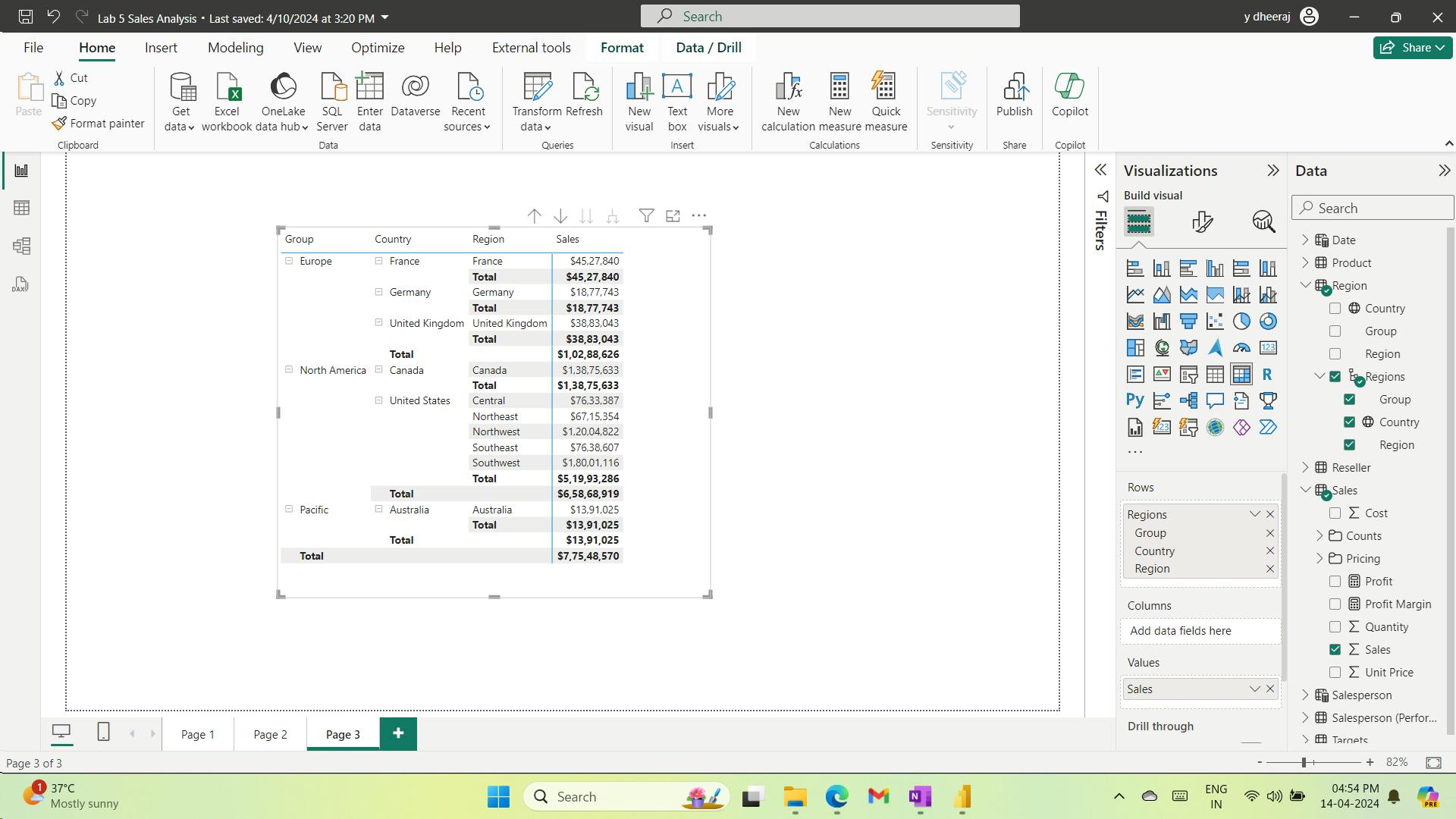

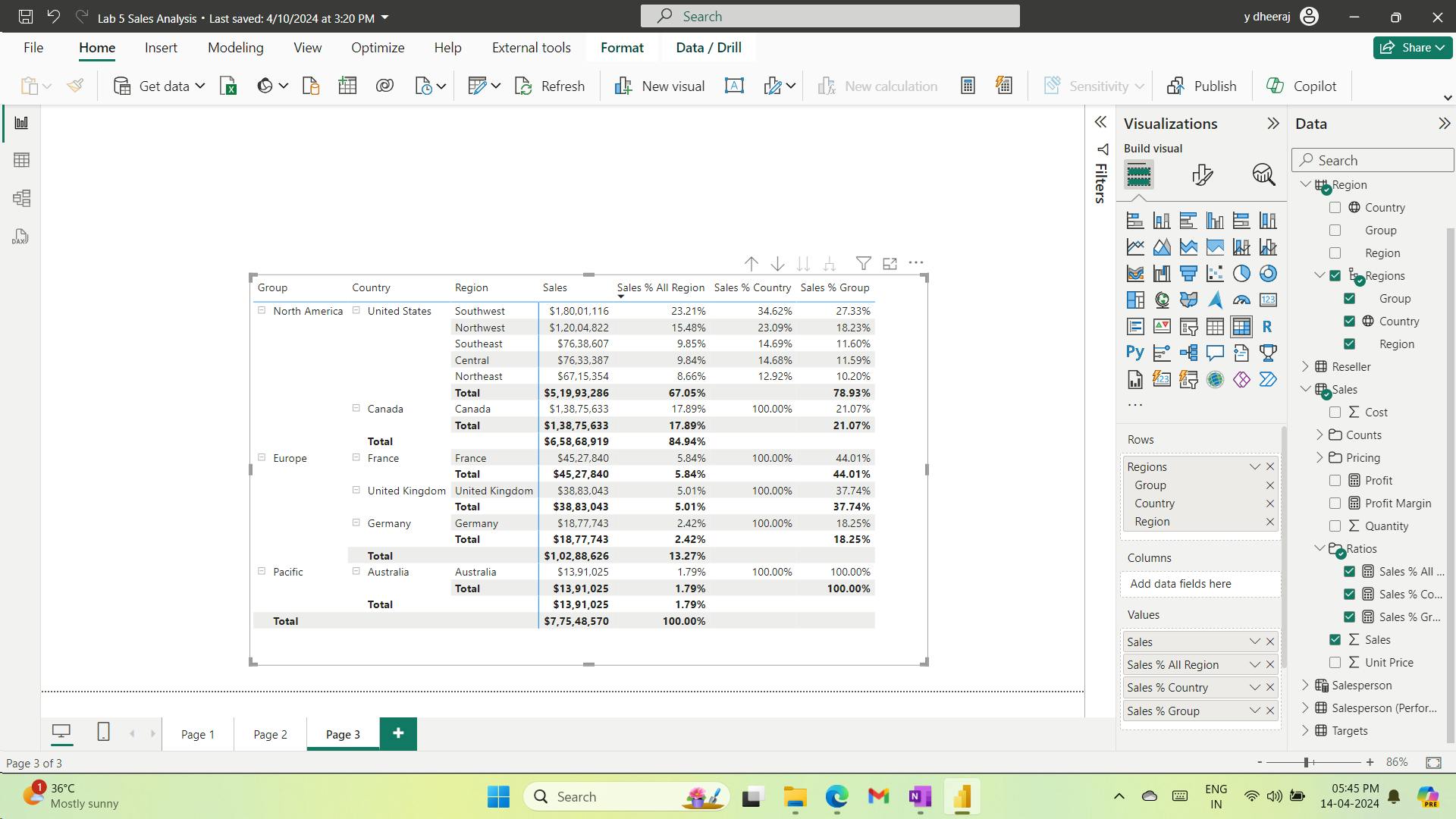

- v. Configure the Region query

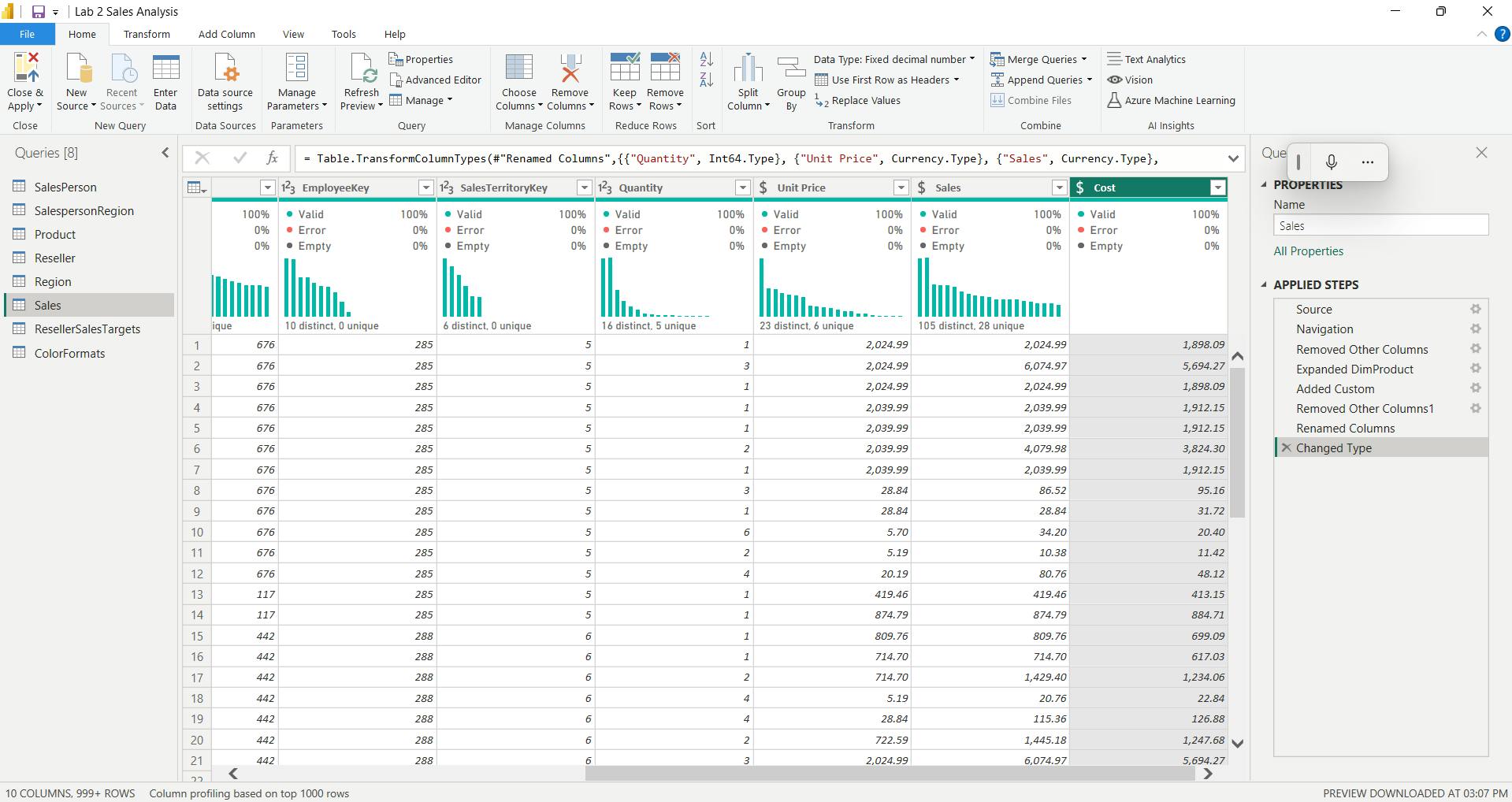

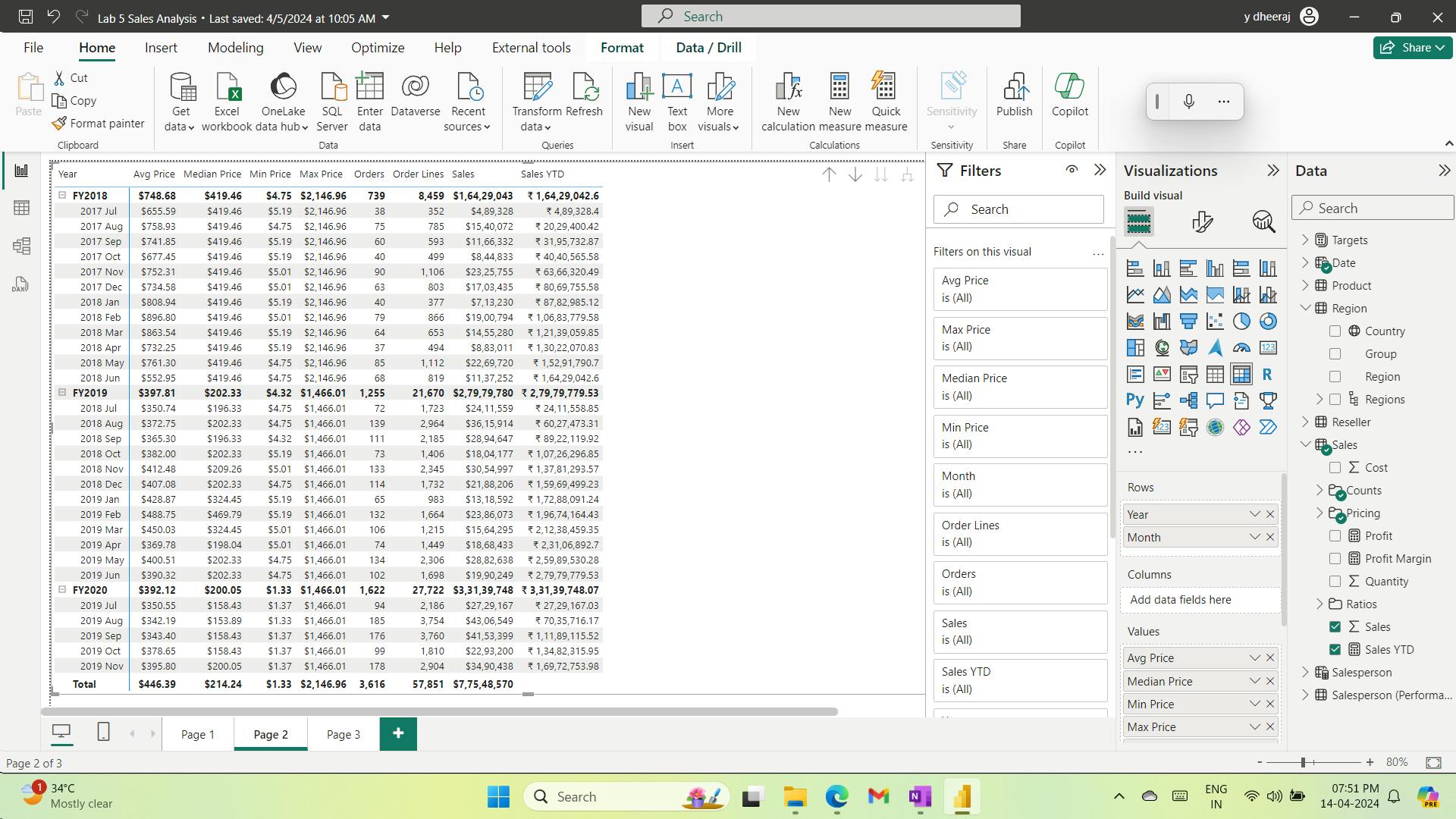

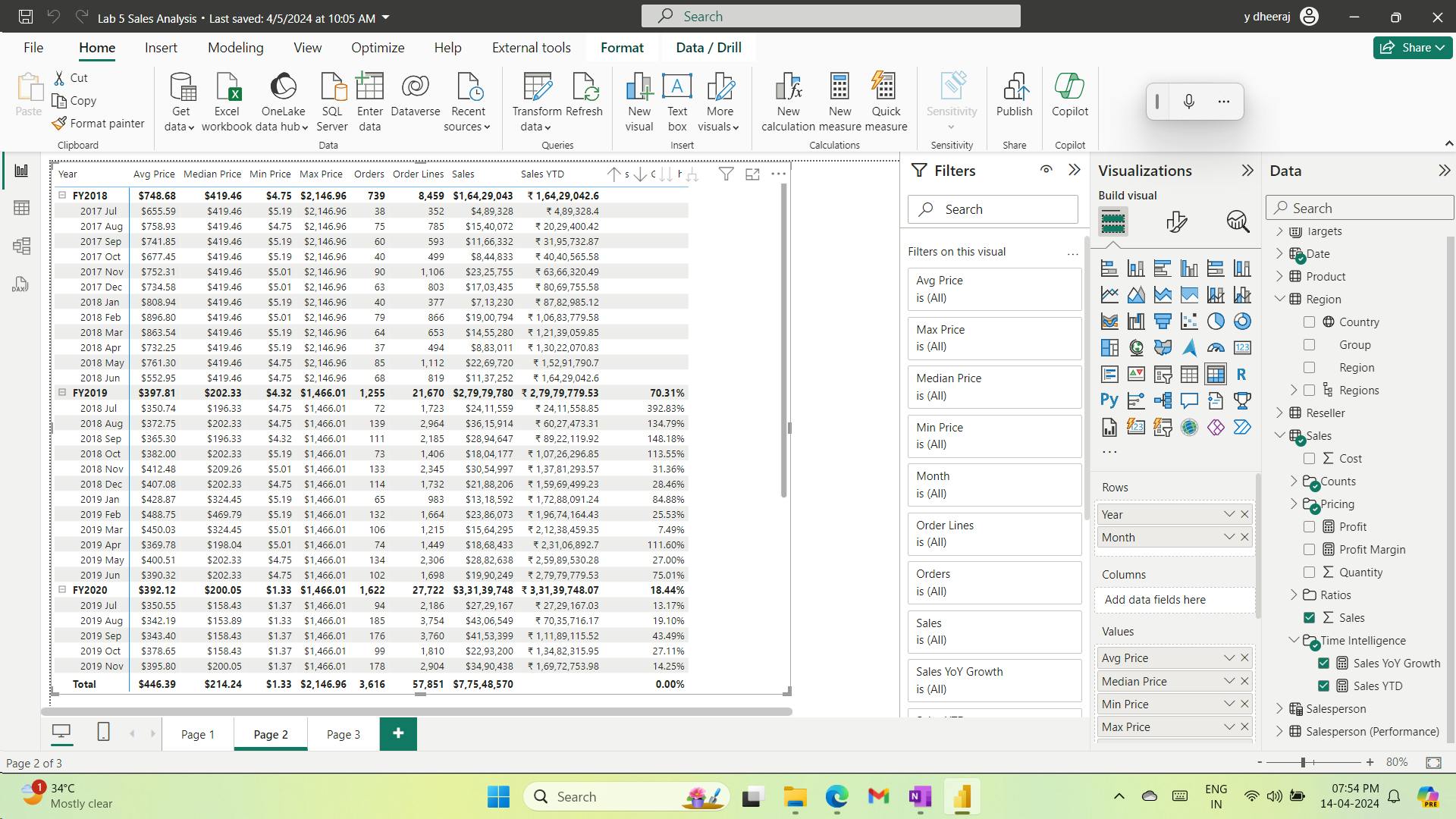

- vi. Configure the Sales query

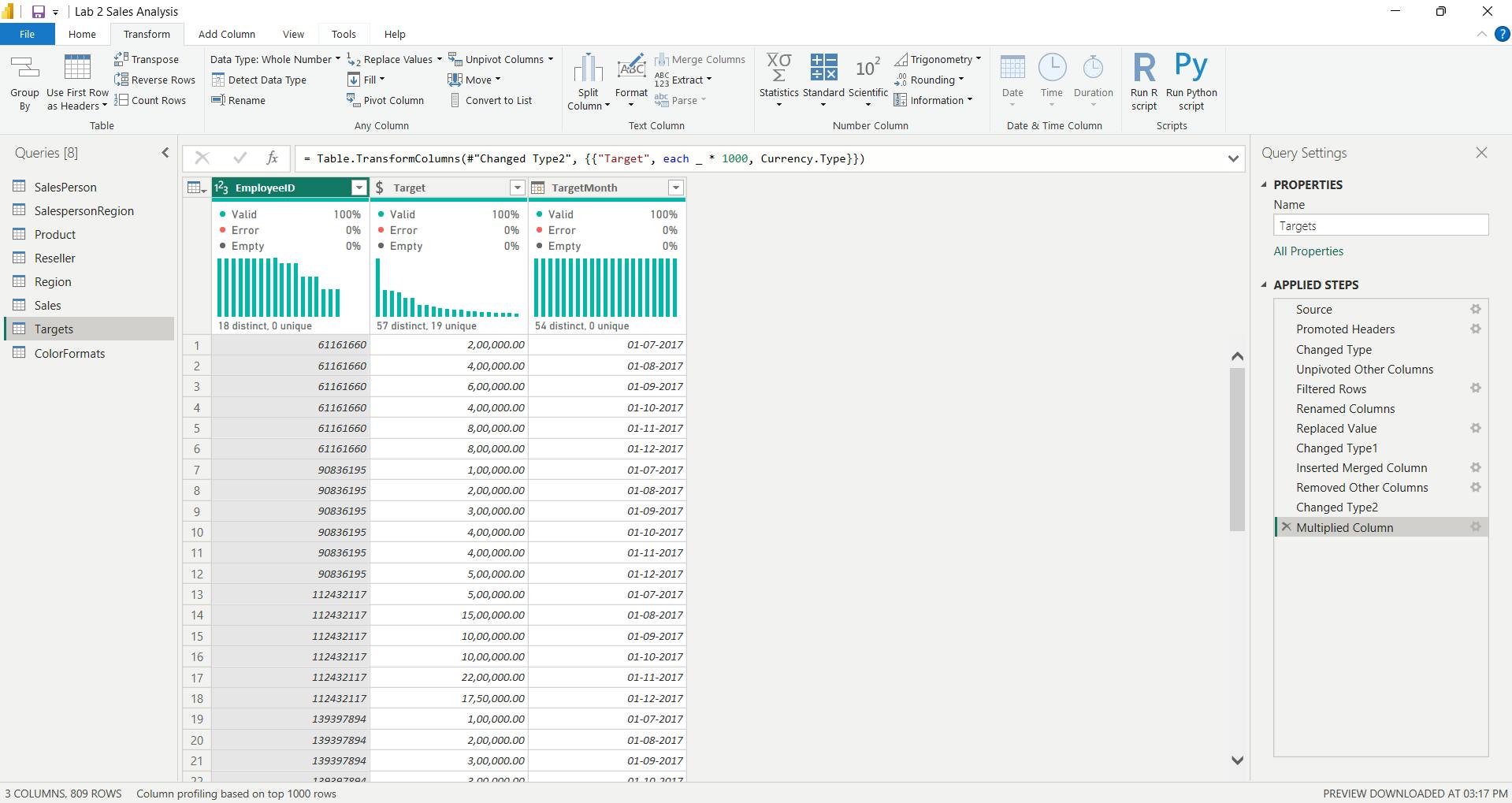

- vii. Configure the Targets query

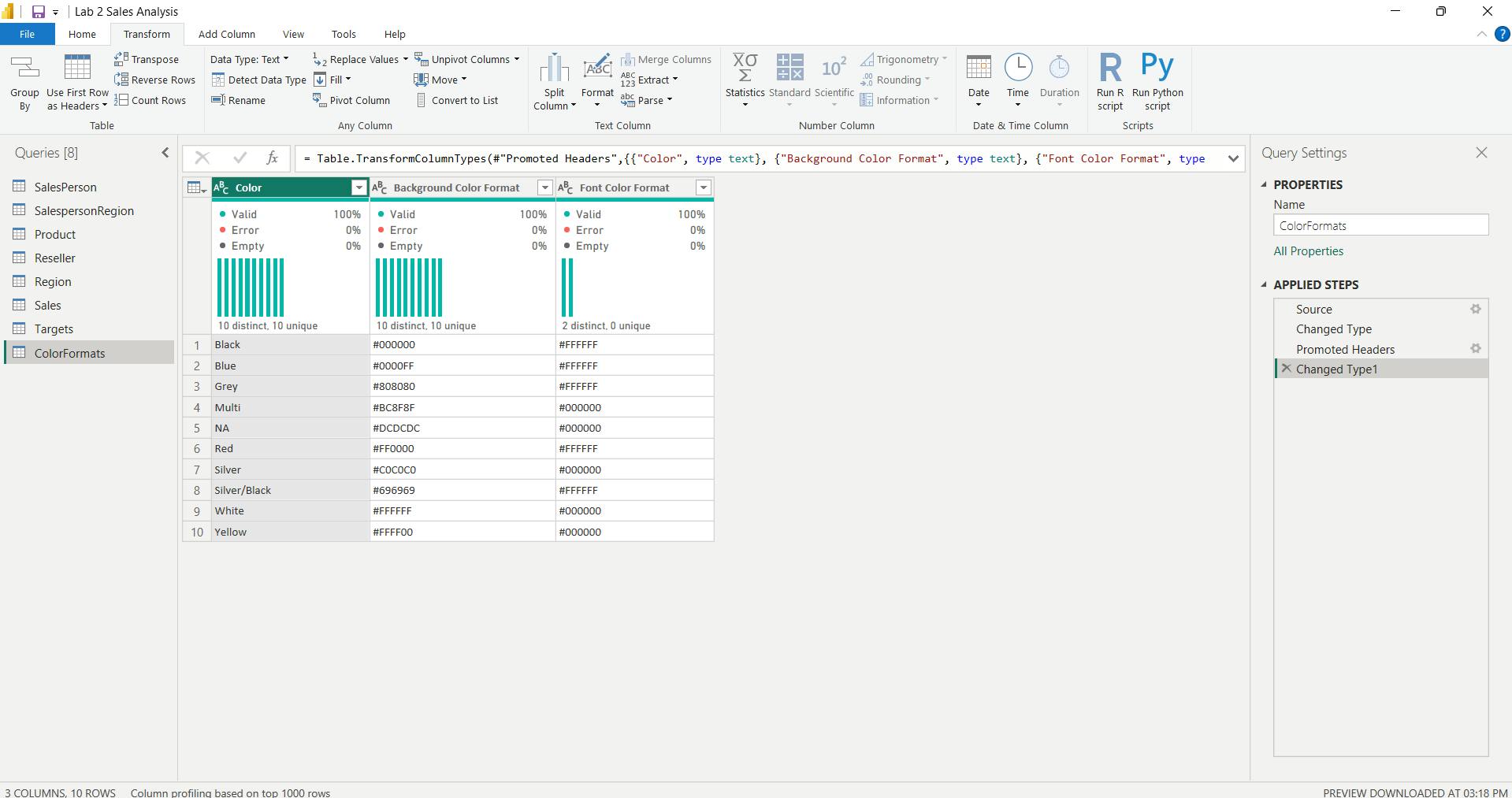

- viii. Configure the ColorFormats query

- ix. Update the Product query

- x. Update the ColorFormats query

- xi. Finish up

- 9. Check your knowledge

- 10. Summary

- Learning objectives

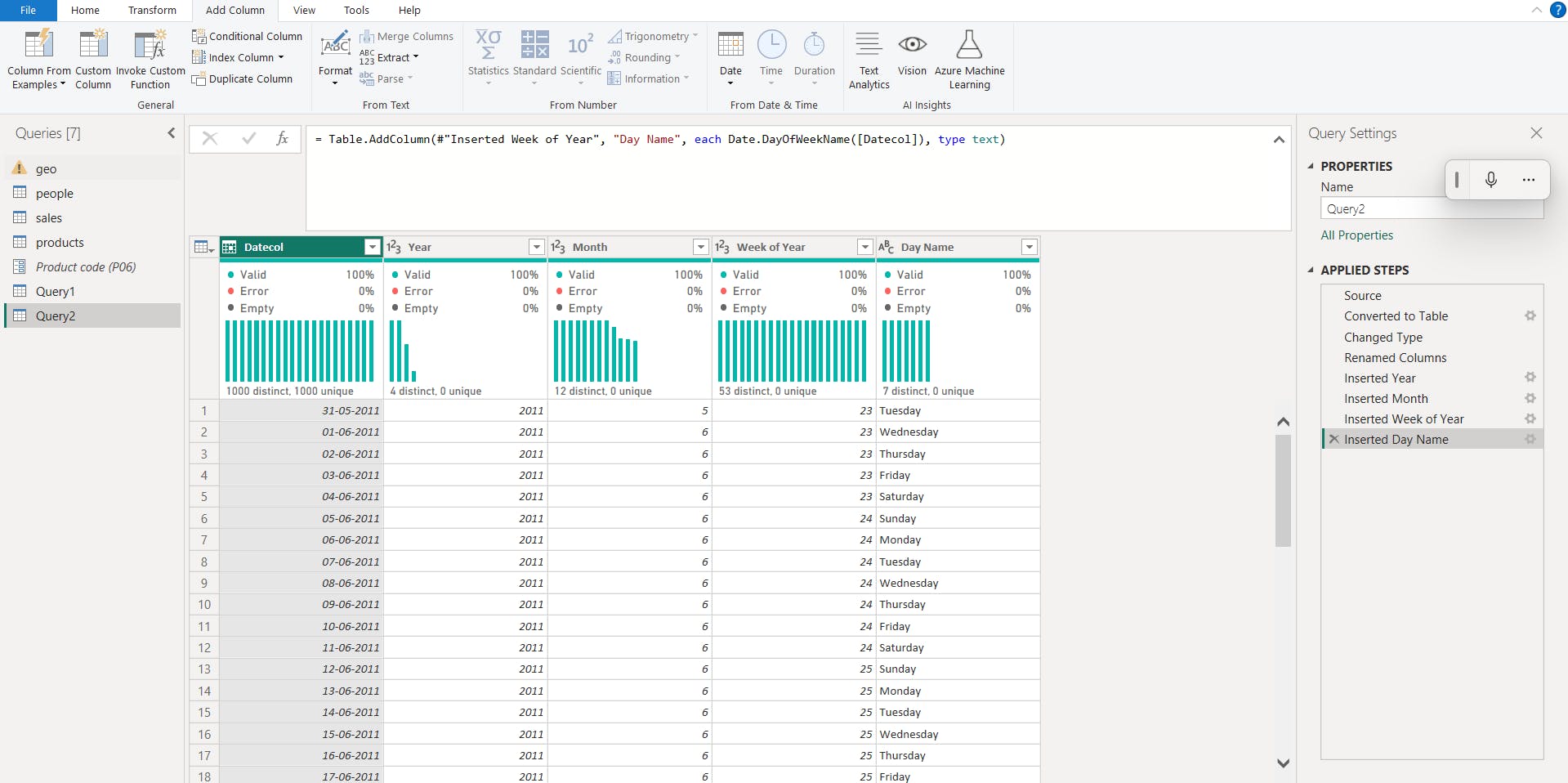

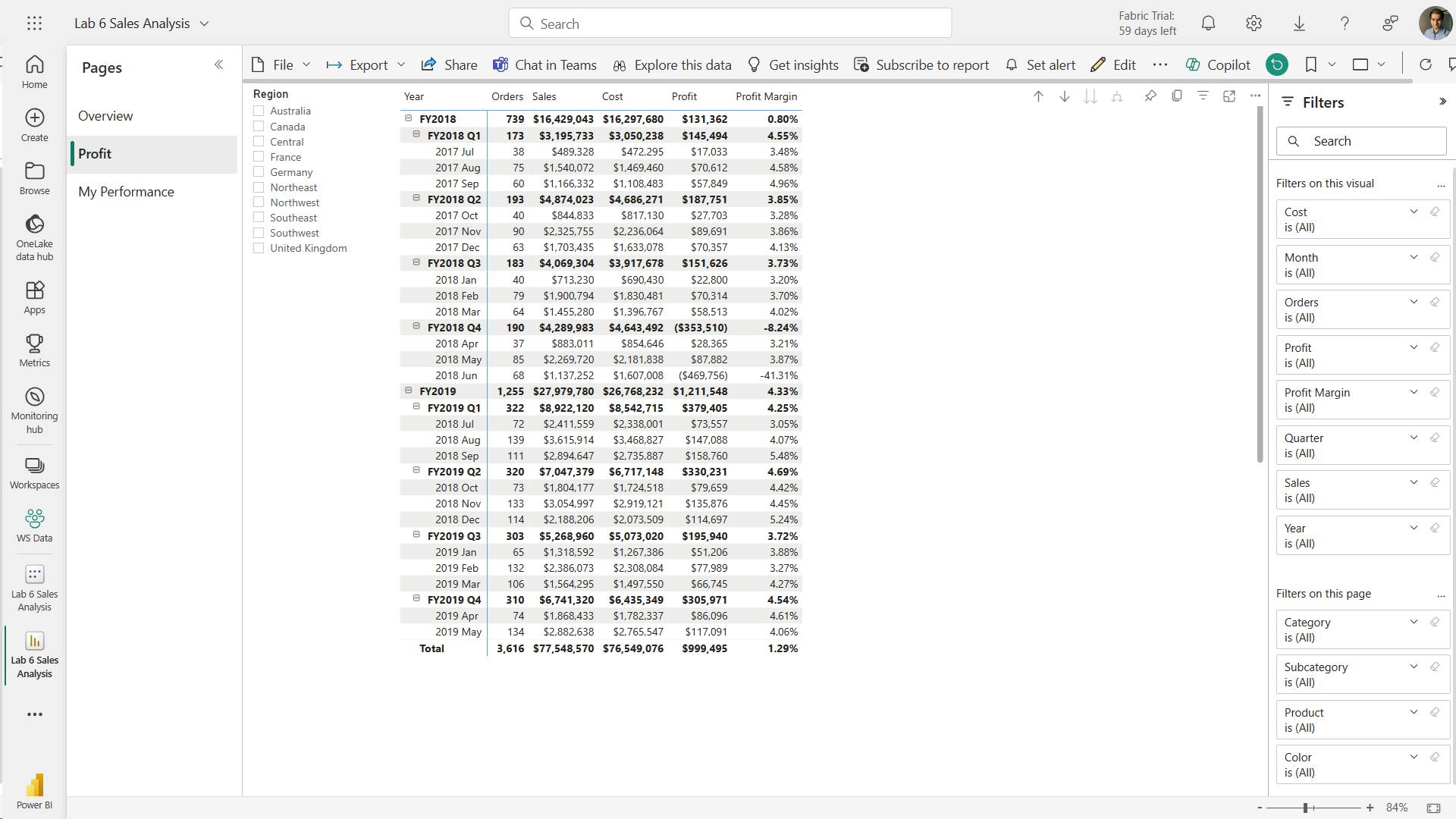

- V. Module 5 Design a semantic model in Power BI

- 1. Introduction

- 2. Work with tables

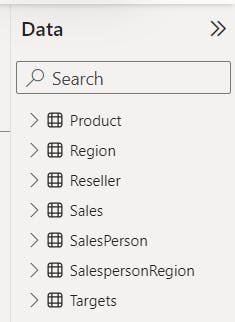

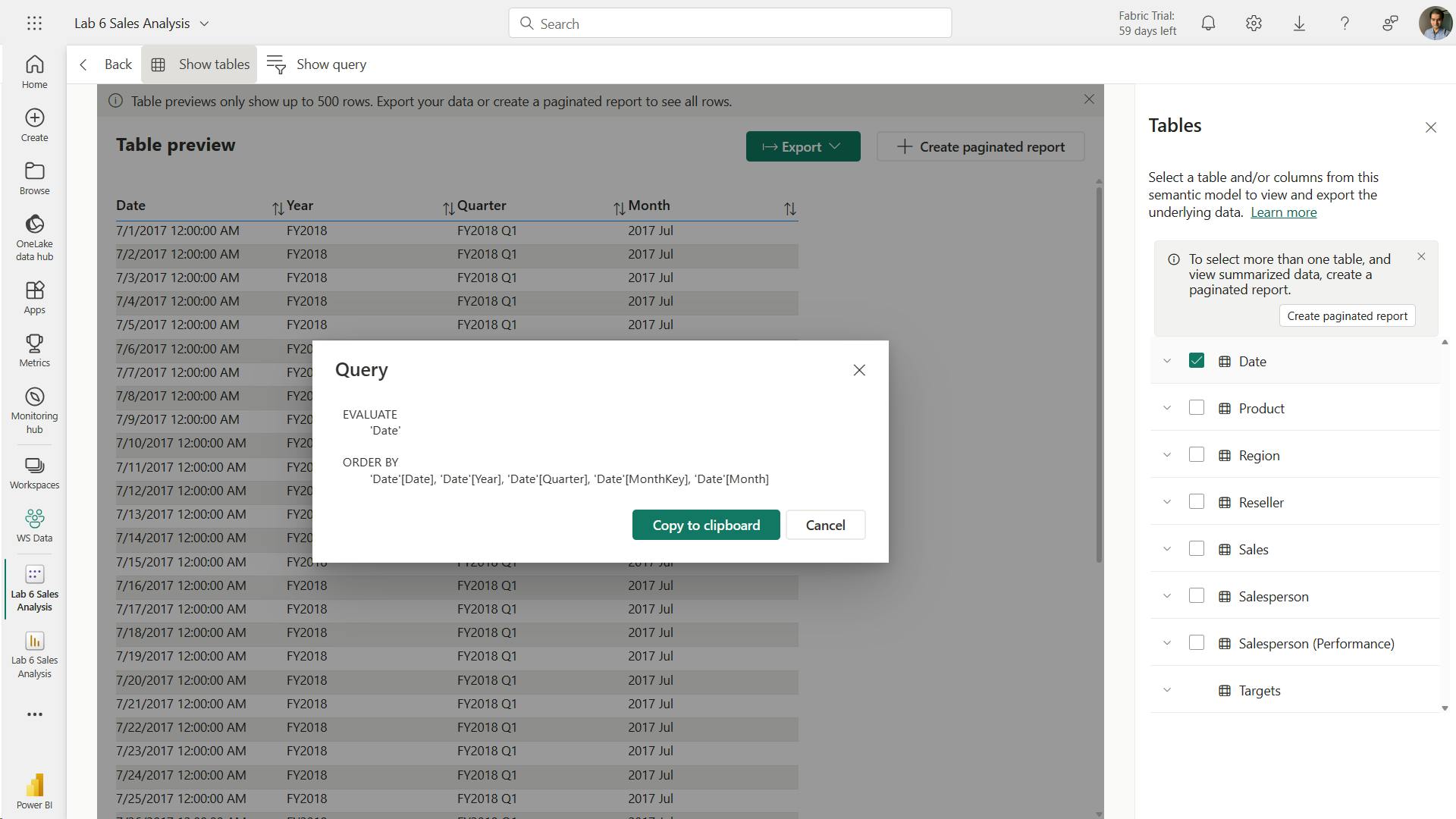

- 3. Create a date table

- 4. Work with dimensions

- 5. Define data granularity

- 6. Work with relationships and cardinality

- 7. Resolve modeling challenges

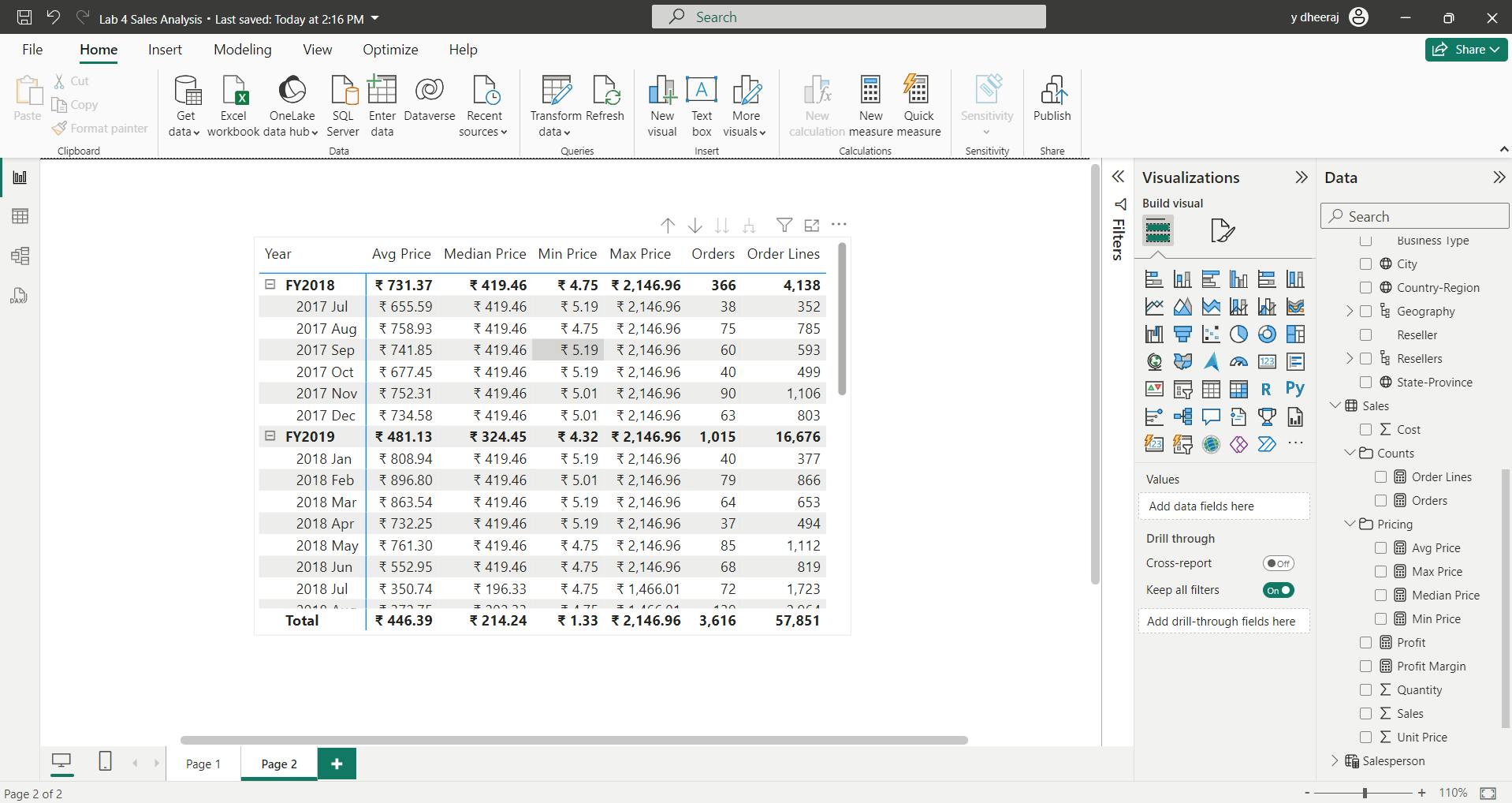

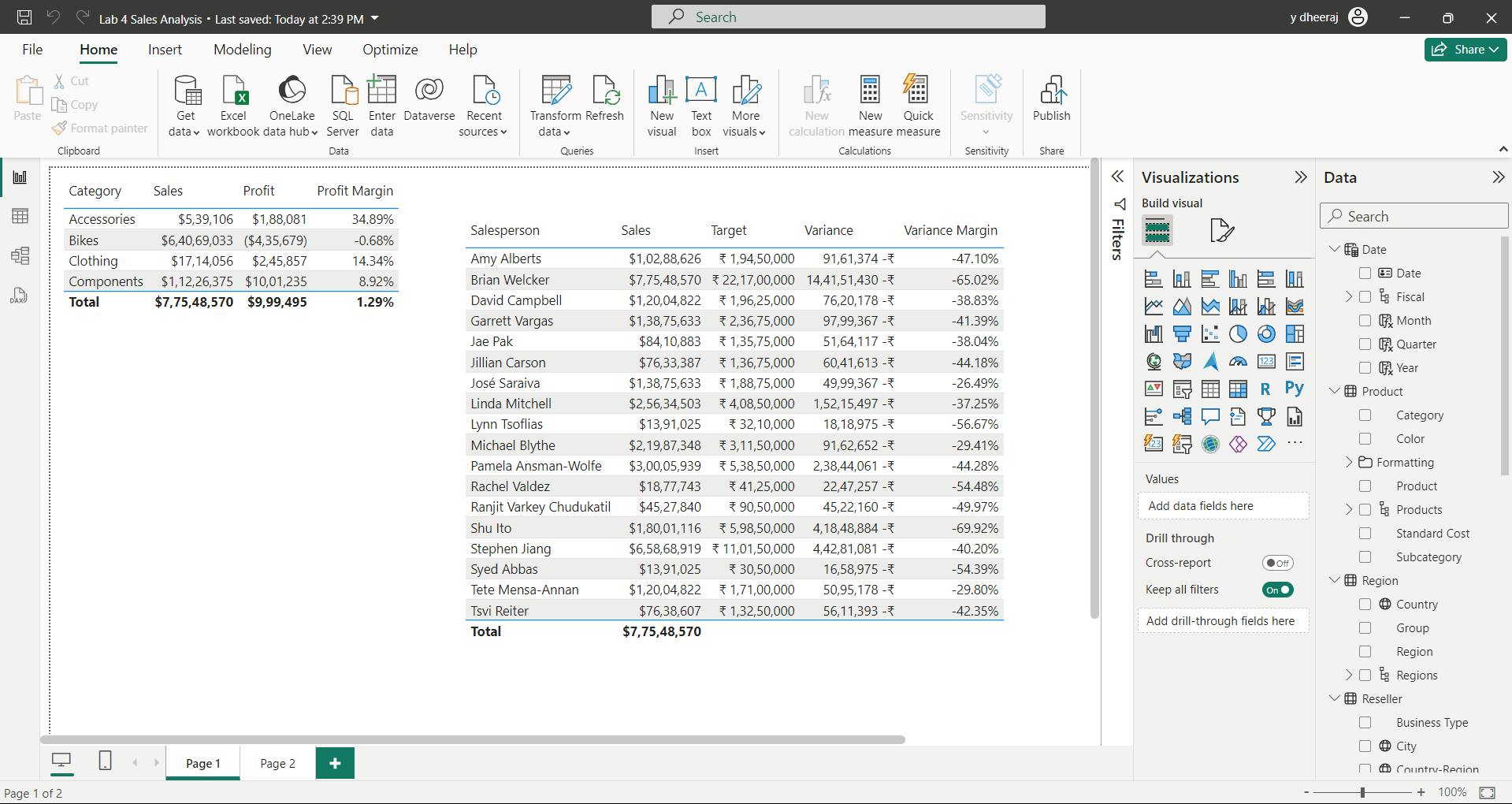

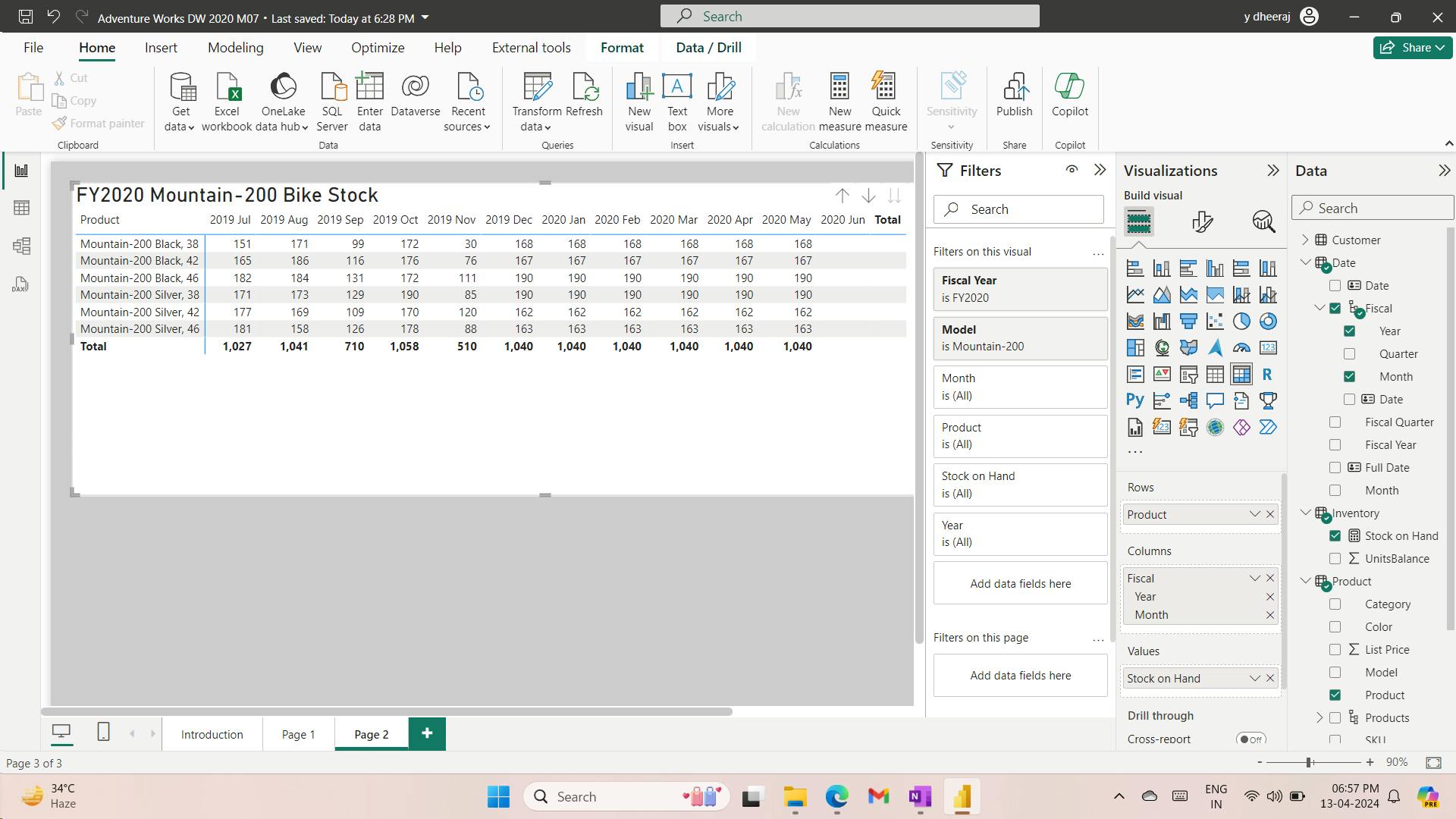

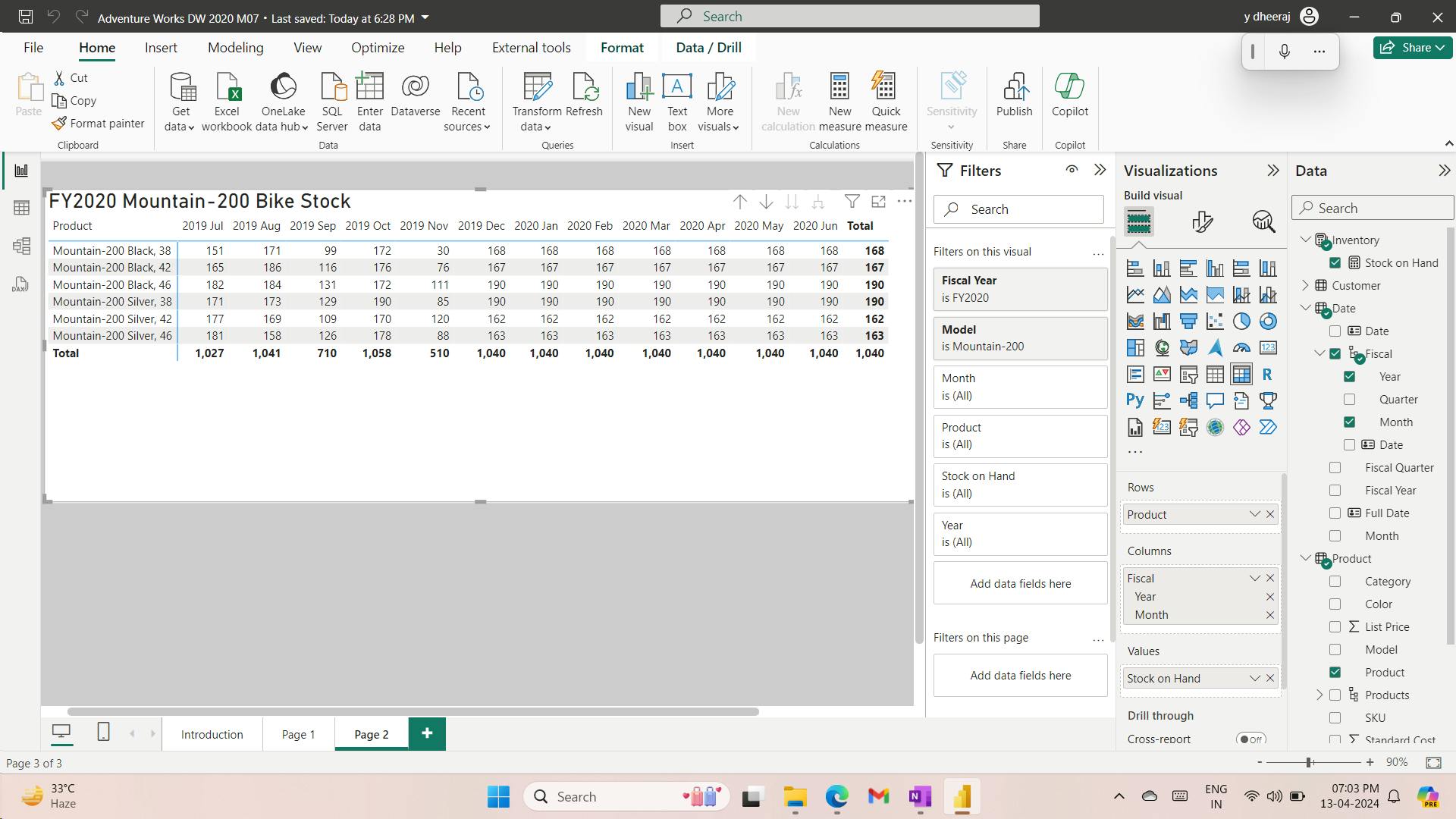

- 8. Exercise - Model data in Power BI Desktop

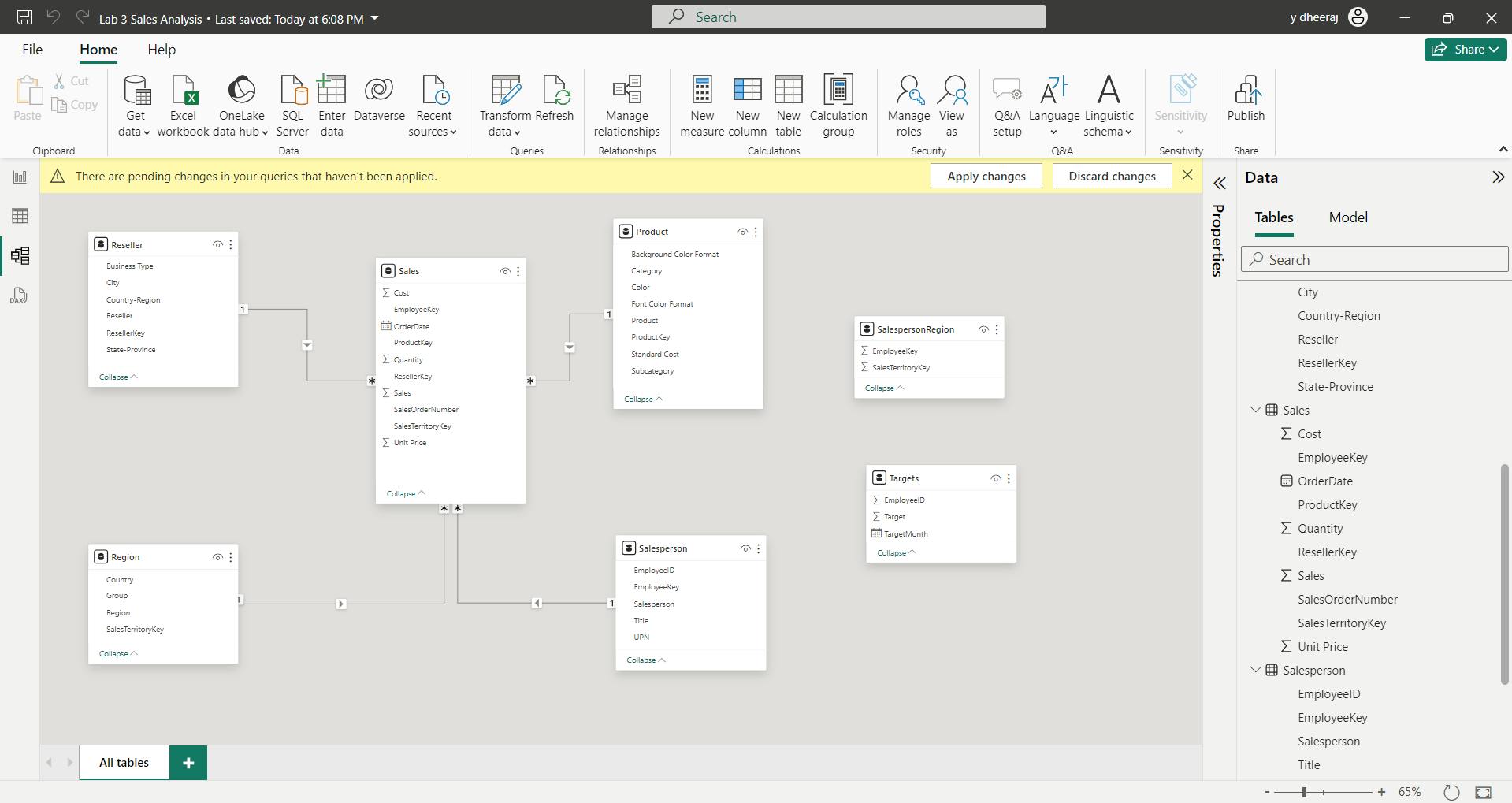

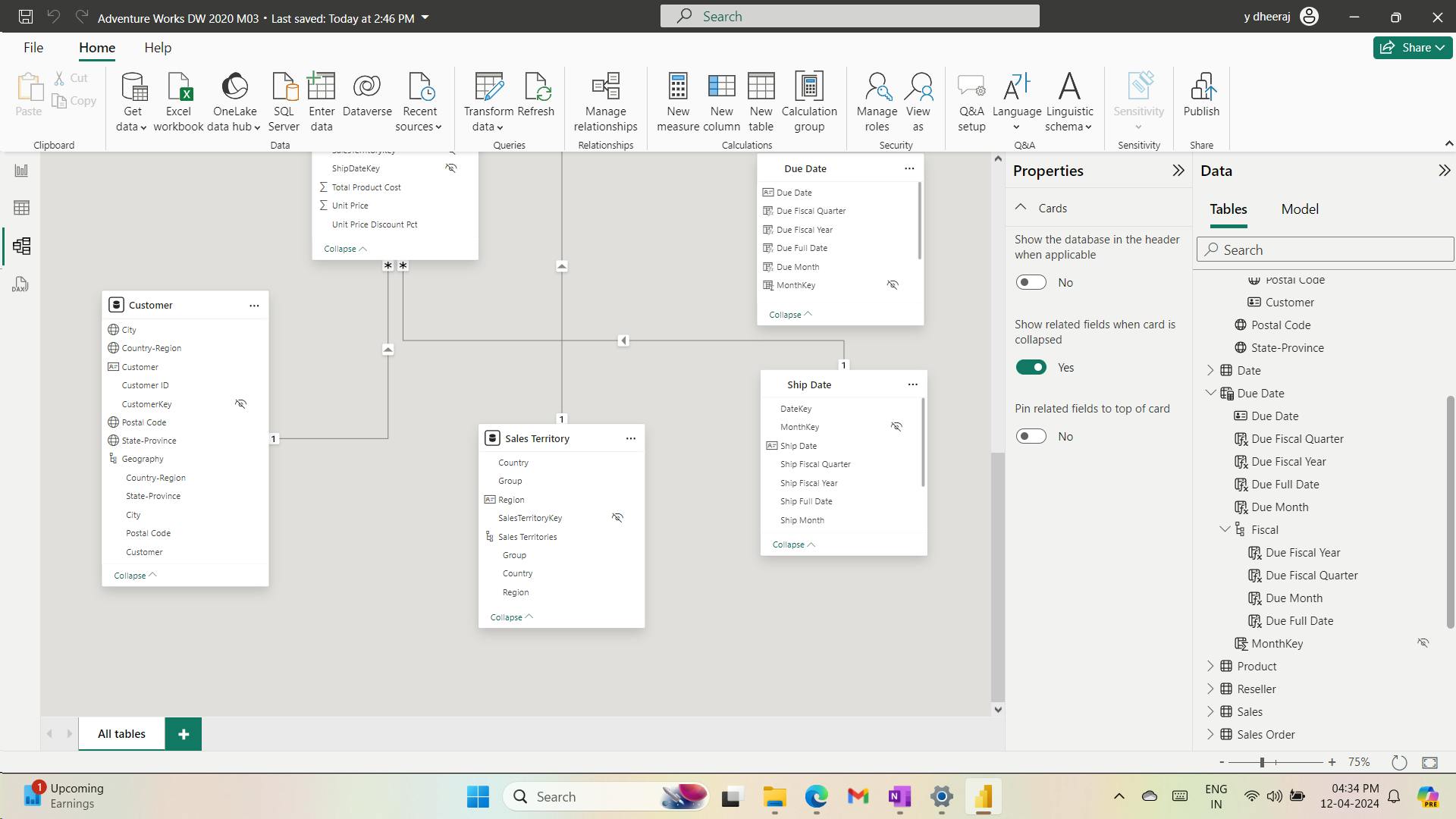

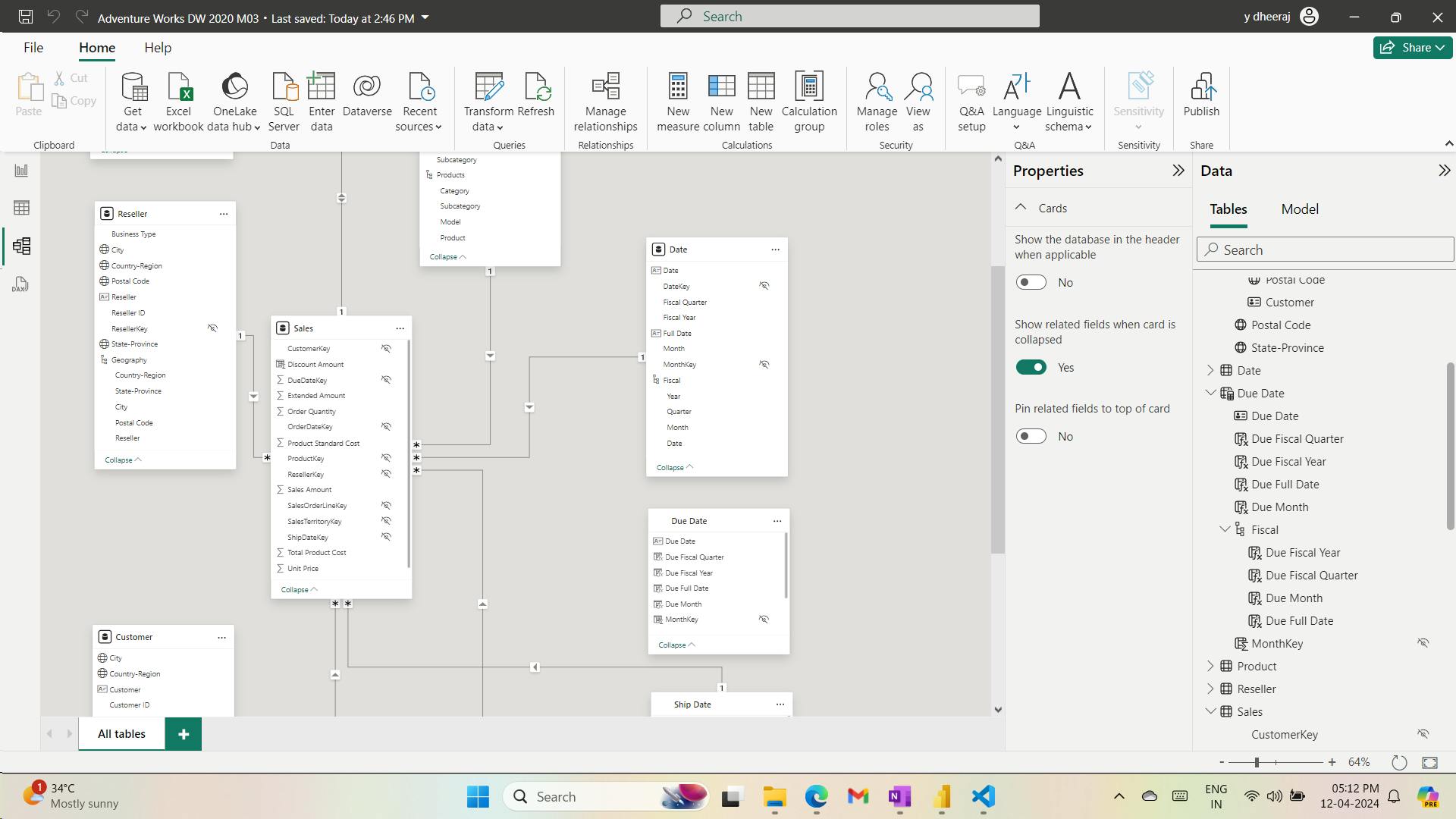

- i. Create model relationships

- ii. Configure Tables

- iii. Configure the Product table

- iv. Configure the Region table

- v. Configure the Reseller table

- vi. Configure the Sales table

- vii. Bulk update properties

- viii. Review the Model Interface

- ix. Review the model interface

- x. Create Quick Measures

- xi. Create quick measures

- xii. Create a many-to-many relationship

- xiii. Relate the Targets table

- 9. Check your knowledge

- 10. Summary

- Learning objectives

- VI. Module 6 Add measures to Power BI Desktop models

- VII. Module 7 Add calculated tables and columns to Power BI Desktop models

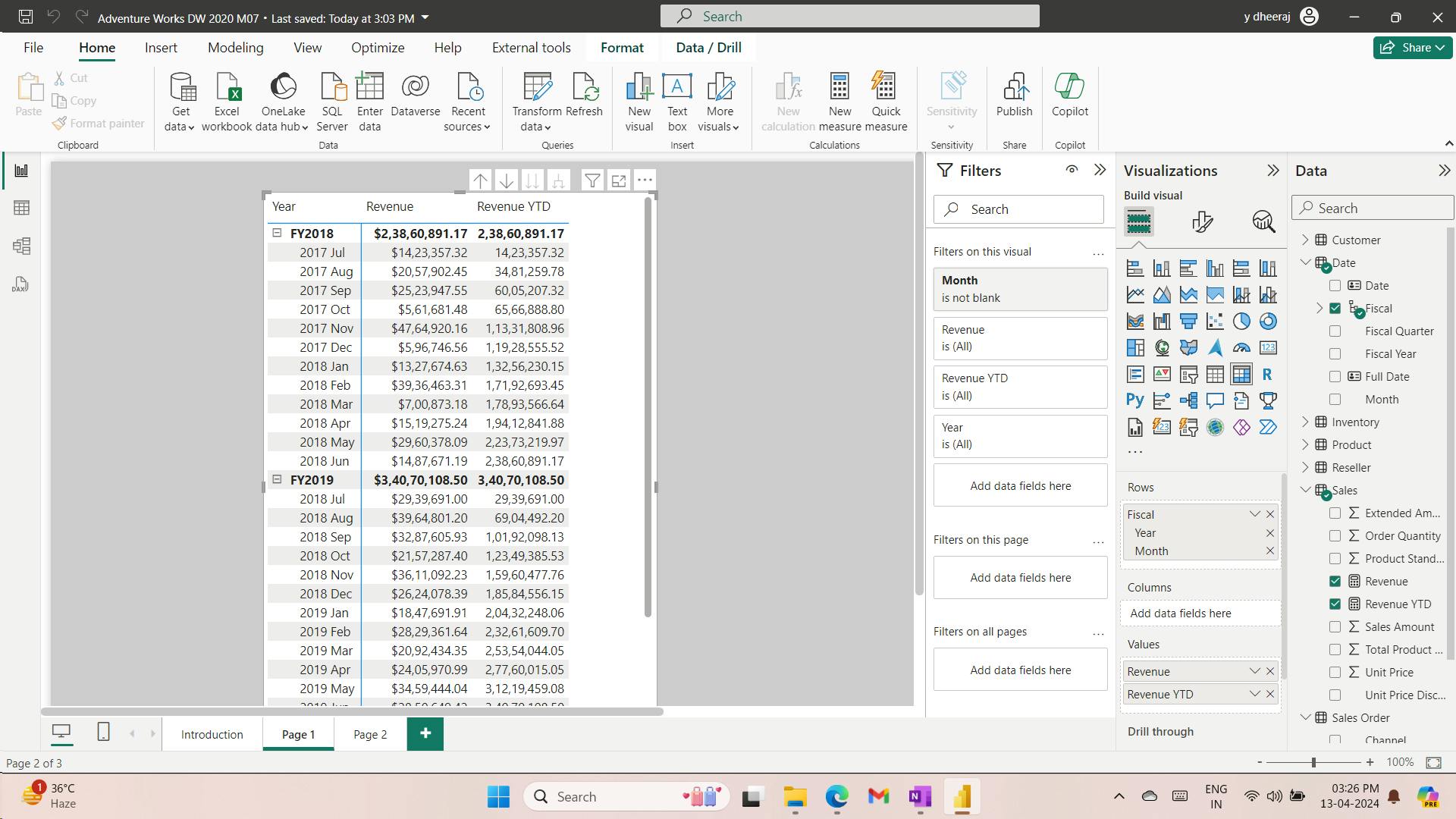

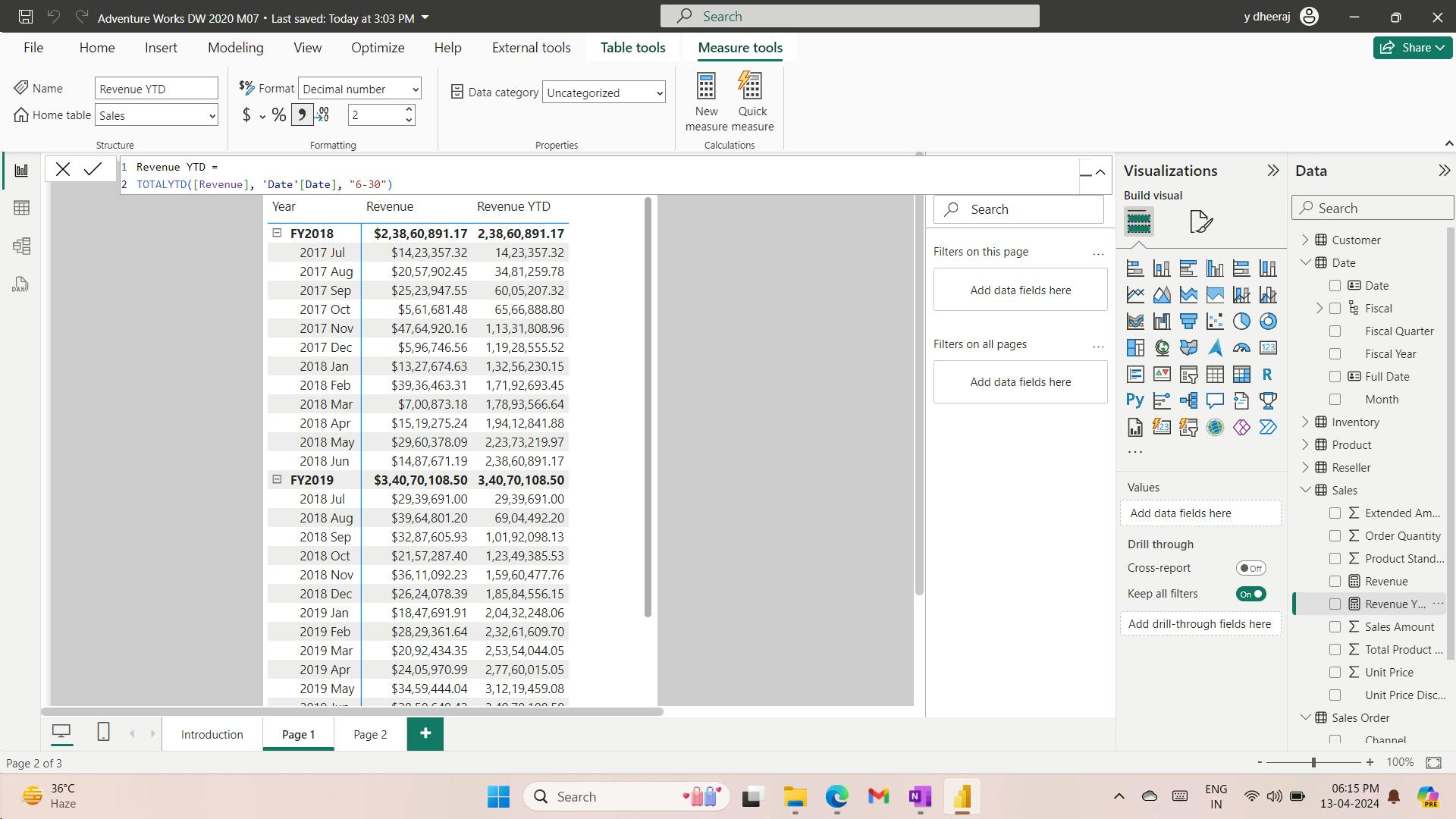

- VIII. Module 8 Use DAX time intelligence functions in Power BI Desktop models

- IX. Module 9 Optimize a model for performance in Power BI

- 1. Introduction to performance optimization

- 2. Review performance of measures, relationships, and visuals

- 3. Use variables to improve performance and troubleshooting

- 4. Reduce cardinality

- 5. Optimize DirectQuery models with table level storage

- 6. Create and manage aggregations

- 7. Check your knowledge

- 8. Summary

- Learning objectives

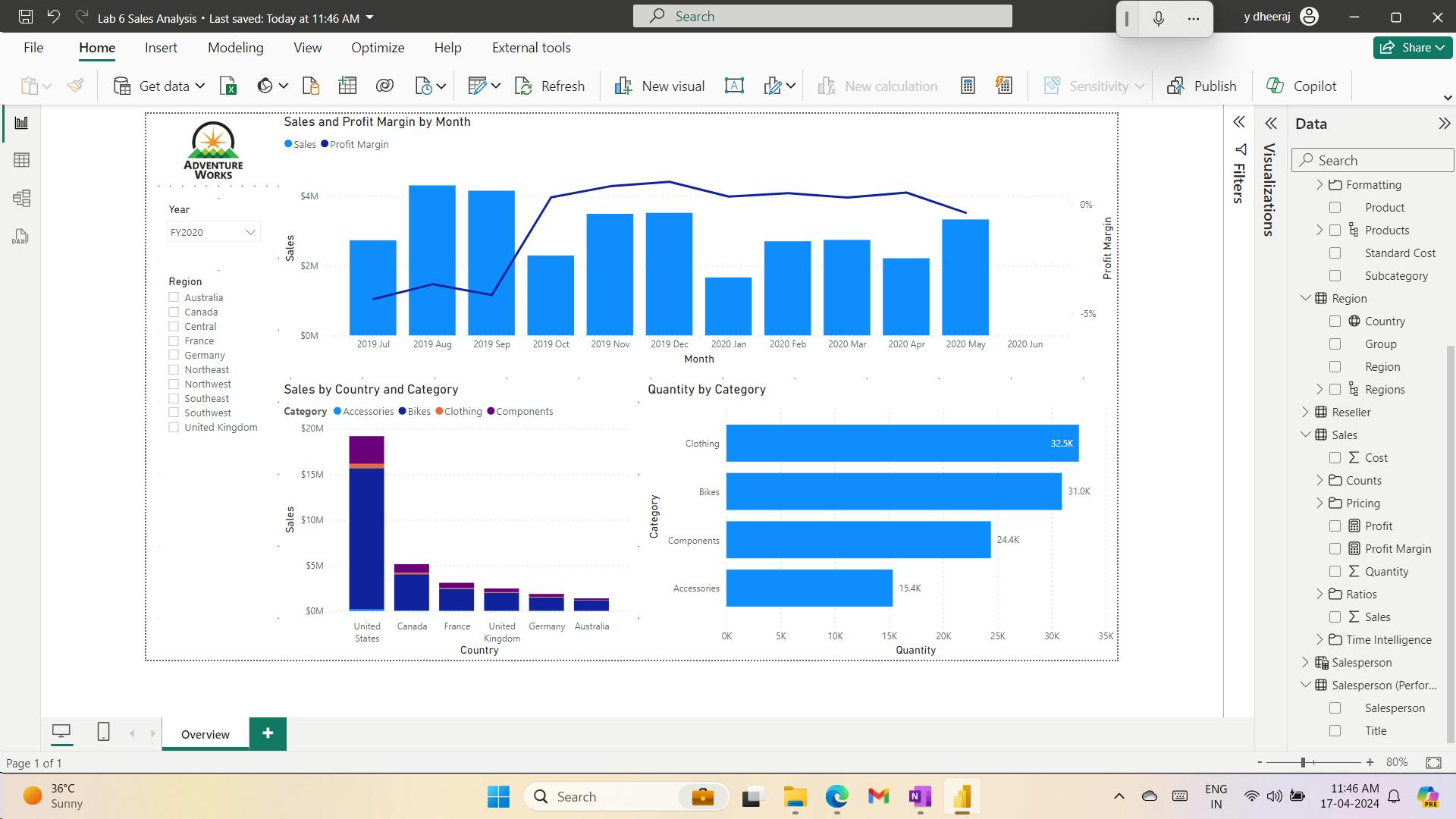

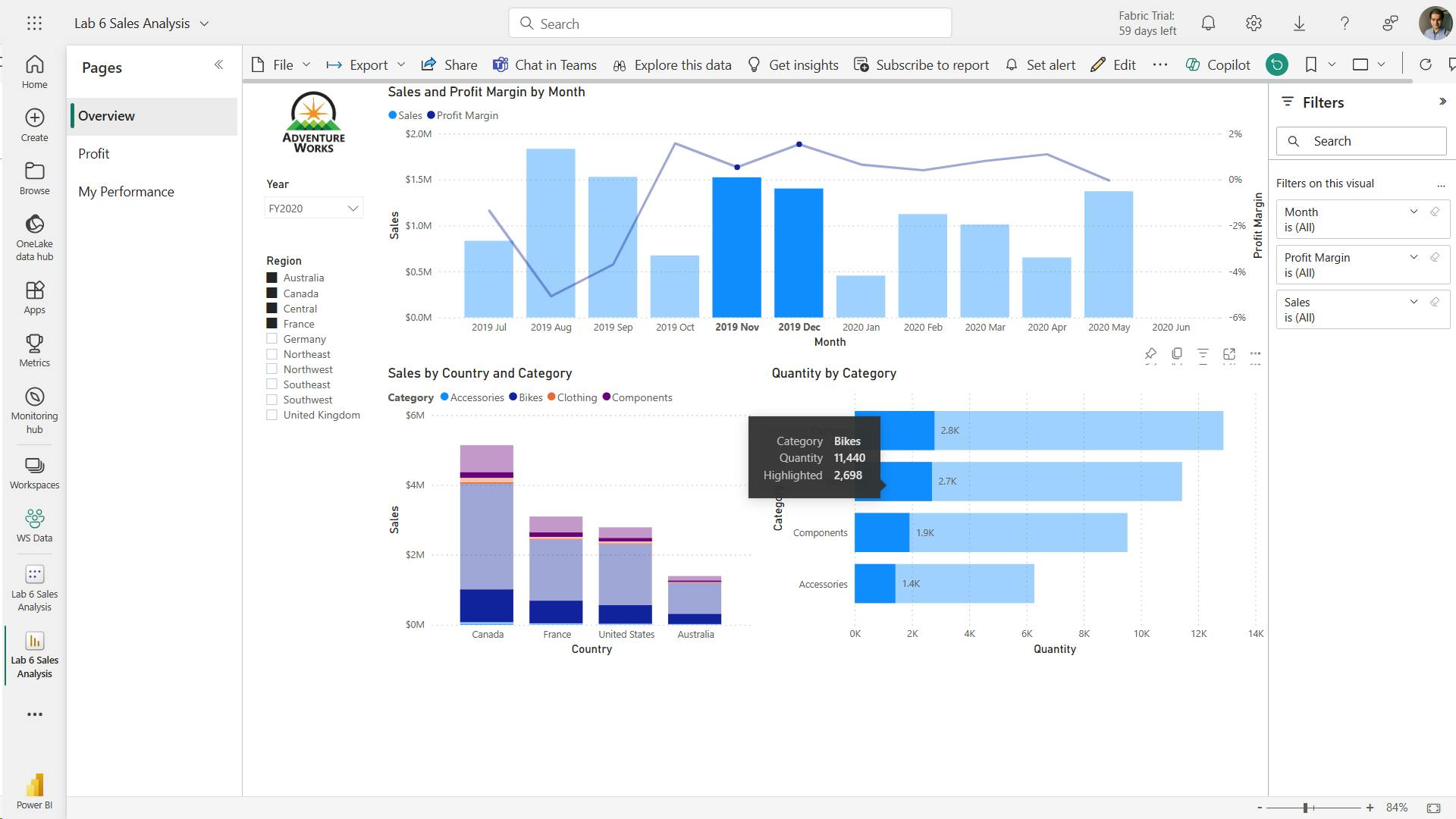

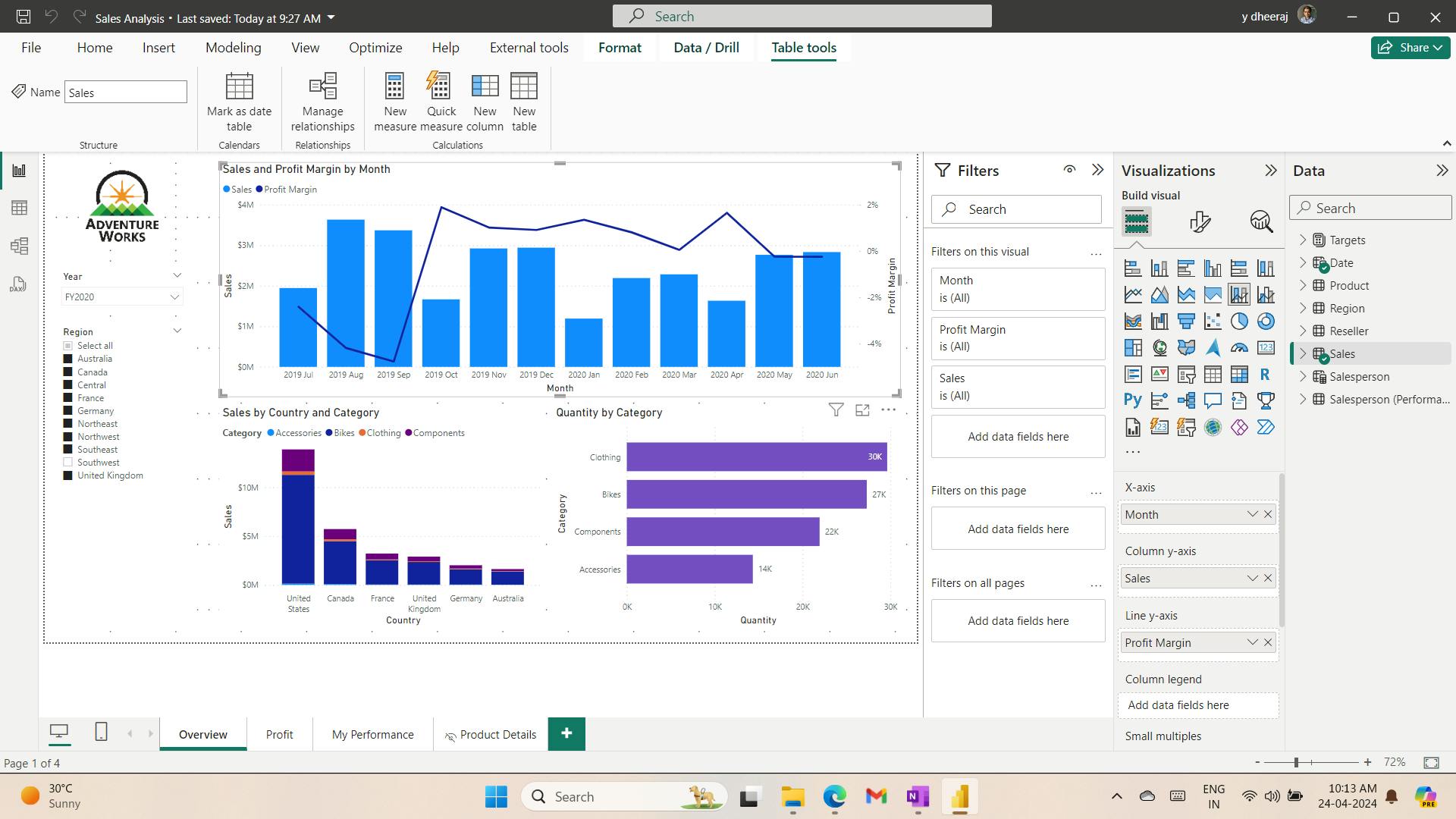

- X. Module 10 Design Power BI reports

- 1. Introduction

- 2. Design the analytical report layout

- 3. Design visually appealing reports

- 4. Report objects

- 5. Select report visuals

- 6. Select report visuals to suit the report layout

- 7. Format and configure visualizations

- 8. Work with key performance indicators

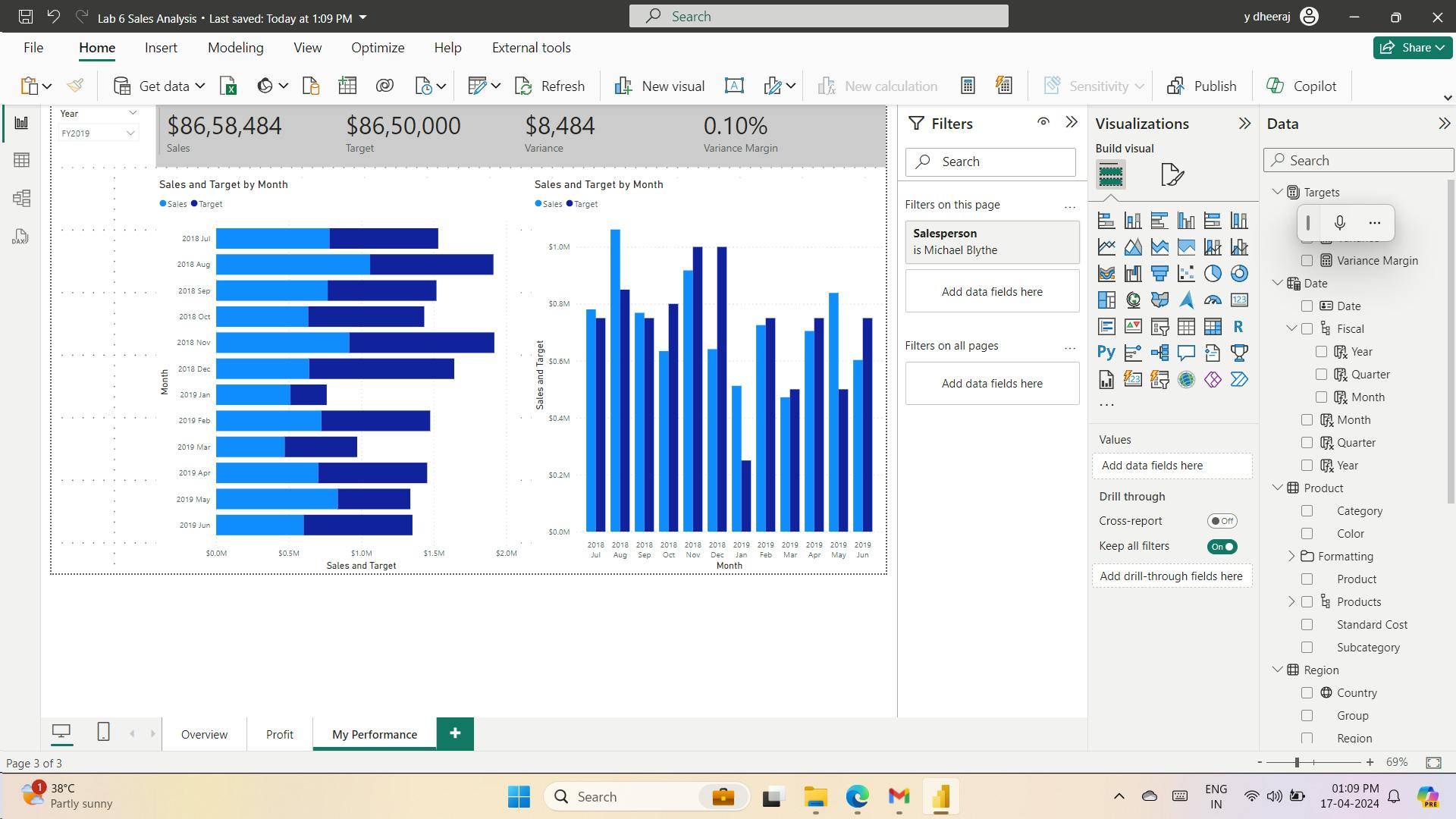

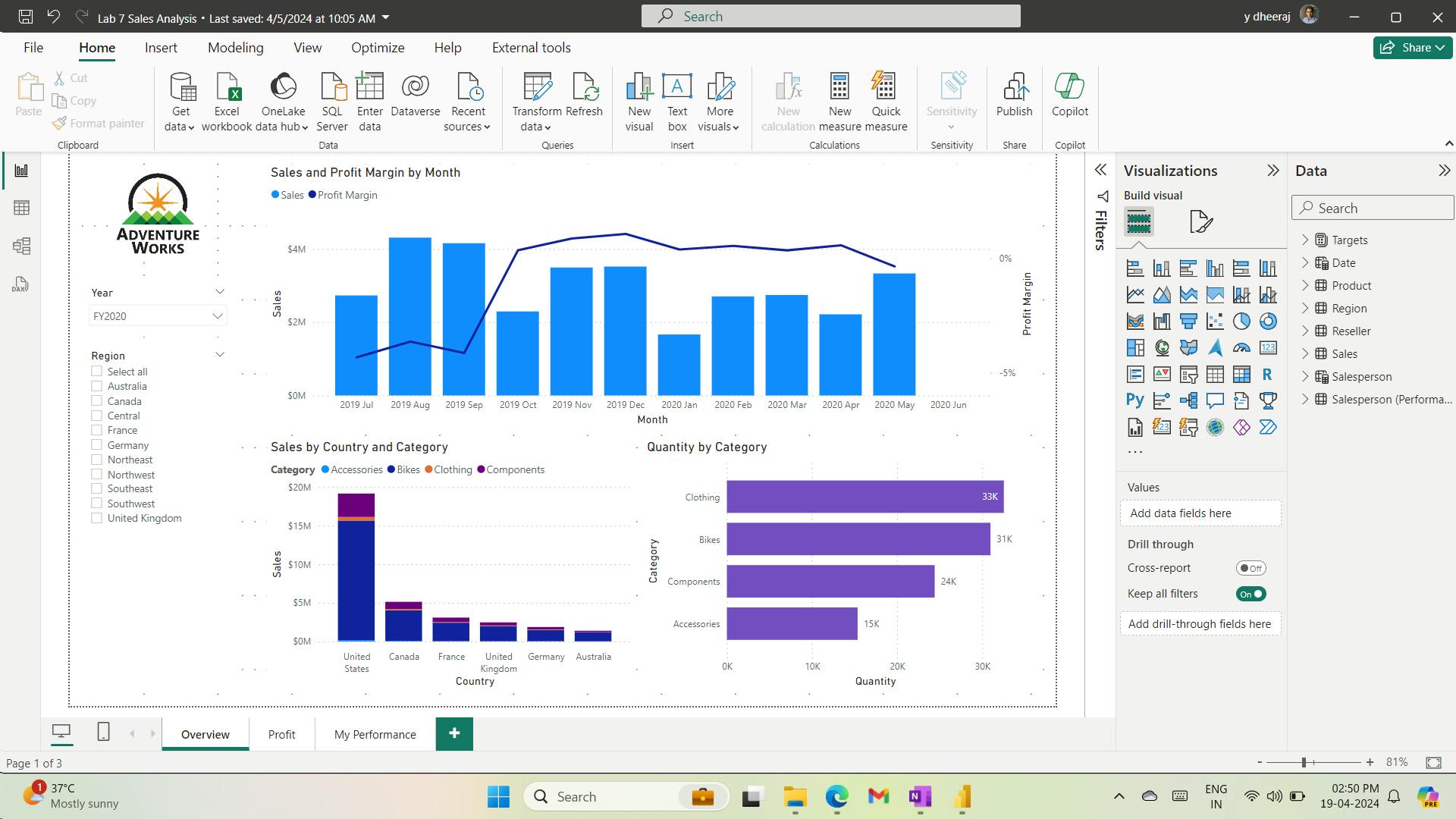

- 9. Exercise - Design a report in Power BI desktop

- 10. Check your knowledge

- 11. Summary

- Learning objectives

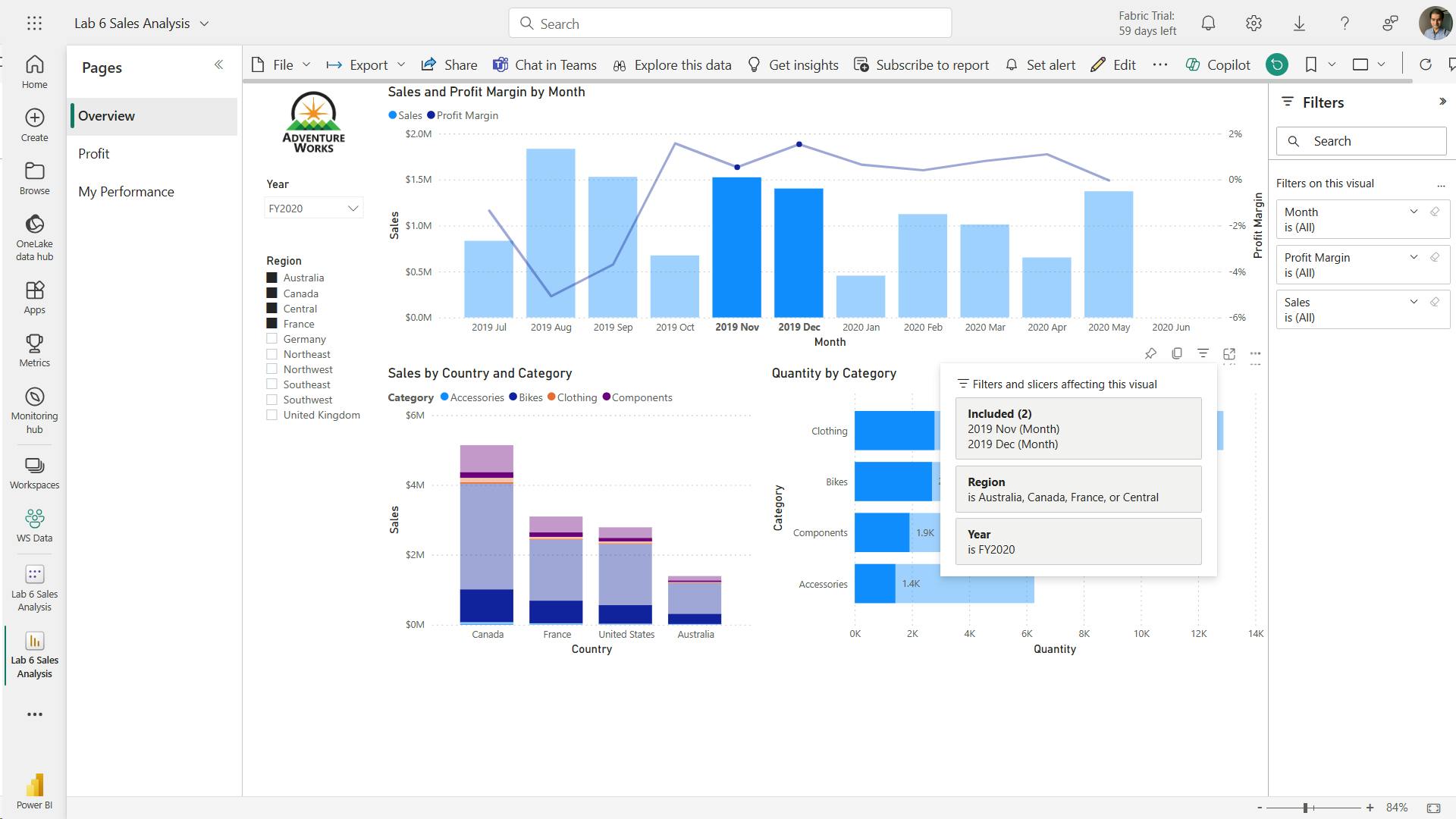

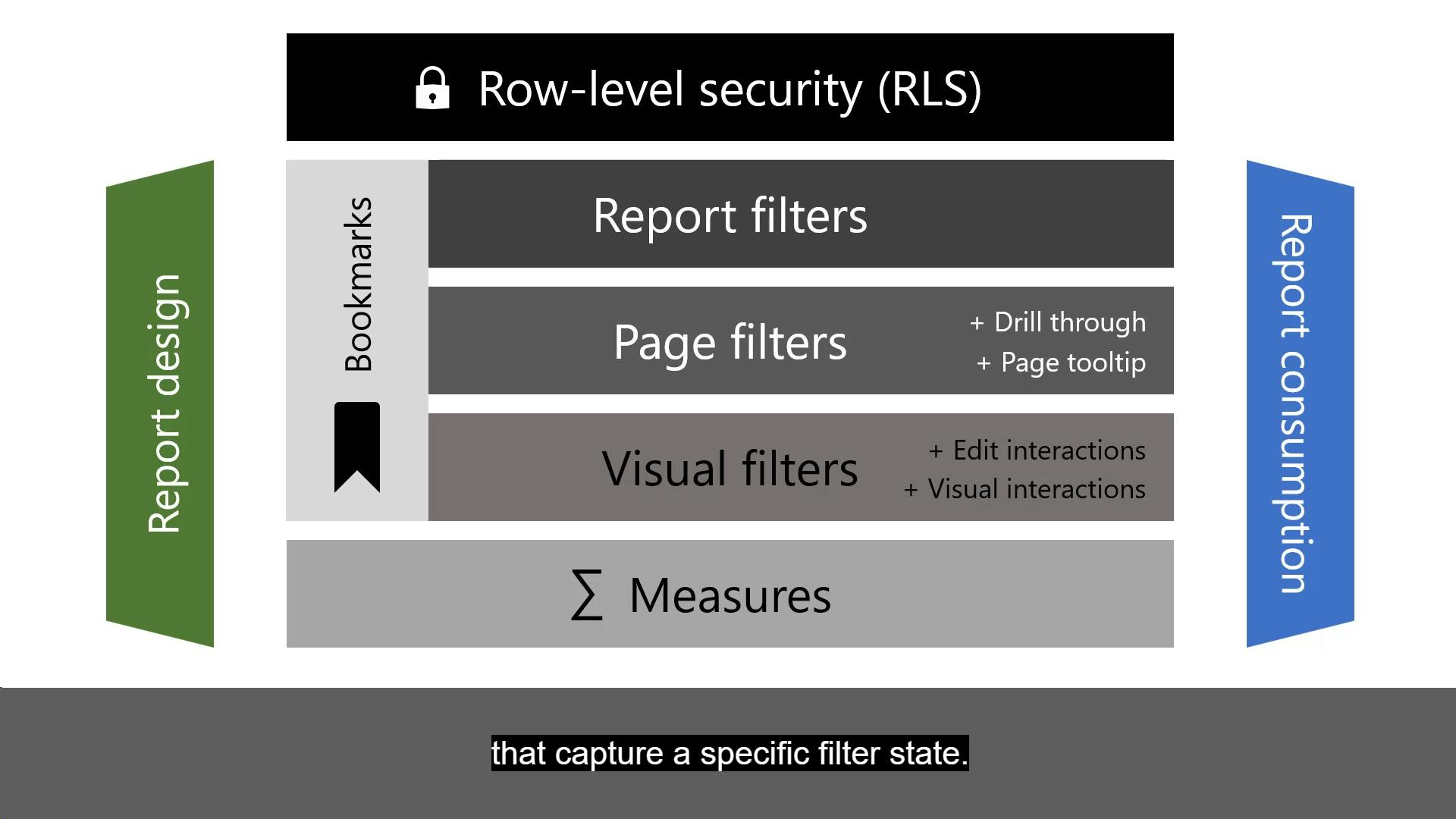

- XI. Module 11 Configure Power BI report filters

- 1. Introduction to designing reports for filtering

- 2. Apply filters to the report structure

- 3. Apply filters with slicers

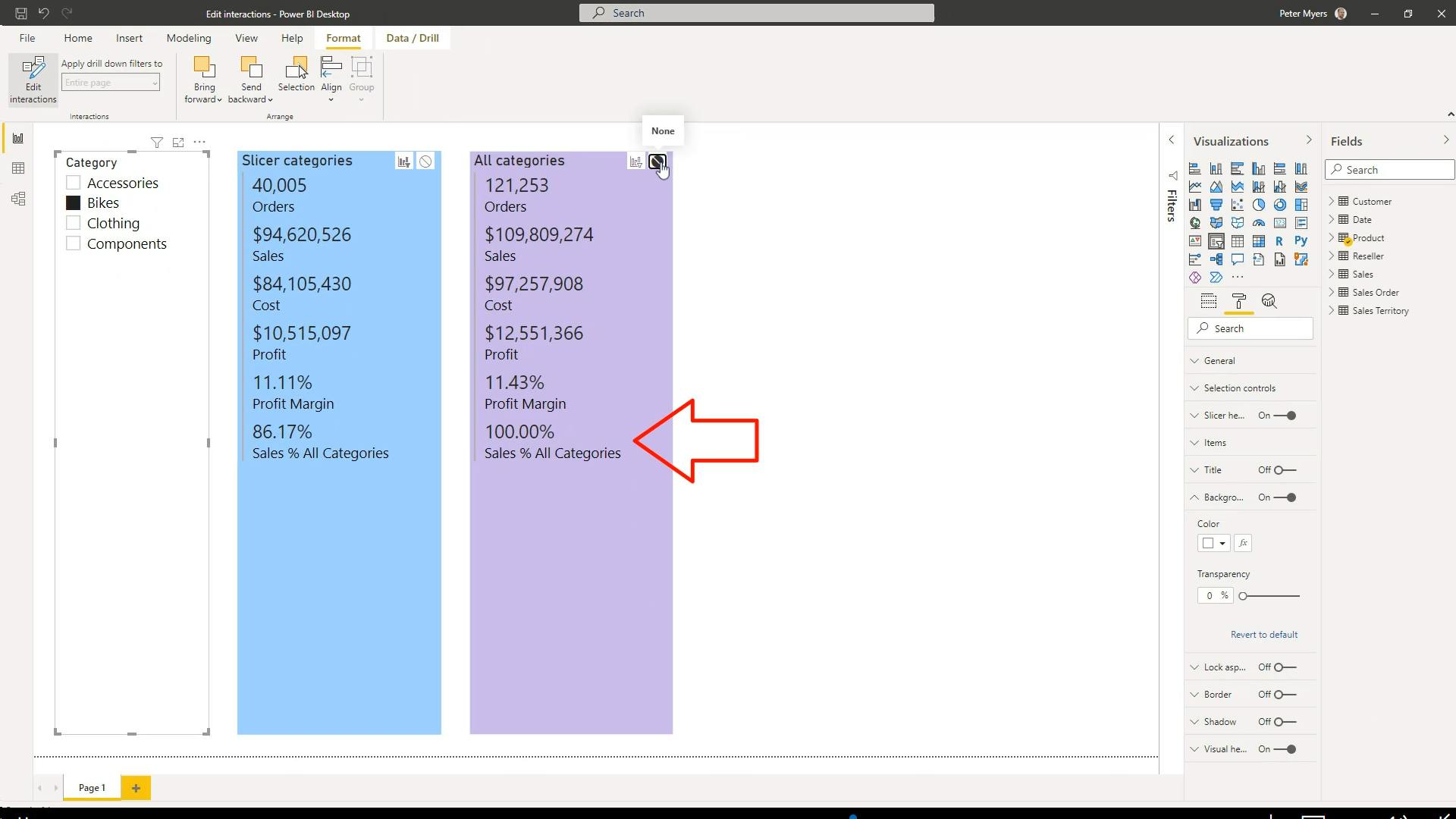

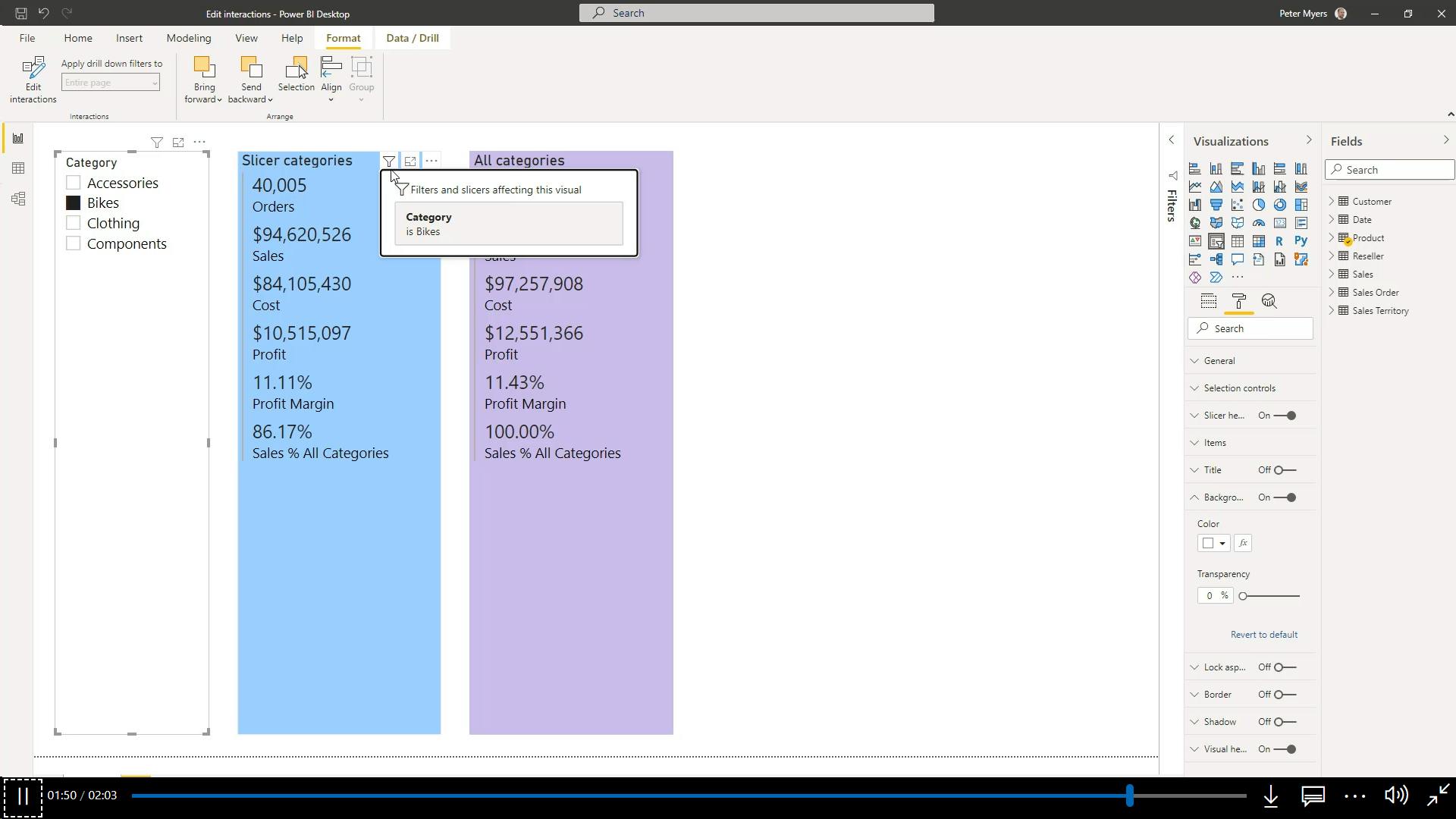

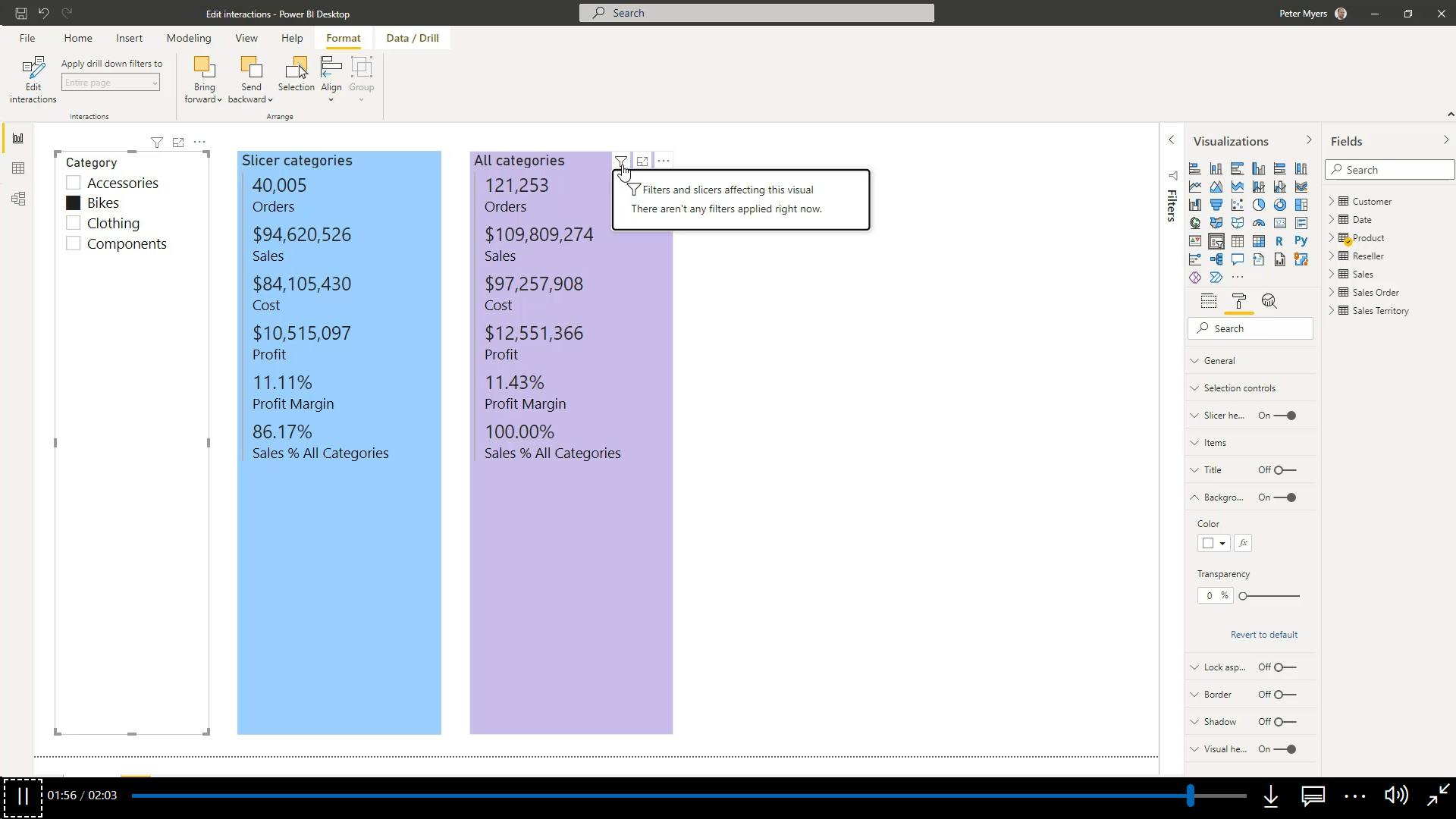

- 4. Design reports with advanced filtering techniques

- 5. Consumption-time filtering

- 6. Select report filter techniques

- 7. Case study - Configure report filters based on feedback

- 8. Check your knowledge

- 9. Summary

- Learning objectives

- XII. Module 12 Enhance Power BI report designs for the user experience

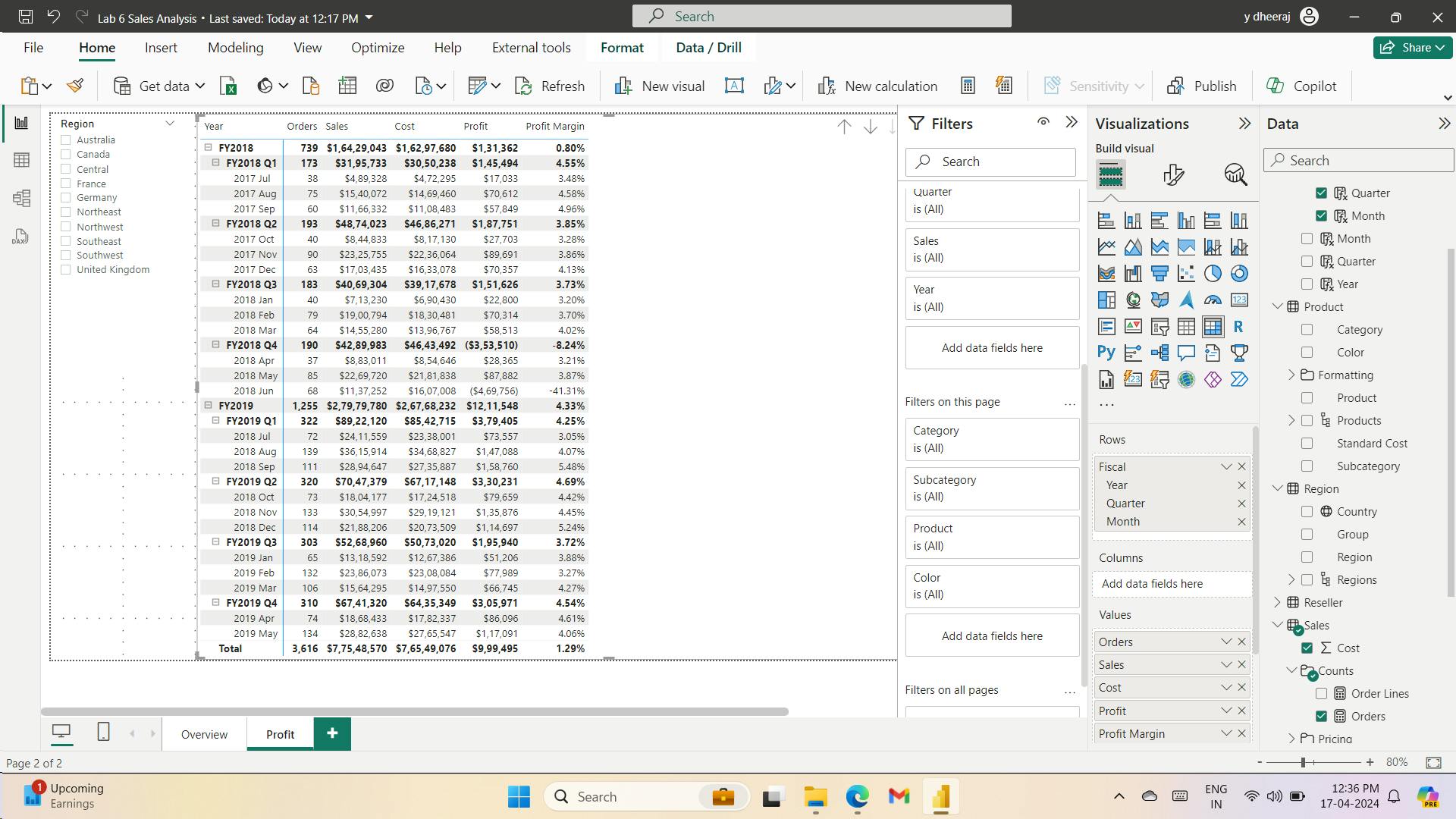

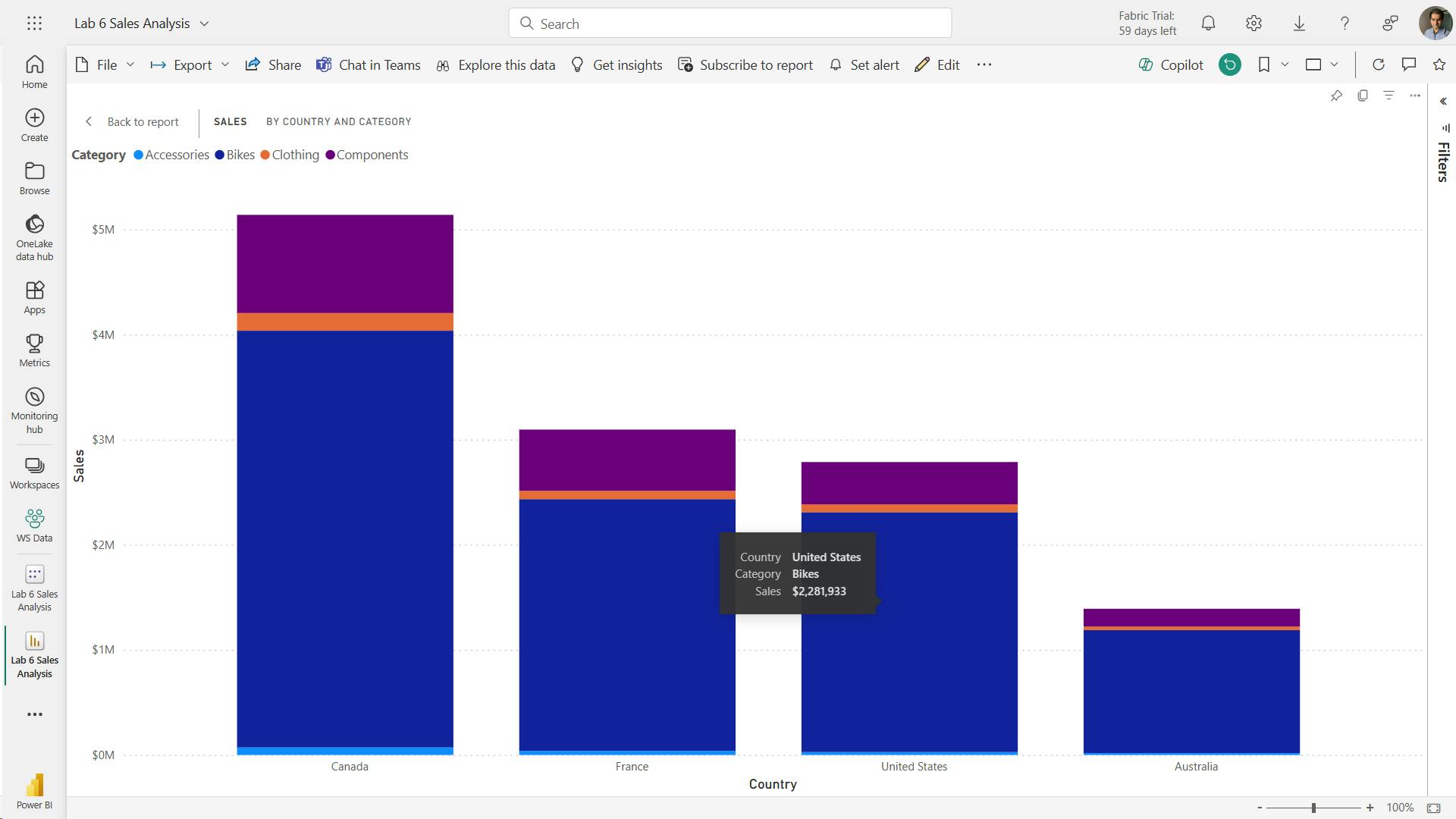

- 1. Design reports to show details

- 2. Design reports to highlight values

- 3. Design reports that behave like apps

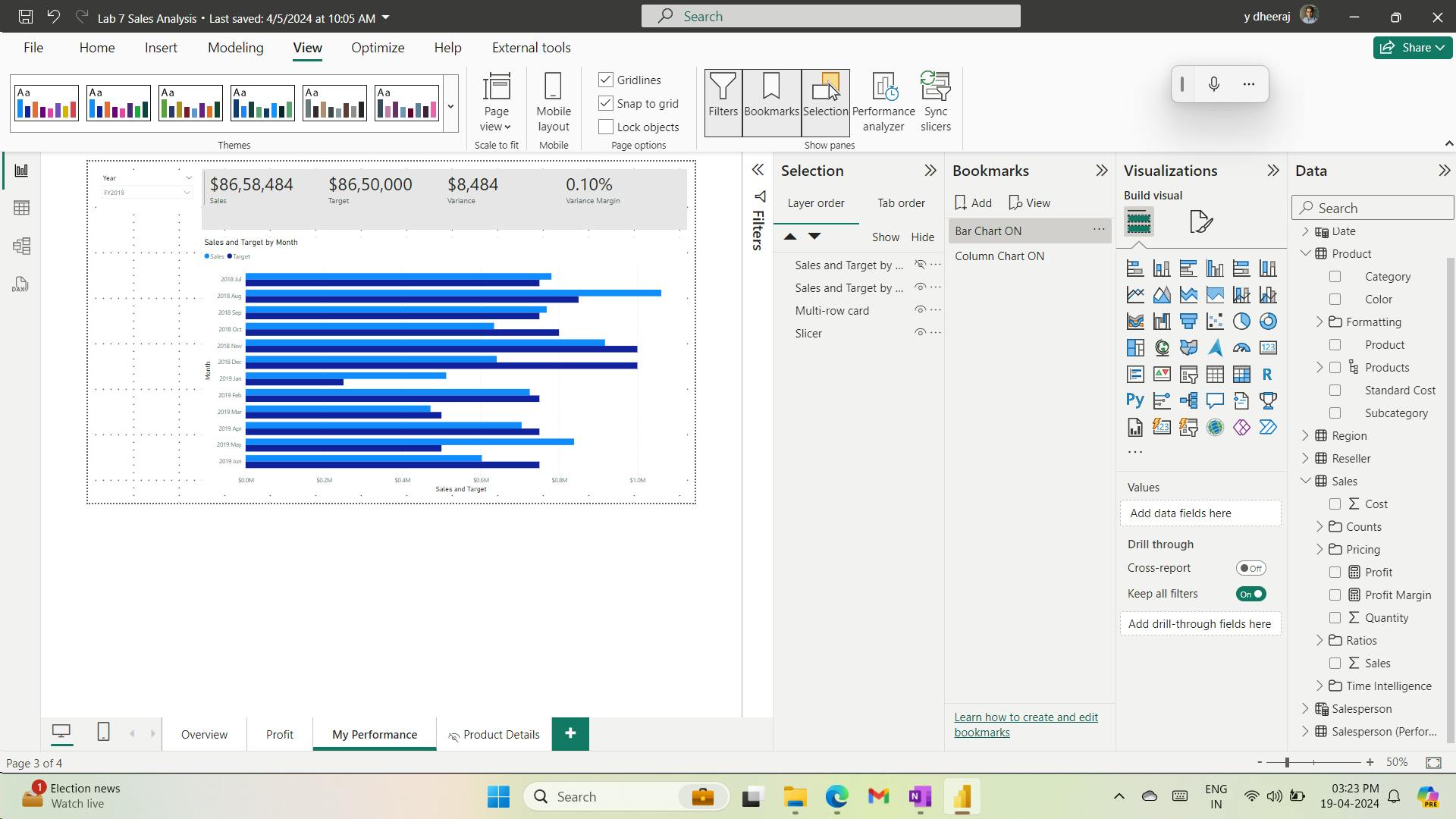

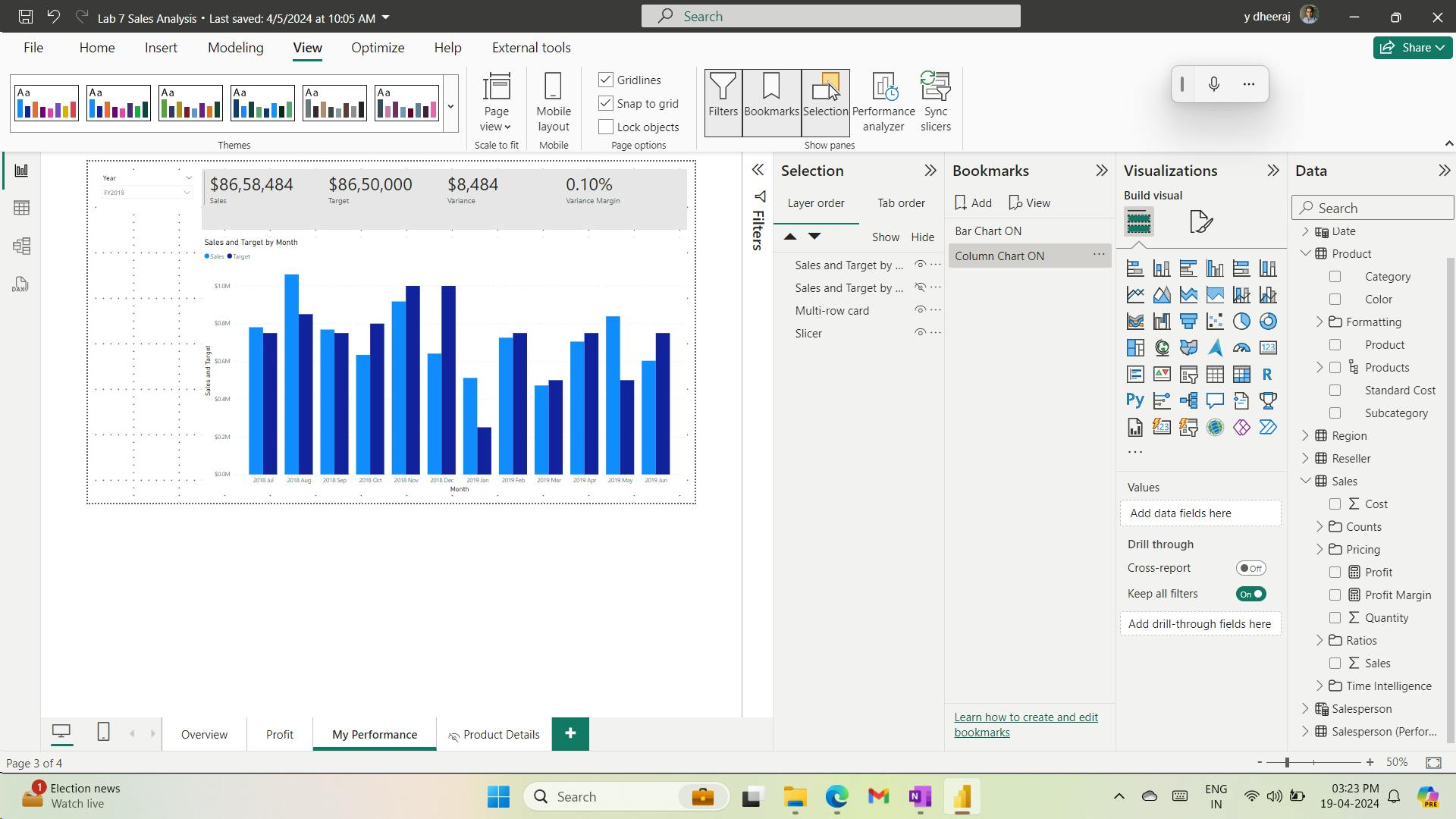

- 4. Work with bookmarks

- 5. Design reports for navigation

- 6. Work with visual headers

- 7. Design reports with built-in assistance

- 8. Tune report performance

- 9. Optimize reports for mobile use

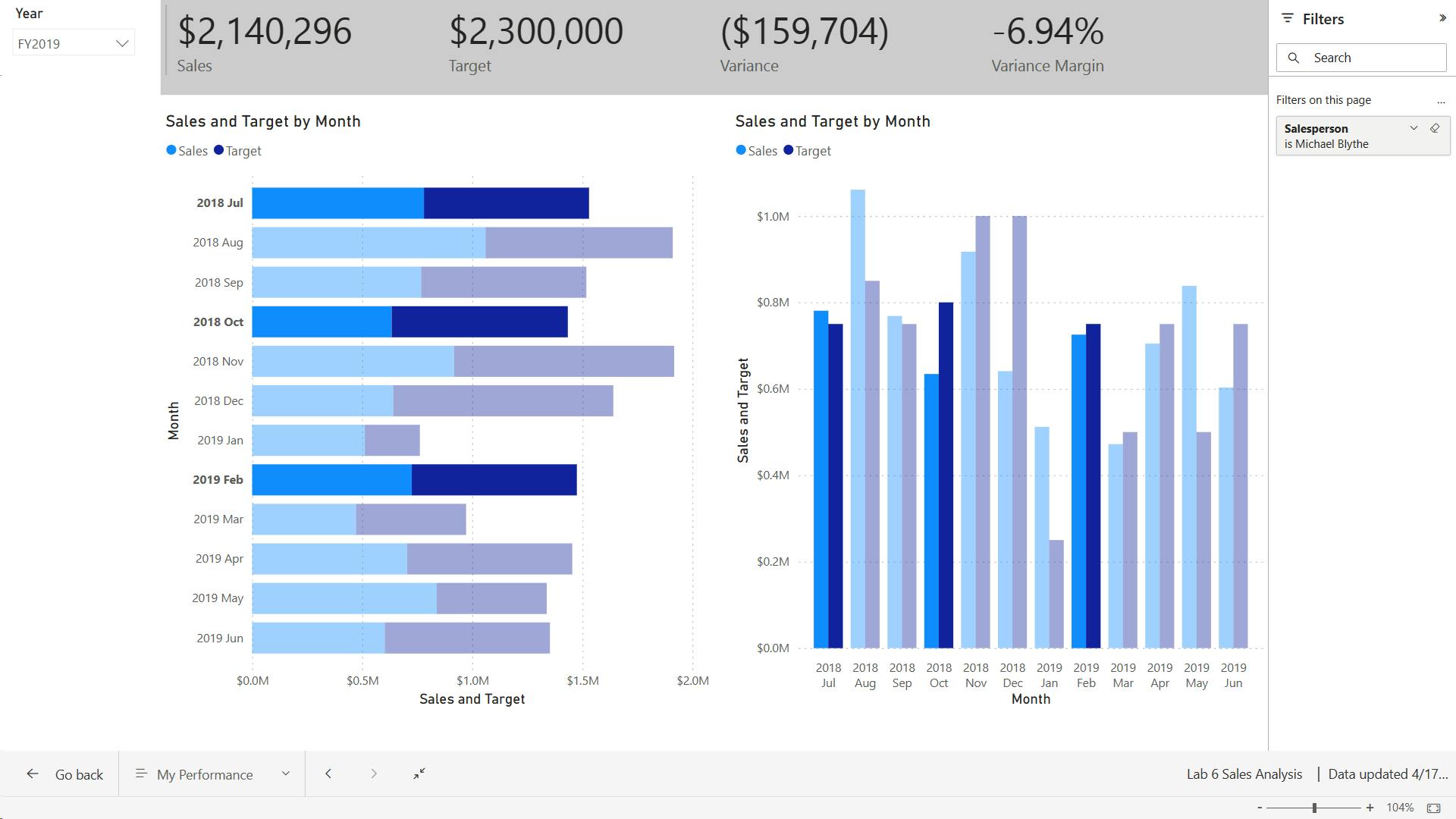

- 10. Exercise - Enhance Power BI reports

- i. Lab story

- ii. Get started – Sign in

- iii. Get started – Open report

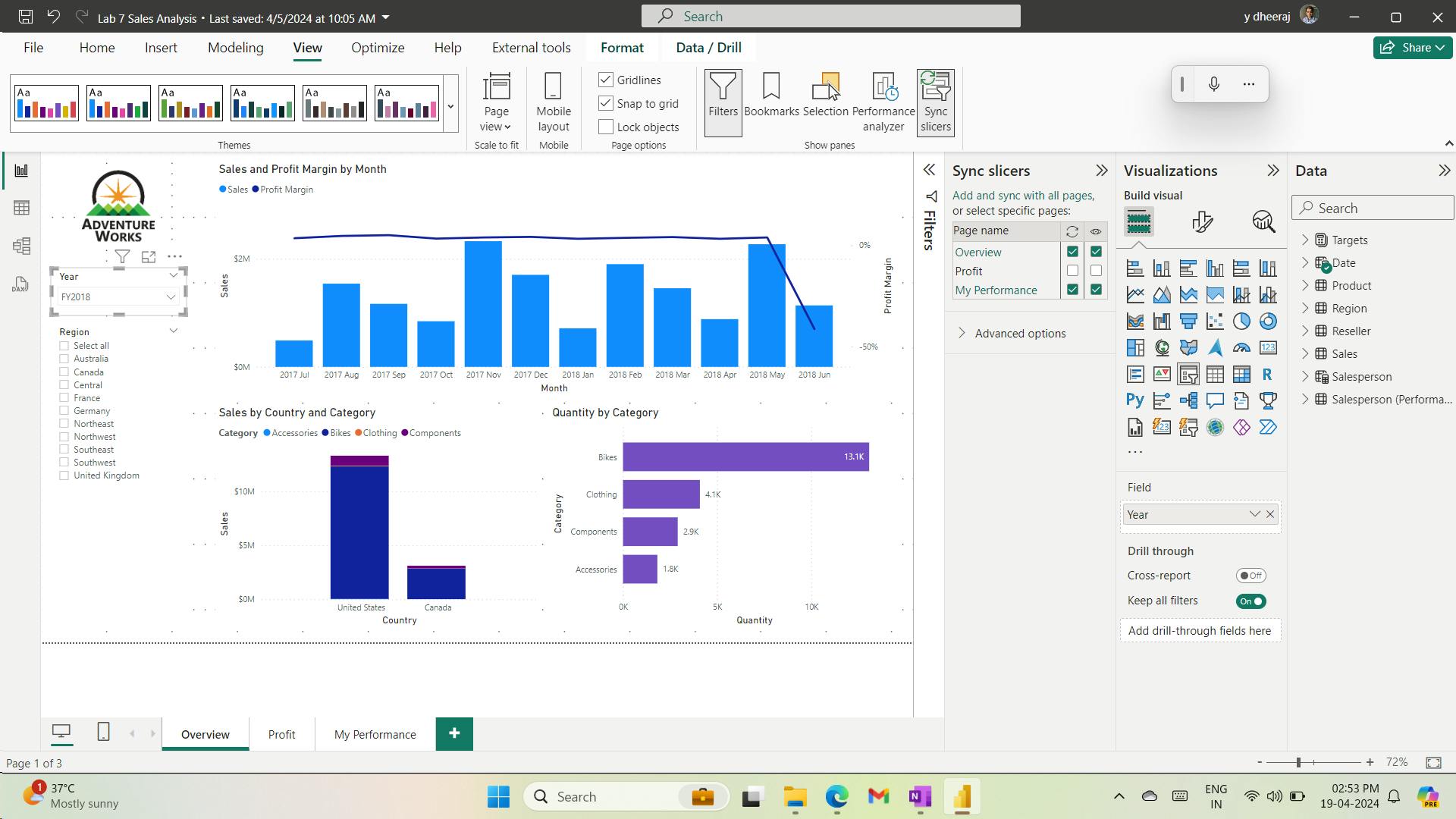

- iv. Sync slicers

- v. Configure drill through

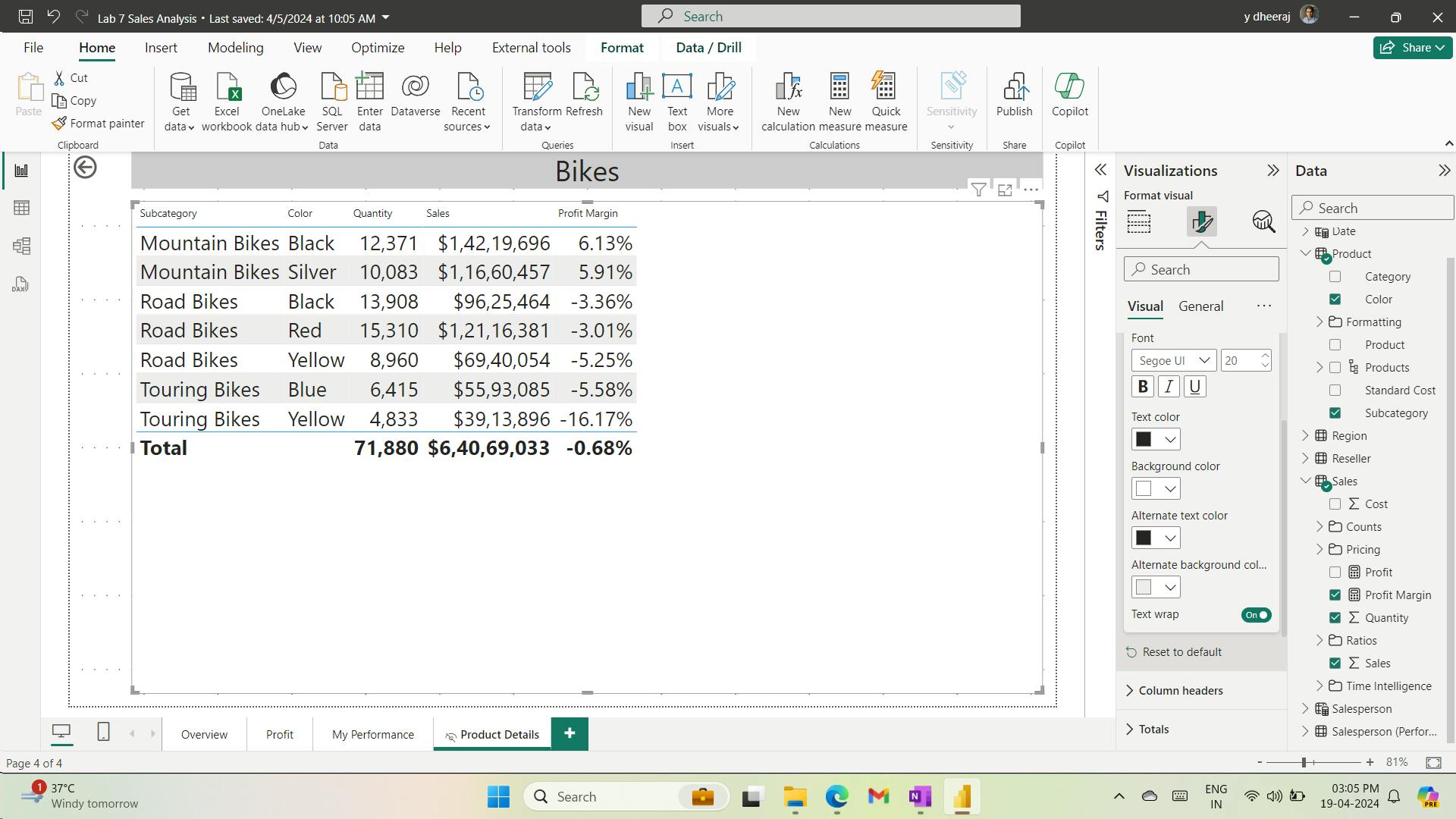

- vi. Create a drill through page

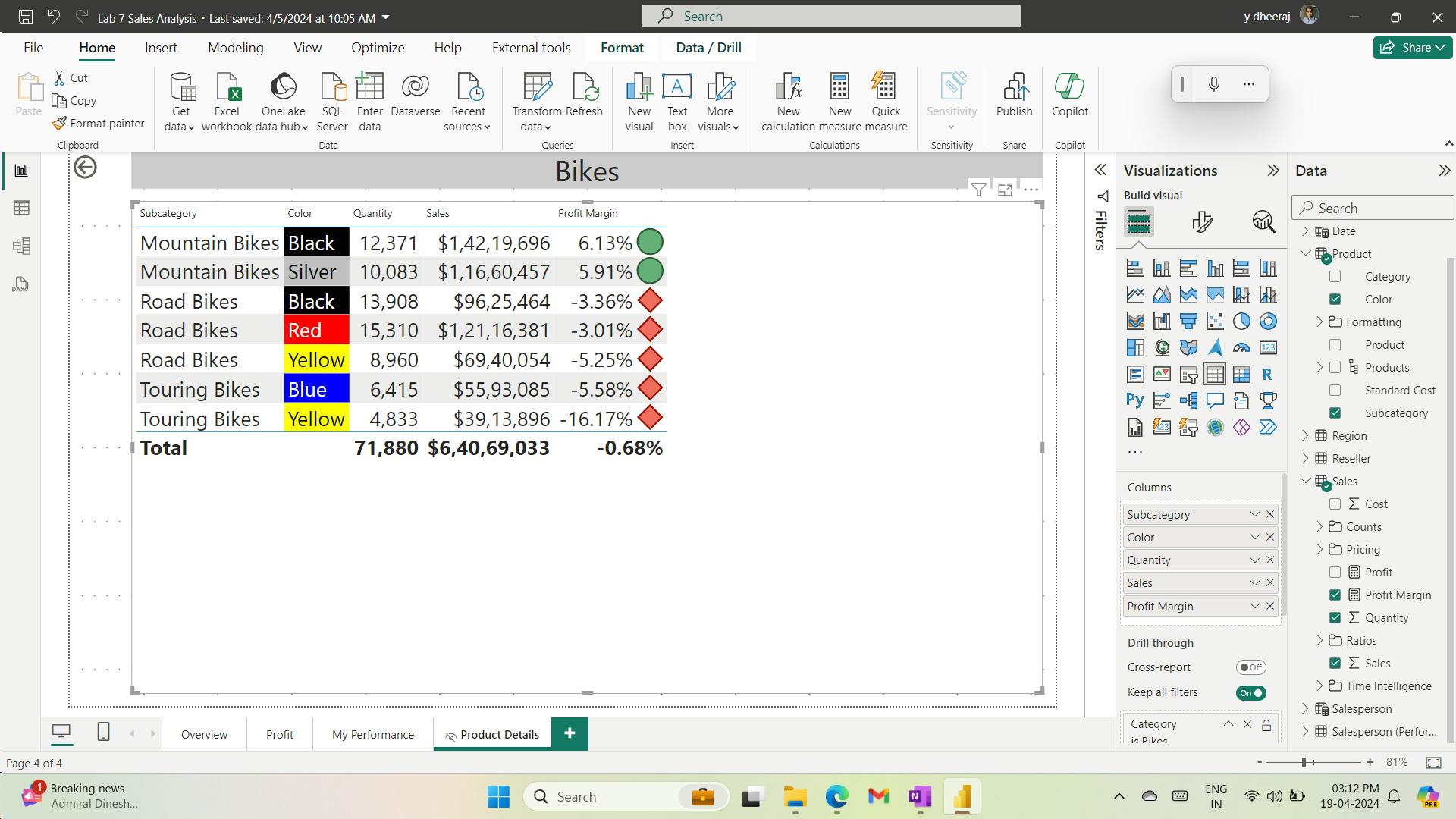

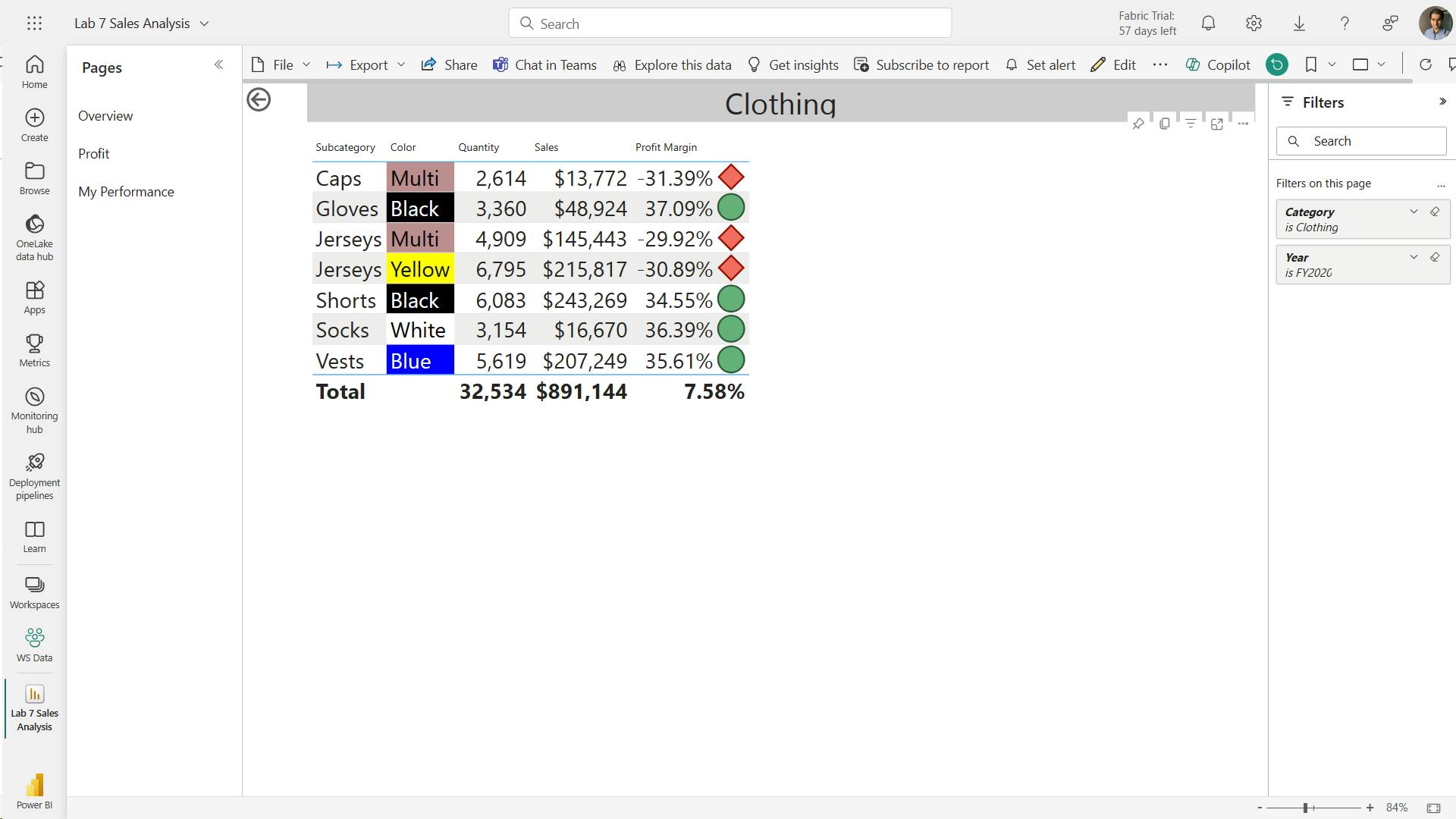

- vii. Add Conditional Formatting

- viii. Add conditional formatting

- ix. Add Bookmarks and Buttons

- x. Add bookmarks

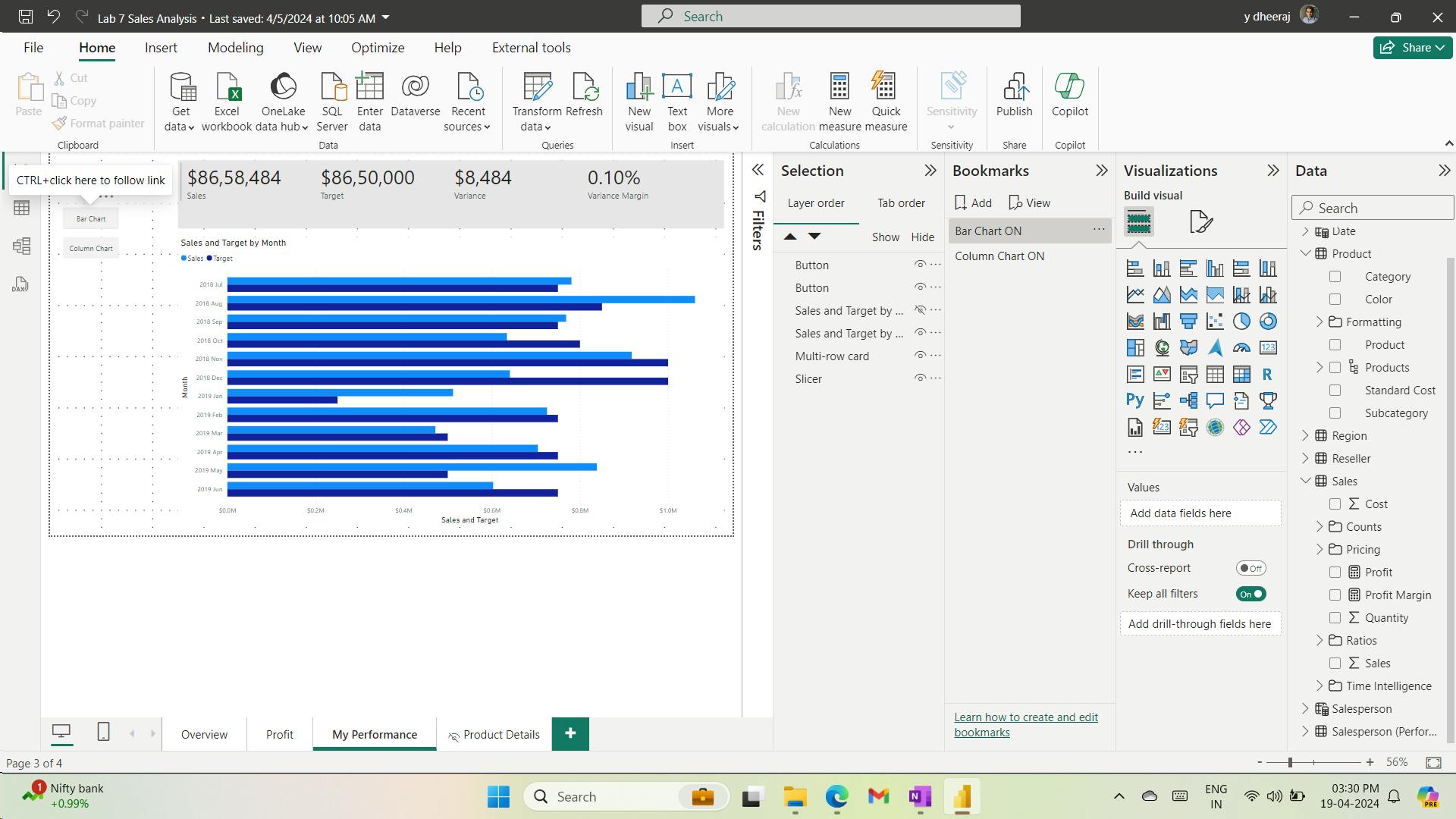

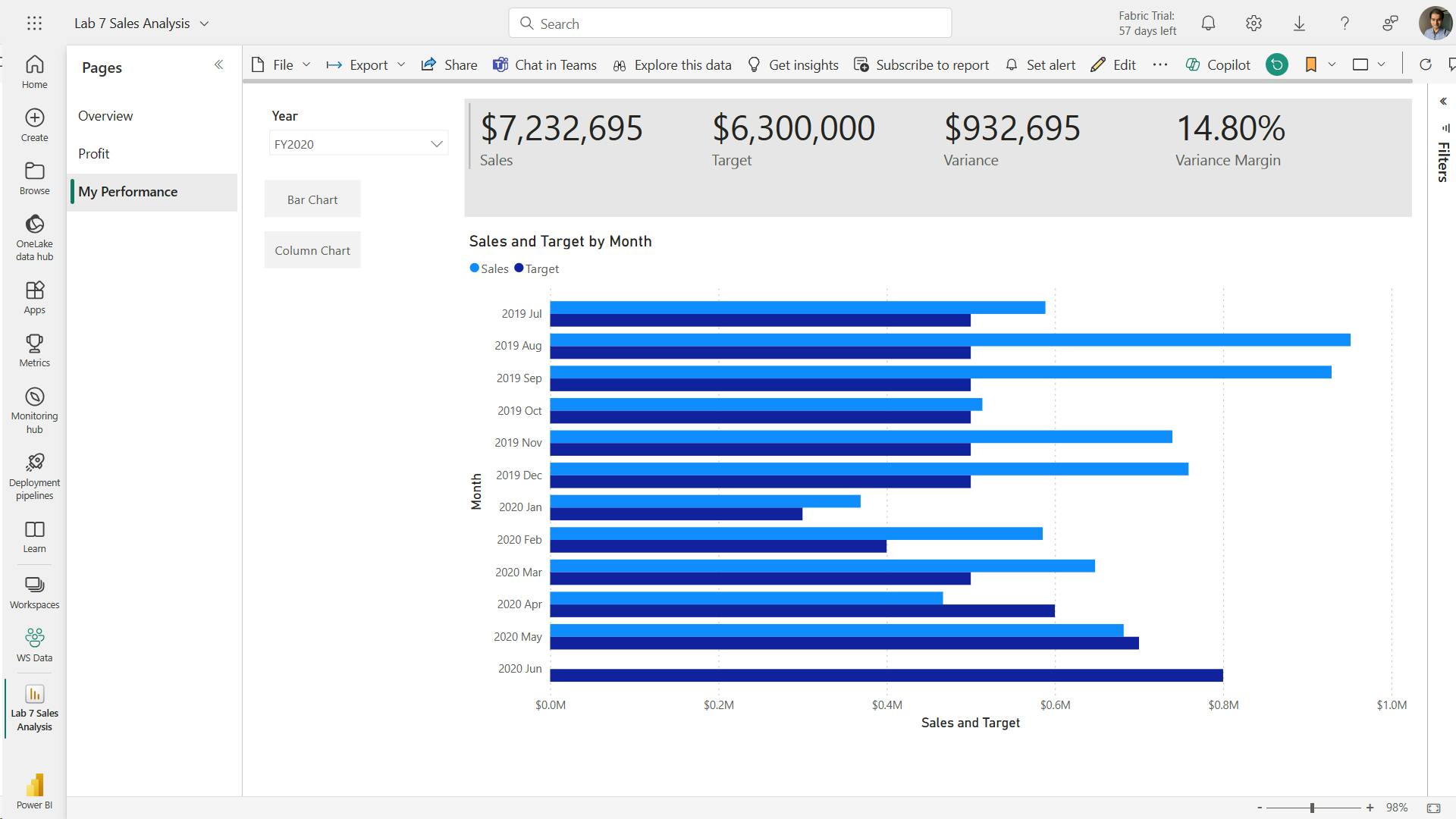

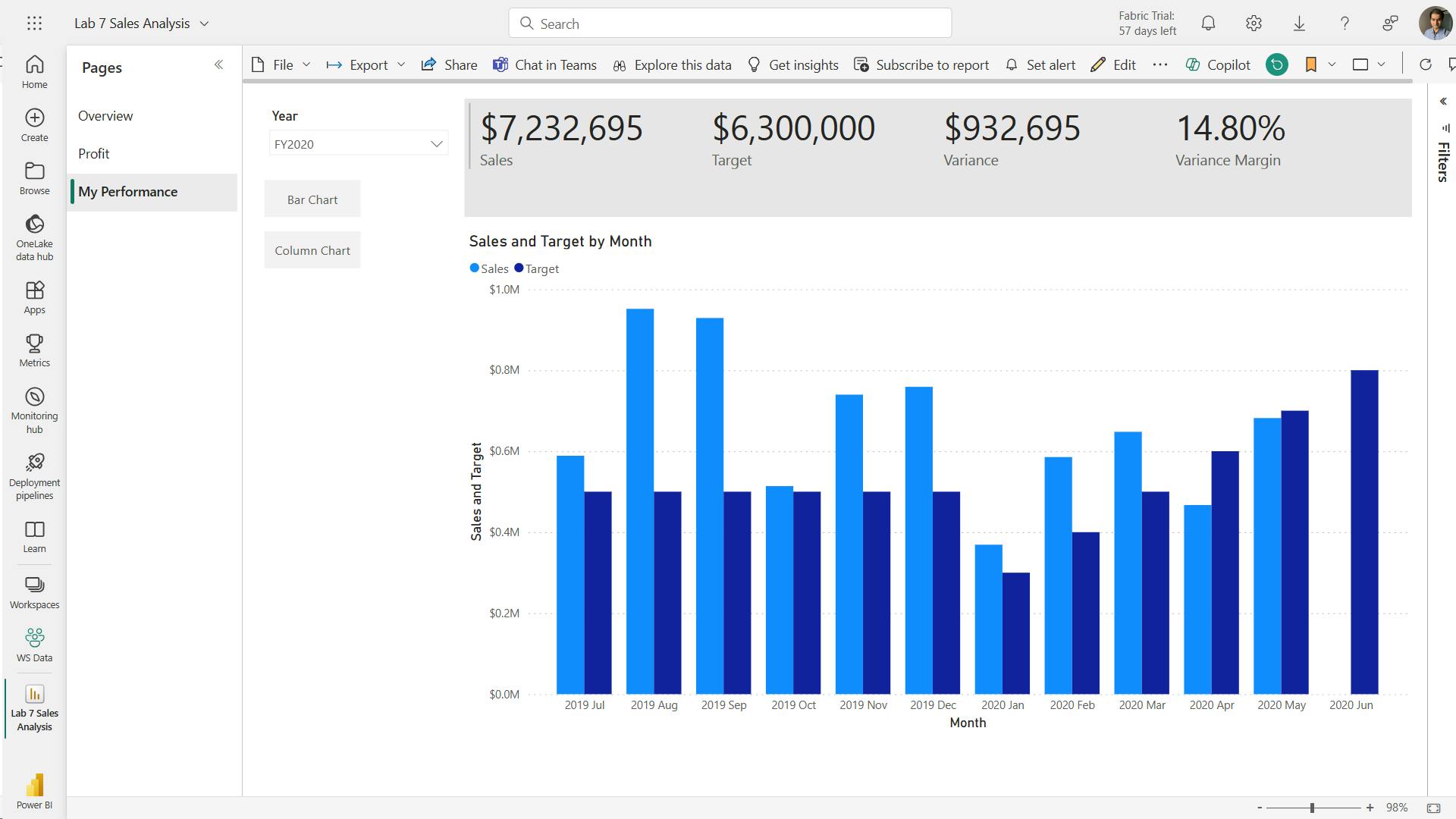

- xi. Add buttons

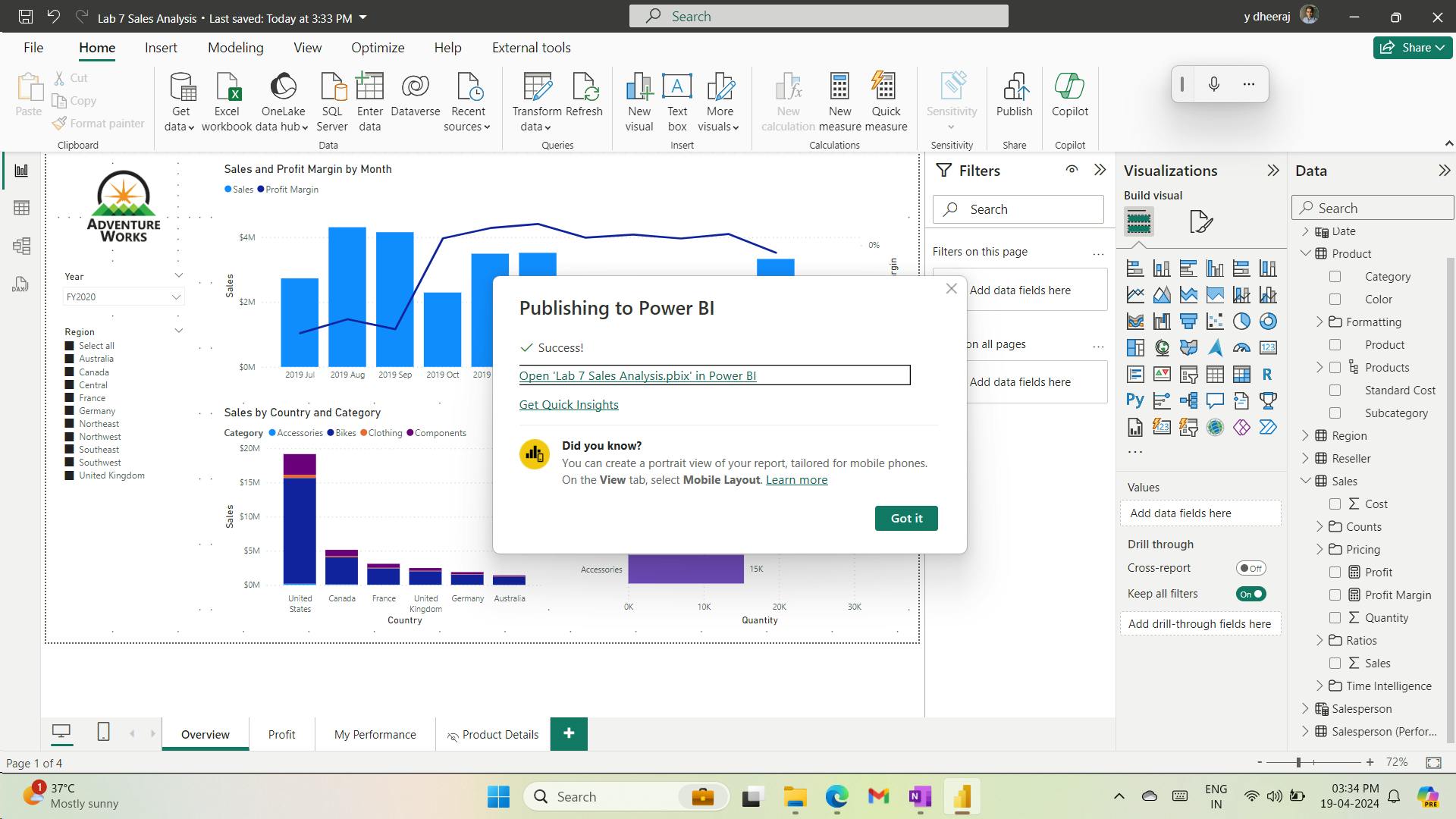

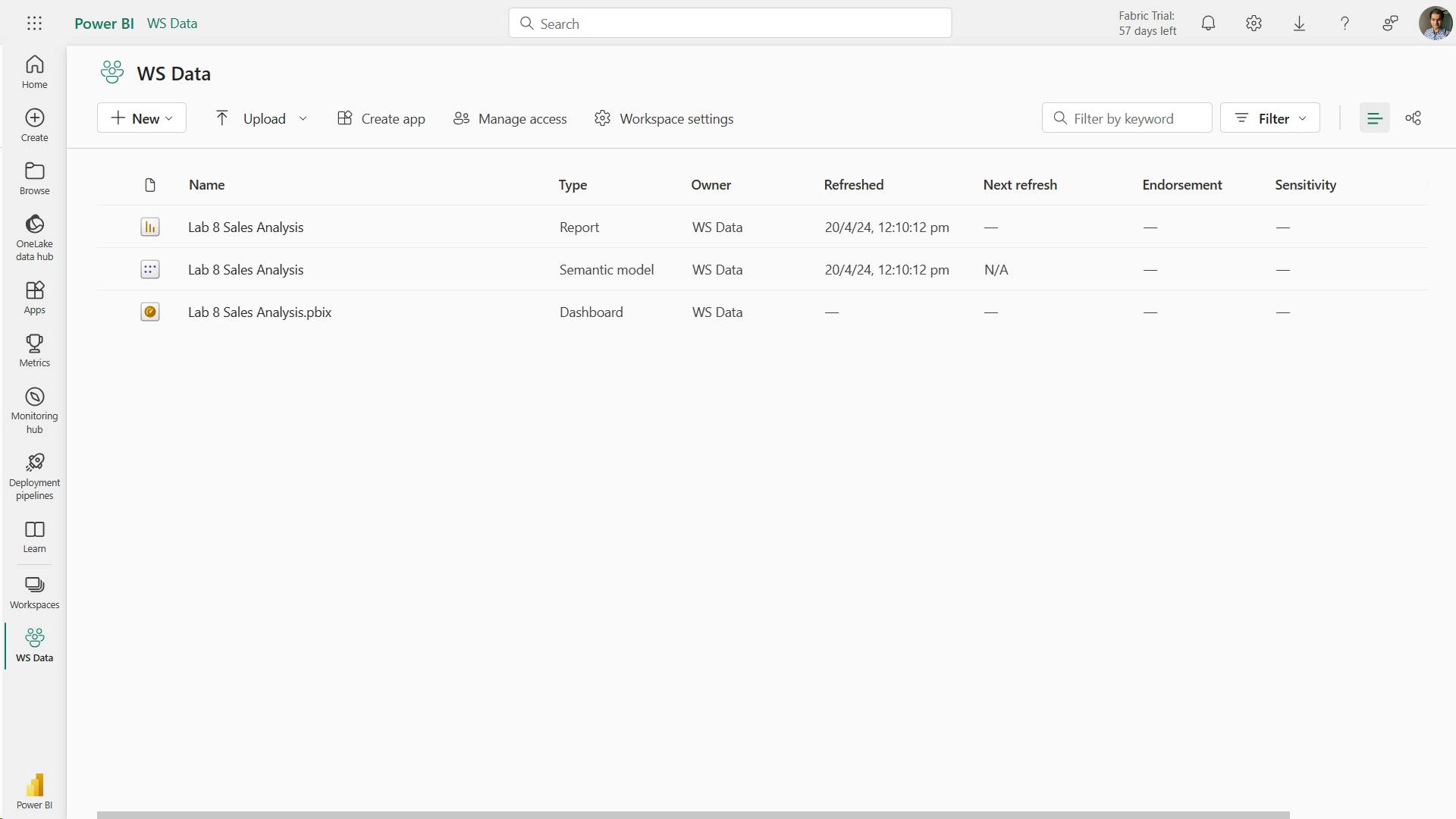

- xii. Publish the report

- xiii. Explore the report

- 11. Check your knowledge

- 12. Summary

- Learning objectives

- XIII. Module 13 Perform analytics in Power BI

- 1. Introduction to analytics

- 2. Explore statistical summary

- 3. Identify outliers with Power BI visuals

- 4. Group and bin data for analysis

- 5. Apply clustering techniques

- 6. Conduct time series analysis

- 7. Use the Analyze feature

- 8. Create what-if parameters

- 9. Use specialized visuals

- 10. Exercise - Perform Advanced Analytics with AI Visuals

- 11. Check your knowledge

- 12. Summary

- Learning objectives

- XIV. Module 14 Create and manage workspaces in Power BI

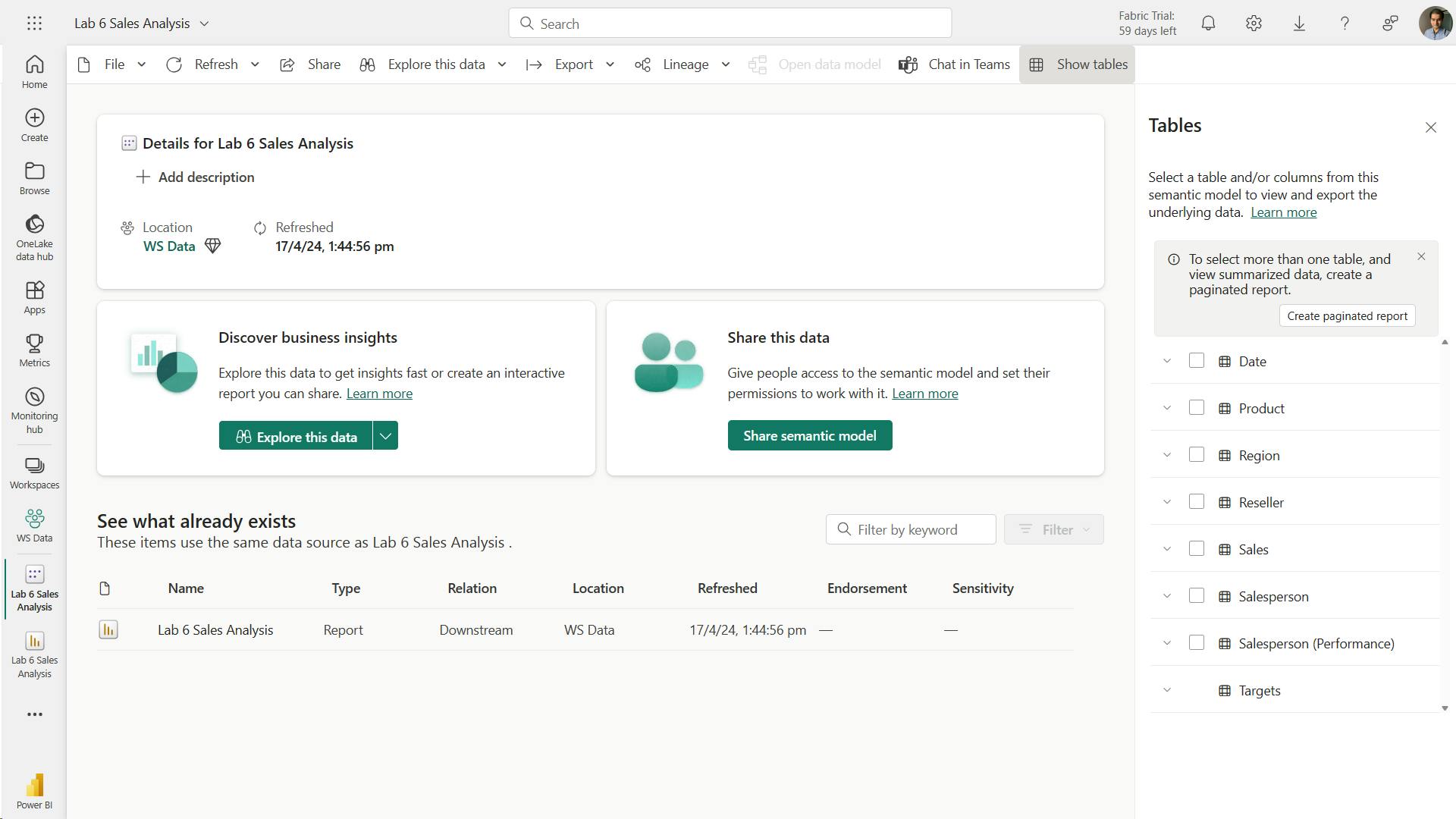

- XV. Module 15 Manage semantic models in Power BI

- 1. Introduction

- 2. Use a Power BI gateway to connect to on-premises data sources

- 3. Configure a semantic model scheduled refresh

- 4. Configure incremental refresh settings

- 5. Manage and promote semantic models

- 6. Troubleshoot service connectivity

- 7. Boost performance with query caching (Premium)

- 8. Check your knowledge

- 9. Summary

- Learning objectives

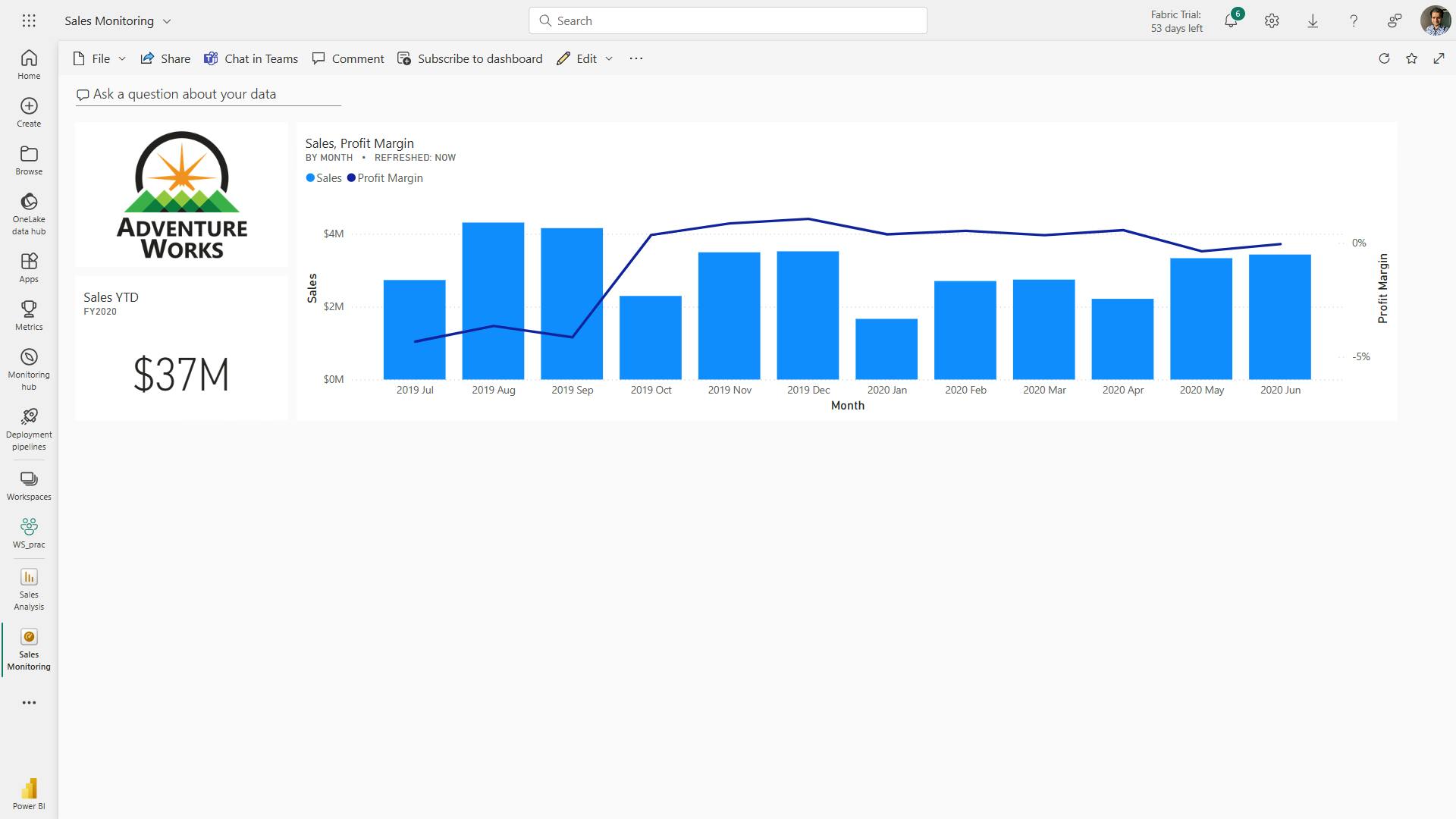

- XVI. Module 16 Create dashboards in Power BI

- 1. Introduction to dashboards

- 2. Configure data alerts

- 3. Explore data by asking questions

- 4. Review Quick insights

- 5. Add a dashboard theme

- 6. Pin a live report page to a dashboard

- 7. Configure a real-time dashboard

- 8. Set mobile view

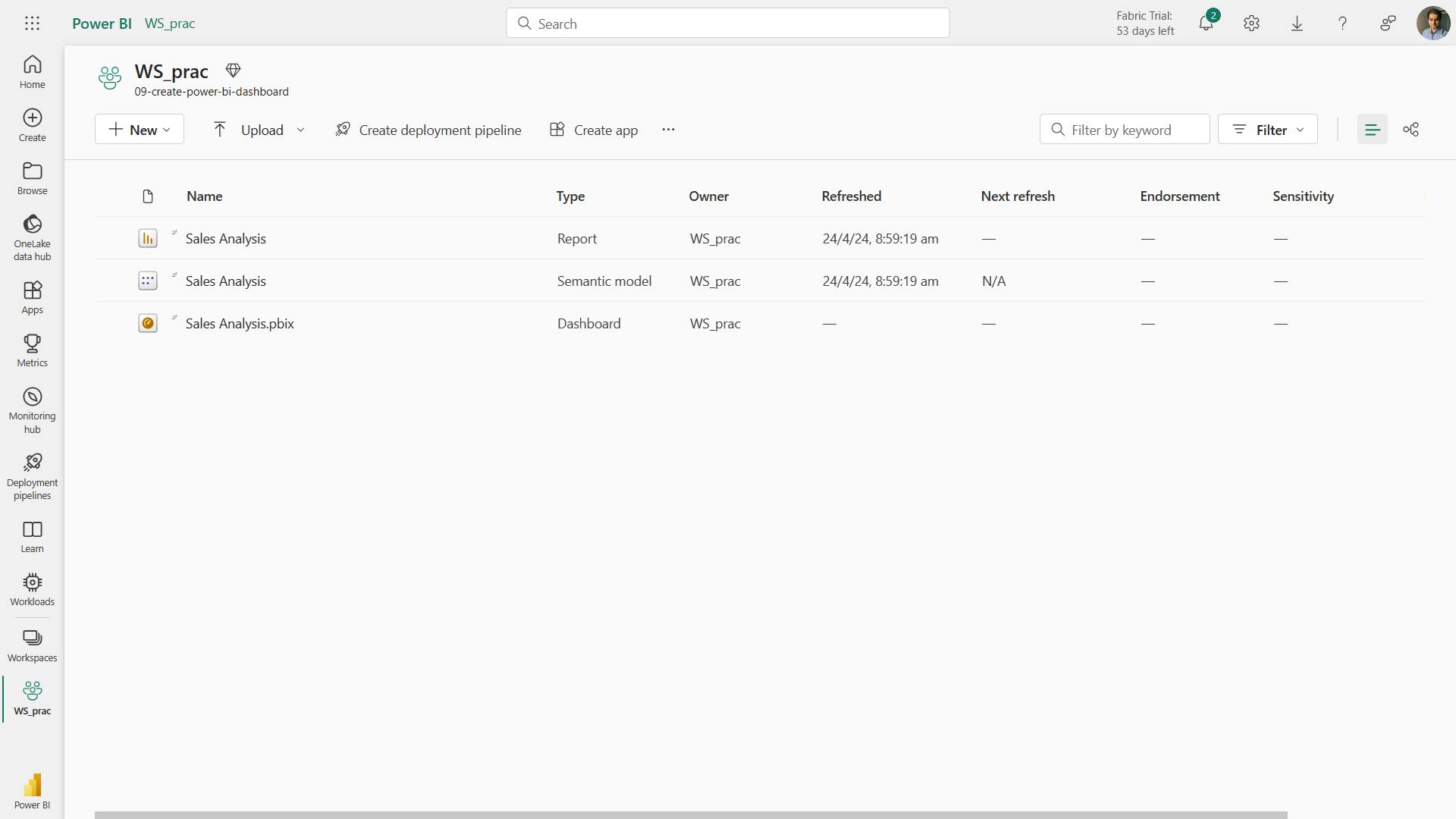

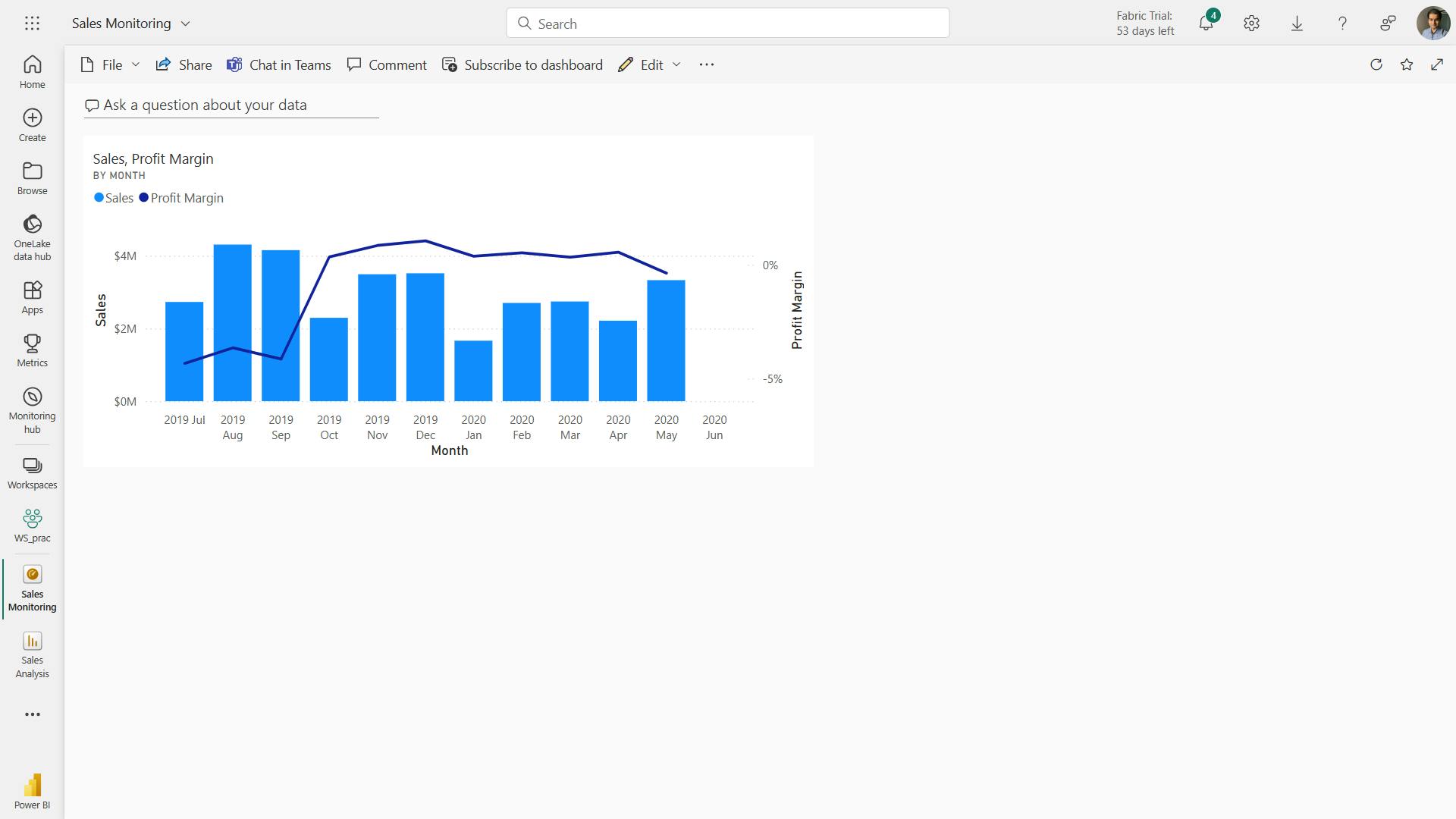

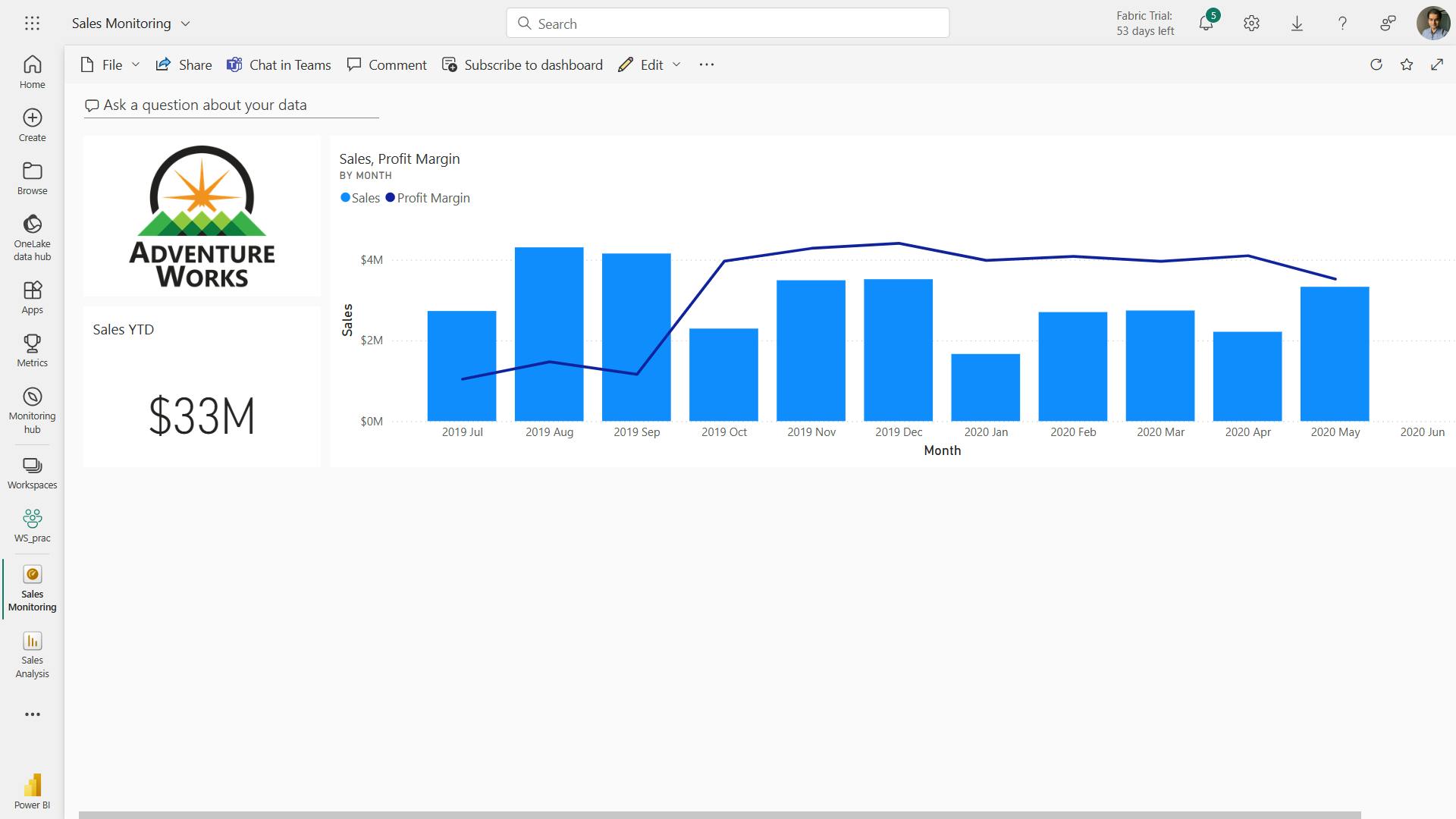

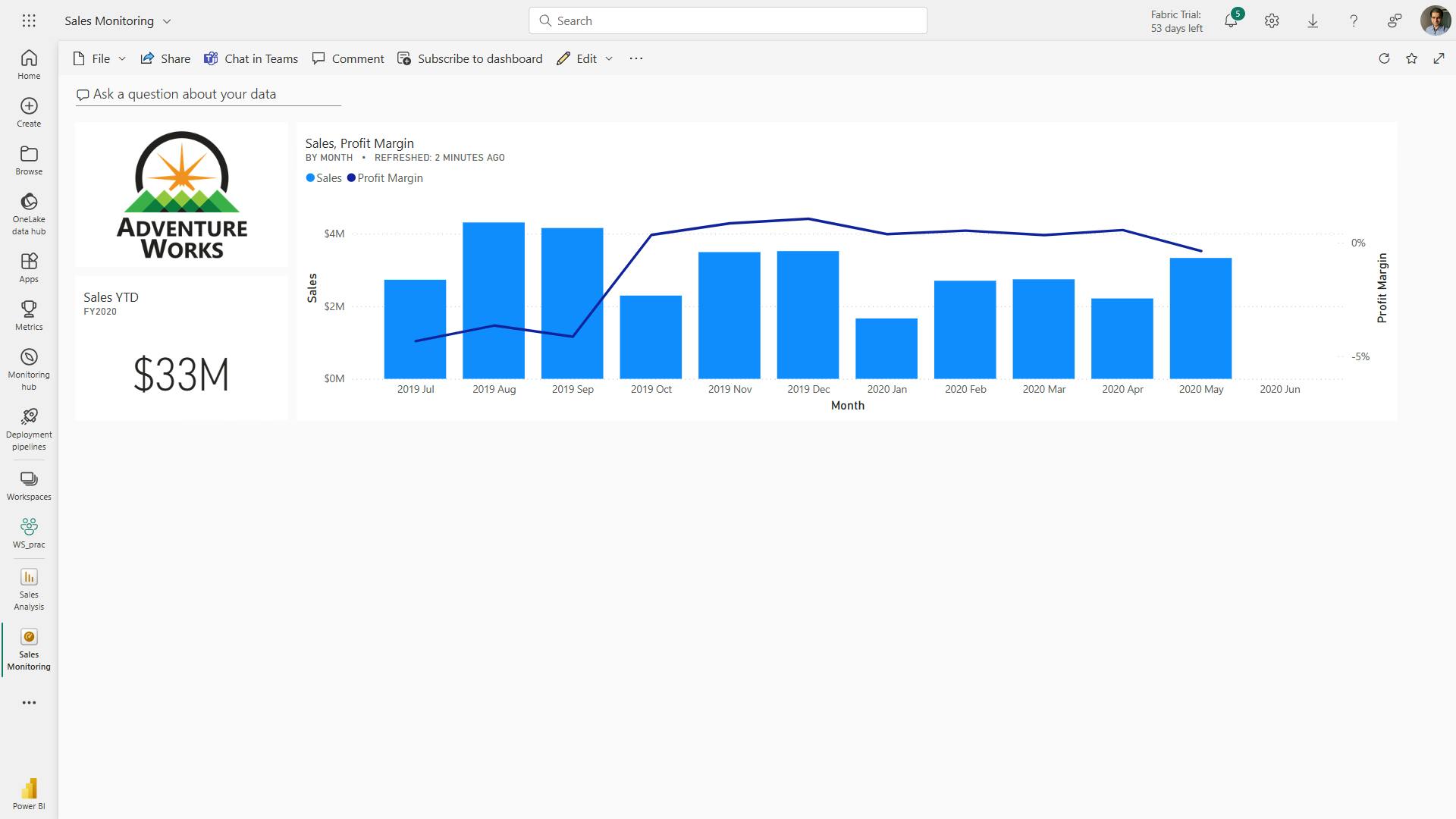

- 9. Exercise - Create a Power BI dashboard

- 10. Check your knowledge

- 11. Summary

- Learning objectives

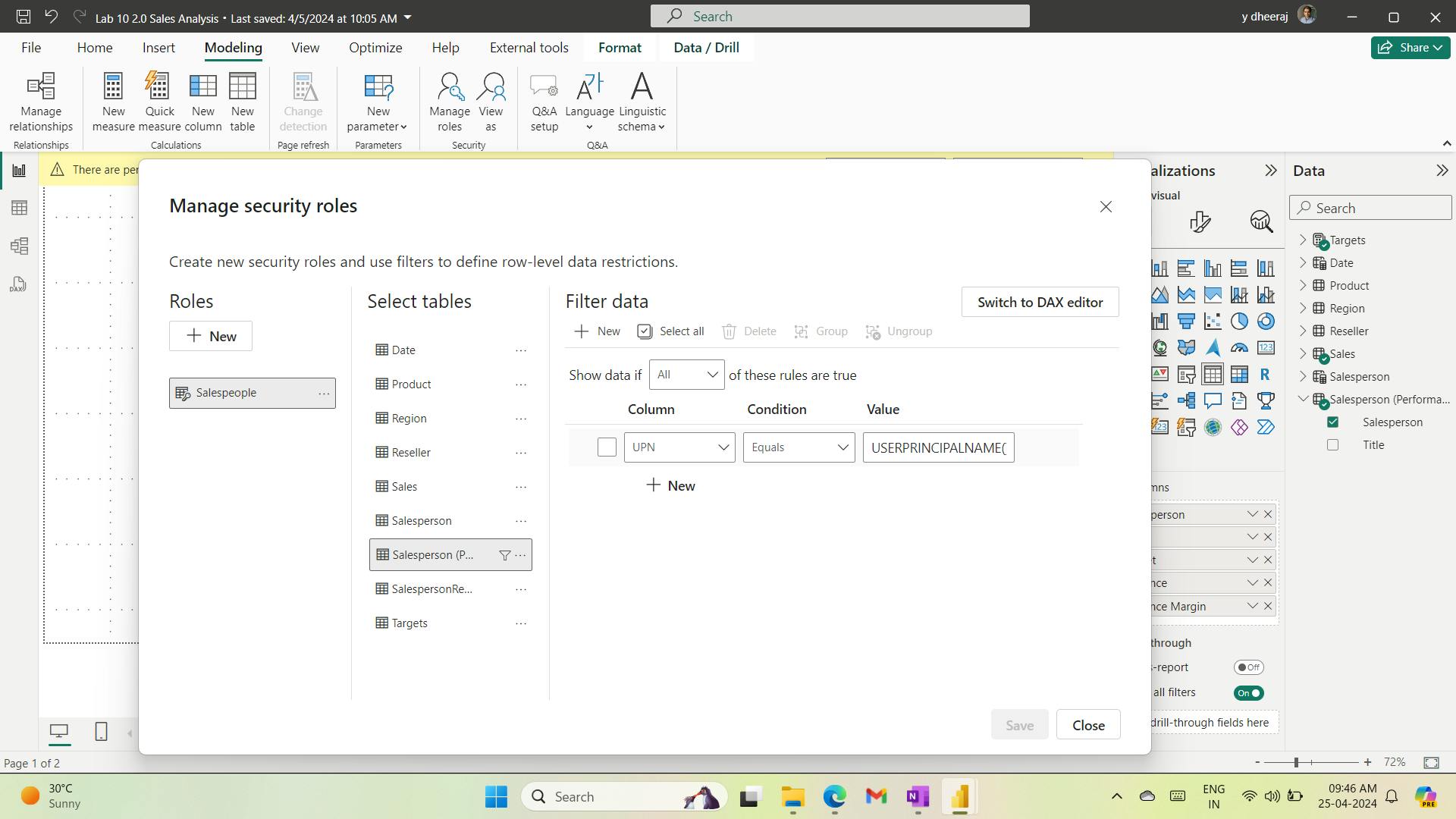

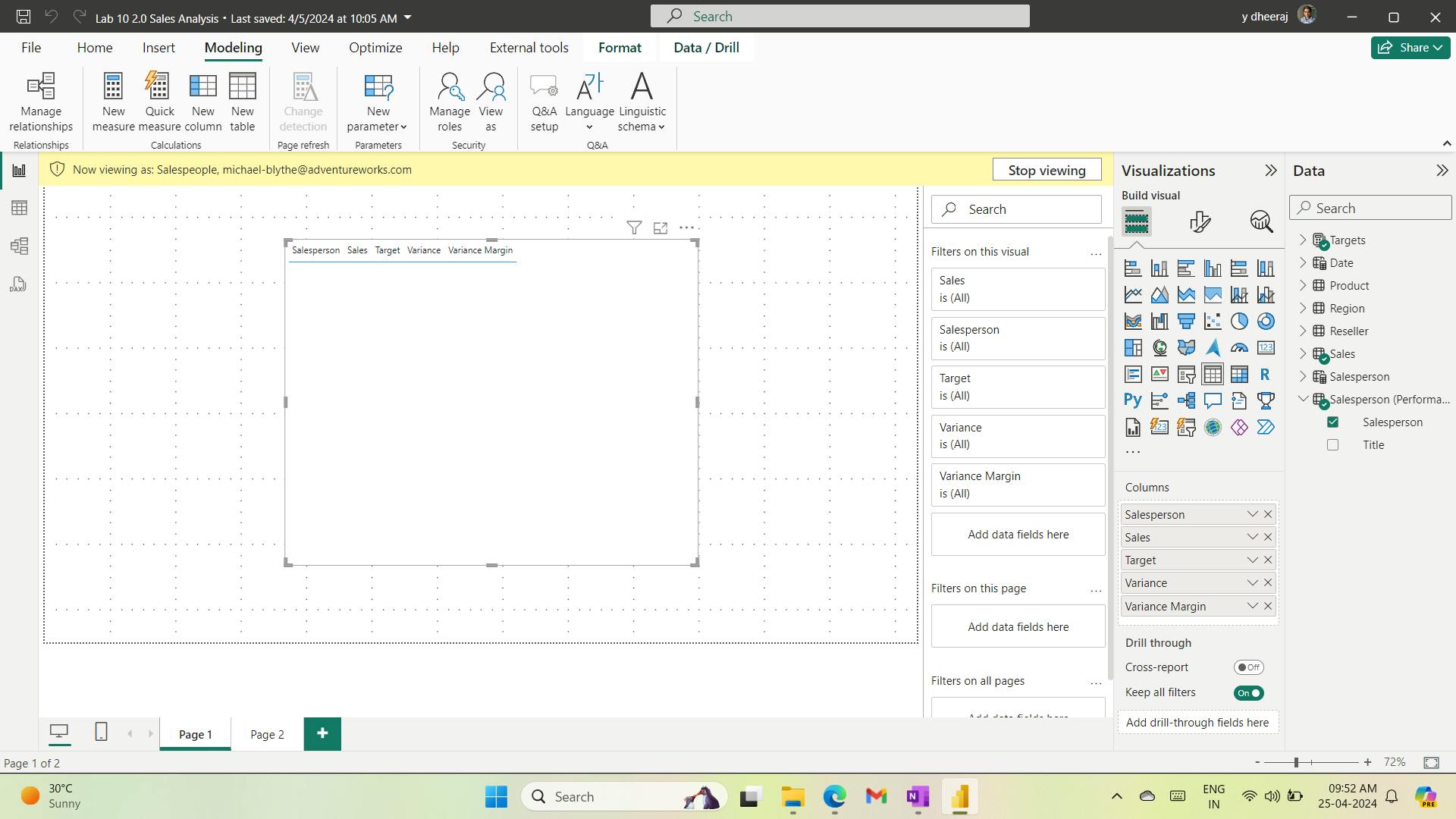

- XVI. Module 17 Implement row-level security

- Conclusion

- Source: Microsoft Power BI Data Analyst [Link]

- Author: Dheeraj.Yss

- Connect with me:

In this article, contains info about #MicrosoftPowerBIDataAnalyst and get prepared about for Exam PL-300.

Foreword:

The entire content is owned by Microsoft, and I am logging for practice and it is for educational purposes only.

All presented information is owned by Microsoft and intended solely for learning about the covered products and services in my Microsoft Learn AI Skills Challenge: Fabric Analytics Engineer Journey.

Overview:

This course covers the various methods and best practices that are in line with business and technical requirements for

modeling,

visualizing, and

analyzing data with Power BI.

The course will show how to access and process data from a range of data sources including both relational and non-relational sources.

Finally, this course will also discuss how to manage and deploy reports and dashboards for sharing and content distribution.

As a candidate for this "Microsoft Power BI Data Analyst" certification, you should deliver actionable insights by working with available data and applying domain expertise.

You should:

Provide meaningful business value through easy-to-comprehend data visualizations.

Enable others to perform self-service analytics.

Deploy and configure solutions for consumption.

As a Power BI data analyst, you work closely with business stakeholders to identify business requirements.

You collaborate with enterprise data analysts and data engineers to identify and acquire data.

You use Power BI to:

Transform the data.

Create data models.

Visualize data.

Share assets.

You should be proficient at using Power Query and writing expressions by using Data Analysis Expressions (DAX).

You know how to assess data quality. Plus, you understand data security, including row-level security and data sensitivity.

Skills measured

Prepare the data

Model the data

Visualize and analyze the data

Deploy and maintain assets

| Course | Microsoft Power BI Data Analyst |

| Module 17 |

I. Module 1 Discover data analysis

| Course | Microsoft Power BI Data Analyst |

| Module 1/17 | Discover data analysis |

Would you like to explore the journey of a data analyst and learn how a data analyst tells a story with data?

1. Introduction

As a data analyst, you are on a journey.

With data and information as the most strategic asset of a business, the underlying challenge that organizations have today is understanding and using their data to positively effect change within the business.

Businesses continue to struggle to use their data in a meaningful and productive way, which impacts their ability to act.

You need to be able to look at the data and facilitate trusted business decisions. Then, you need the ability to look at metrics and clearly understand the meaning behind those metrics.

Data analysis exists to help overcome these challenges and pain points, ultimately assisting businesses in finding insights and uncovering hidden value in troves of data through storytelling.

As you read on, you will learn how to use and apply analytical skills to go beyond a single report and help impact and influence your organization by telling stories with data and driving that data culture.

2. Overview of data analysis

Data analysis is the process of identifying, cleaning, transforming, and modeling data to discover meaningful and useful information.

The data is then crafted into a story through reports for analysis to support the critical decision-making process.

While the process of data analysis focuses on the tasks of cleaning, modeling, and visualizing data, the concept of data analysis and its importance to business should not be understated. To analyze data, core components of analytics are divided into the following categories:

Descriptive - key performance indicators (KPIs) - return on investment (ROI), organization's sales and financial data.

Diagnostic - questions about why events happened

Predictive - questions about what will happen in the future

Prescriptive - questions about which actions should be taken to achieve a goal or target.

Cognitive - a self-learning feedback loop.

As the amount of data grows, so does the need for data analysts. A data analyst knows how to organize information and distill it into something relevant and comprehensible. A data analyst knows how to gather the right data and what to do with it, in other words, making sense of the data in your data overload.

3. Roles in data

Business analyst

Data analyst

Data engineer

Data scientist

Database administrator

4. Tasks of a data analyst

Data preparation - profiling, cleaning, and transforming your data to get it ready to model and visualize.

Data modeling - determining how your tables are related to each other.

visualization - report should tell a compelling story about that data.

Analyze - find insights, identify patterns and trends, predict outcomes, and then communicate those insights.

Manage - management of Power BI

5. Answers:

A data analyst uses appropriate visuals to help business decision makers gain deep and meaningful insights from data.

An optimized and tuned semantic model performs better and provides a better data analysis experience.

A key benefit of data analysis is the ability to gain valuable insights from a business's data assets to make timely and optimal business decisions.

Learning objectives

In this module, you'll:

Learn about the roles in data.

Learn about the tasks of a data analyst.

II. Module 2 Get started building with Power BI

| Course | Microsoft Power BI Data Analyst |

| Module 2/17 | Get started building with Power BI |

1. Introduction

Microsoft Power BI is a complete reporting solution that offers data preparation, data visualization, distribution, and management through development tools and an online platform.

Use Power BI to create visually stunning, interactive reports requiring complex data modeling & to serve as the analytics and decision engine.

2. Use Power BI

Power BI Desktop - development tool available to data analysts and other report creators.

Power BI service - allows you to organize, manage, and distribute your reports and other Power BI items, create high-level dashboards that drill down to reports.

The flow of Power BI is:

Connect to data with Power BI Desktop.

Transform in Power Query and model data with Power BI Desktop.

Create visualizations and reports with Power BI Desktop.

Publish report to Power BI service.

Distribute and manage reports in the Power BI service.

3. Building blocks of Power BI

The building blocks of Power BI are semantic models and visualizations.

semantic model - consists of all connected data, transformations, relationships, and calculations.

4. Tour and use the Power BI service

Workspaces are the foundation of the Power BI service.

Distribute content - Apps are the ideal sharing solution within any organization.

Refresh a semantic model - configure scheduled refreshes of your semantic models in the Power BI service.

5. Answers:

The Power BI service lets you view and interact with reports and dashboards, but doesn't let you shape data.

Without a semantic model, you can't create visualizations, and reports are made up of visualizations.

An app is a collection of ready-made visuals, pre-arranged in dashboards and reports. You can get apps that connect to many online services from the AppSource.

6. Summary

Microsoft Power BI offers a complete data analytics solution that includes data preparation, visualization, and distribution.

Semantic models and visualizations are the building blocks of Power BI.

The flow and components of Power BI include:

Power BI Desktop for creating semantic models and reports with visualizations.

Power BI service for creating dashboards from published reports and distributing content with apps.

Power BI Mobile for on-the-go access to the Power BI service content, designed for mobile.

By using Power BI, you can make data-informed decisions across your organization.

Learning objectives

In this module, you'll learn:

How Power BI services and applications work together.

Explore how Power BI can make your business more efficient.

How to create compelling visuals and reports.

III. Module 3 Get data in Power BI

| Course | Microsoft Power BI Data Analyst |

| Module 3/17 | Get data in Power BI |

You'll learn how to retrieve data from a variety of data sources, including Microsoft Excel, relational databases, and NoSQL data stores.

You'll also learn how to improve performance while retrieving data.

1. Introduction

create a suite of reports that are dependent on data in several different locations.

This module will focus on the first step of getting the data from the different data sources and importing it into Power BI by using Power Query.

2. Get data from files

A flat file is a type of file that has only one data table and every row of data is in the same structure.

The file doesn't contain hierarchies. Likely, you're familiar with the most common types of flat files, which are comma-separated values (.csv) files, delimited text (.txt) files, and fixed width files.

Another type of file would be the output files from different applications, like Microsoft Excel workbooks (.xlsx).

Change the source file - Data source settings.

3. Get data from relational data sources

SQL Server.

Change data source settings - Power BI /Home tab, select Transform data, and then select the Data source settings option.

Write an SQL statement - SQL is beneficial because it allows you to load only the required set of data by specifying exact columns and rows in your SQL statement and then importing them into your semantic model. You can also join different tables, run specific calculations, create logical statements, and filter data in your SQL query.

It's a best practice to avoid doing this directly in Power BI. Instead, consider writing a query like this in a view.

A view is an object in a relational database, similar to a table. Views have rows and columns, and can contain almost every operator in the SQL language.

If Power BI uses a view, when it retrieves data, it participates in query folding, a feature of Power Query. Query folding will be explained later, but in short, Power Query will optimize data retrieval according to how the data is being used later.

4. Create dynamic reports with parameters

Creating dynamic reports allows you to give users more power over the data that is displayed in your reports; they can change the data source and filter the data by themselves.

Power Query Editor / Home tab, select Manage parameters > New parameter.

5. Create dynamic reports for multiple values

To accommodate multiple values at a time, you first need to create a Microsoft Excel worksheet that has a table consisting of one column that contains the list of values.

6. Get data from a NoSQL database

A NoSQL database (also referred to as non-SQL, not only SQL or non-relational) is a flexible type of database that doesn't use tables to store data.

If you're working with data stored in JSON format, it's often necessary to extract and normalize the data first. This is because JSON data is often stored in a nested or unstructured format, which makes it difficult to analyze or report on directly.

7. Get data from online services

To support their daily operations, organizations frequently use a range of software applications, such as SharePoint, OneDrive, Dynamics 365, Google Analytics and so on. These applications produce their own data. Power BI can combine the data from multiple applications to produce more meaningful insights and reports.

8. Select a storage mode

business requirements are satisfied when you're importing data into Power BI.

However, sometimes there may be security requirements around your data that make it impossible to directly import a copy. Or your semantic models may simply be too large and would take too long to load into Power BI, and you want to avoid creating a performance bottleneck.

Power BI solves these problems by using the DirectQuery storage mode, which allows you to query the data in the data source directly and not import a copy into Power BI. DirectQuery is useful because it ensures you're always viewing the most recent version of the data.

The three different types of storage modes you can choose from:

Import -

create a local Power BI copy of your semantic models from your data source, use all Power BI service features with this storage mode,

including Q&A and Quick Insights,

Data refreshes can be scheduled or on-demand.

DirectQuery

useful when you don't want to save local copies of your data because your data won't be cached,

you can query the specific tables that you'll need by using native Power BI queries,

Essentially, you're creating a direct connection to the data source,

ensures that you're always viewing the most up-to-date data, and that all security requirements are satisfied,

Additionally, this mode is suited for when you have large semantic models to pull data from. Instead of slowing down performance by having to load large amounts of data into Power BI, you can use DirectQuery to create a connection to the source, solving data latency issues as well.

Dual (Composite)

In Dual mode, you can identify some data to be directly imported and other data that must be queried. Any table that is brought in to your report is a product of both Import and DirectQuery modes.

Using the Dual mode allows Power BI to choose the most efficient form of data retrieval.

9. Get data from Azure Analysis Services

Azure Analysis Services is a fully managed platform as a service (PaaS) that provides enterprise-grade semantic models in the cloud.

You can use advanced mashup and modeling features to combine data from multiple data sources, define metrics, and secure your data in a single, trusted tabular semantic model.

The semantic model provides an easier and faster way for users to perform ad hoc data analysis using tools like Power BI.

Notable differences between Azure Analysis Services and SQL Server are:

Analysis Services models have calculations already created.

If you don’t need an entire table, you can query the data directly. Instead of using Transact-SQL (T-SQL) to query the data, like you would in SQL Server, you can use multi-dimensional expressions (MDX) or data analysis expressions (DAX).

10. Fix performance issues

Occasionally, organizations will need to address performance issues when running reports.

Power BI provides the Performance Analyzer tool to help fix problems and streamline the process.

i. Optimize performance in Power Query

The performance in Power Query depends on the performance at the data source level.

The variety of data sources that Power Query offers is wide, and the performance tuning techniques for each source are equally wide.

For instance, if you extract data from a Microsoft SQL Server, you should follow the performance tuning guidelines for the product. Good SQL Server performance tuning techniques include index creation, hardware upgrades, execution plan tuning, and data compression.

These topics are beyond the scope here, and are covered only as an example to build familiarity with your data source and reap the benefits when using Power BI and Power Query.

Power Query takes advantage of good performance at the data source through a technique called Query Folding.

a. Query folding

Query folding is the process by which the transformations and edits that you make in Power Query Editor are simultaneously tracked as native queries, or simple Select SQL statements, while you're actively making transformations.

The reason for implementing this process is to ensure that these transformations can take place in the original data source server and don't overwhelm Power BI computing resources.

You can use Power Query to load data into Power BI. Then use Power Query Editor to transform your data, such as renaming or deleting columns, appending, parsing, filtering, or grouping your data.

Consider a scenario where you’ve renamed a few columns in the Sales data and merged a city and state column together in the “city state” format. Meanwhile, the query folding feature tracks those changes in native queries. Then, when you load your data, the transformations take place independently in the original source, this ensures that performance is optimized in Power BI.

The benefits to query folding include:

More efficiency in data refreshes and incremental refreshes. When you import data tables by using query folding, Power BI is better able to allocate resources and refresh the data faster because Power BI doesn't have to run through each transformation locally.

Automatic compatibility with DirectQuery and Dual storage modes. All DirectQuery and Dual storage mode data sources must have the back-end server processing abilities to create a direct connection, which means that query folding is an automatic capability that you can use. If all transformations can be reduced to a single Select statement, then query folding can occur.

If all transformations can be reduced to a single Select statement, then query folding can occur.

Native queries aren't possible for the following transformations:

Adding an index column

Merging and appending columns of different tables with two different sources

Changing the data type of a column

A good guideline to remember is that if you can translate a transformation into a Select SQL statement, which includes operators and clauses such as GROUP BY, SORT BY, WHERE, UNION ALL, and JOIN, you can use query folding.

While query folding is one option to optimize performance when retrieving, importing, and preparing data, another option is query diagnostics.

ii. Query diagnostics

Another tool that you can use to study query performance is query diagnostics. You can determine what bottlenecks may exist while loading and transforming your data, refreshing your data in Power Query, running SQL statements in Query Editor, and so on.

This tool is useful when you want to analyze performance on the Power Query side for tasks such as loading semantic models, running data refreshes, or running other transformative tasks.

iii. Other techniques to optimize performance

Other ways to optimize query performance in Power BI include:

Process as much data as possible in the original data source. Power Query and Power Query Editor allow you to process the data; however, the processing power that is required to complete this task might lower performance in other areas of your reports. Generally, a good practice is to process, as much as possible, in the native data source.

Use native SQL queries. When using DirectQuery for SQL databases, such as the case for our scenario, make sure that you aren't pulling data from stored procedures or common table expressions (CTEs).

Separate date and time, if bound together. If any of your tables have columns that combine date and time, make sure that you separate them into distinct columns before importing them into Power BI. This approach will increase compression abilities.

11. Resolve data import errors

While importing data into Power BI, you may encounter errors resulting from factors such as:

Power BI imports from numerous data sources.

Each data source might have dozens (and sometimes hundreds) of different error messages.

Other components can cause errors, such as hard drives, networks, software services, and operating systems.

Data often can't comply with any specific schema.

The following sections cover some of the more common error messages that you might encounter in Power BI.

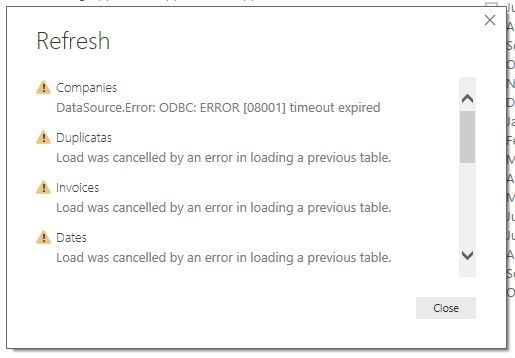

i. Query timeout expired

Relational source systems often have many people who are concurrently using the same data in the same database. Some relational systems and their administrators seek to limit a user from monopolizing all hardware resources by setting a query timeout. These timeouts can be configured for any timespan, from as little as five seconds to as much as 30 minutes or more.

For instance, if you’re pulling data from your organization’s SQL Server, you might see the error shown in the following figure.

ii. Power BI Query Error: Timeout expired

This error indicates that you’ve pulled too much data according to your organization’s policies. Administrators incorporate this policy to avoid slowing down a different application or suite of applications that might also be using that database.

You can resolve this error by pulling fewer columns or rows from a single table. While you're writing SQL statements, it might be a common practice to include groupings and aggregations. You can also join multiple tables in a single SQL statement. Additionally, you can perform complicated subqueries and nested queries in a single statement. These complexities add to the query processing requirements of the relational system and can greatly elongate the time of implementation.

If you need the rows, columns, and complexity, consider taking small chunks of data and then bringing them back together by using Power Query. For instance, you can combine half the columns in one query and the other half in a different query. Power Query can merge those two queries back together after you're finished.

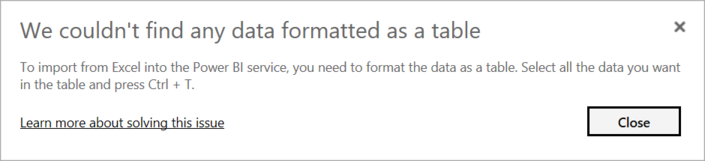

iii. We couldn't find any data formatted as a table

Occasionally, you may encounter the “We couldn’t find any data formatted as a table” error while importing data from Microsoft Excel. Fortunately, this error is self-explanatory. Power BI expects to find data formatted as a table from Excel. The error even tells you the resolution. Perform the following steps to resolve the issue:

Open your Excel workbook, and highlight the data that you want to import.

Press the Ctrl-T keyboard shortcut. The first row will likely be your column headers.

Verify that the column headers reflect how you want to name your columns. Then, try to import data from Excel again. This time, it should work.

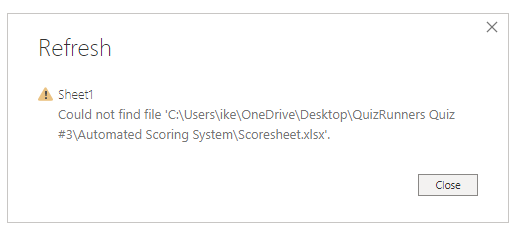

iv. Couldn't find file

While importing data from a file, you may get the "Couldn't find file" error.

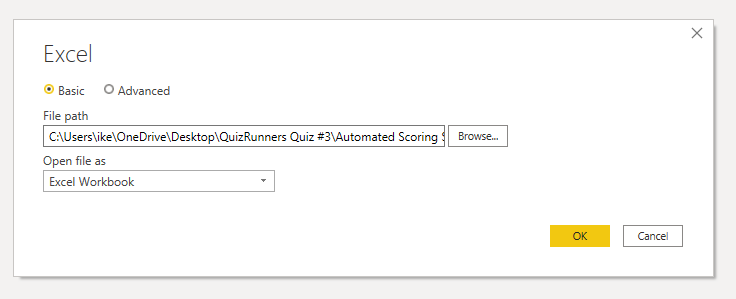

Usually, this error is caused by the file moving locations or the permissions to the file changing. If the cause is the former, you need to find the file and change the source settings.

Open Power Query by selecting the Transform Data button in Power BI.

Highlight the query that is creating the error.

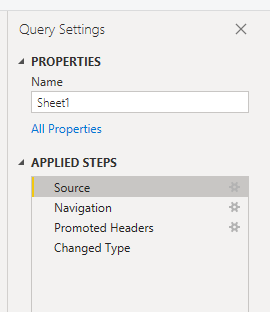

On the left, under Query Settings, select the gear icon next to Source.

Change the file location to the new location.

iv. Data type errors

Sometimes, when you import data into Power BI, the columns appear blank. This situation happens because of an error in interpreting the data type in Power BI. The resolution to this error is unique to the data source. For instance, if you're importing data from SQL Server and see blank columns, you could try to convert to the correct data type in the query.

Instead of using this query:

SELECT CustomerPostalCode FROM Sales.Customers

Use this query:

SELECT CAST(CustomerPostalCode as varchar(10)) FROM Sales.Customers

By specifying the correct type at the data source, you eliminate many of these common data source errors.

You may encounter different types of errors in Power BI that are caused by the diverse data source systems where your data resides.

12. Exercise - Prepare data in Power BI Desktop

Get Data in Power BI Desktop

Lab story

This lab is designed to introduce you to Power BI Desktop application and how to connect to data and how to use data preview techniques to understand the characteristics and quality of the source data. The learning objectives are:

Open Power BI Desktop

Connect to different data sources

Preview source data with Power Query

Use data profiling features in Power Query

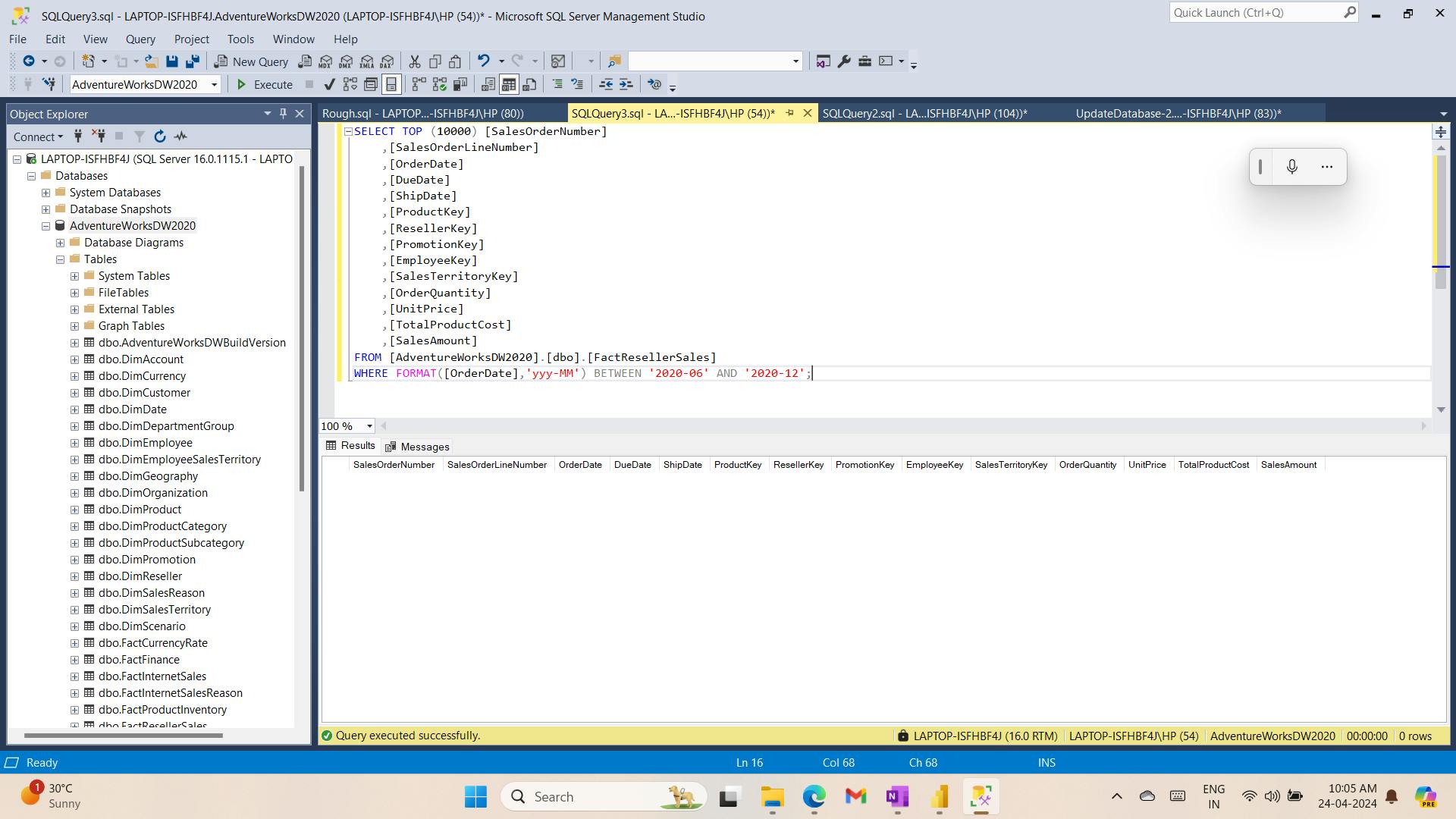

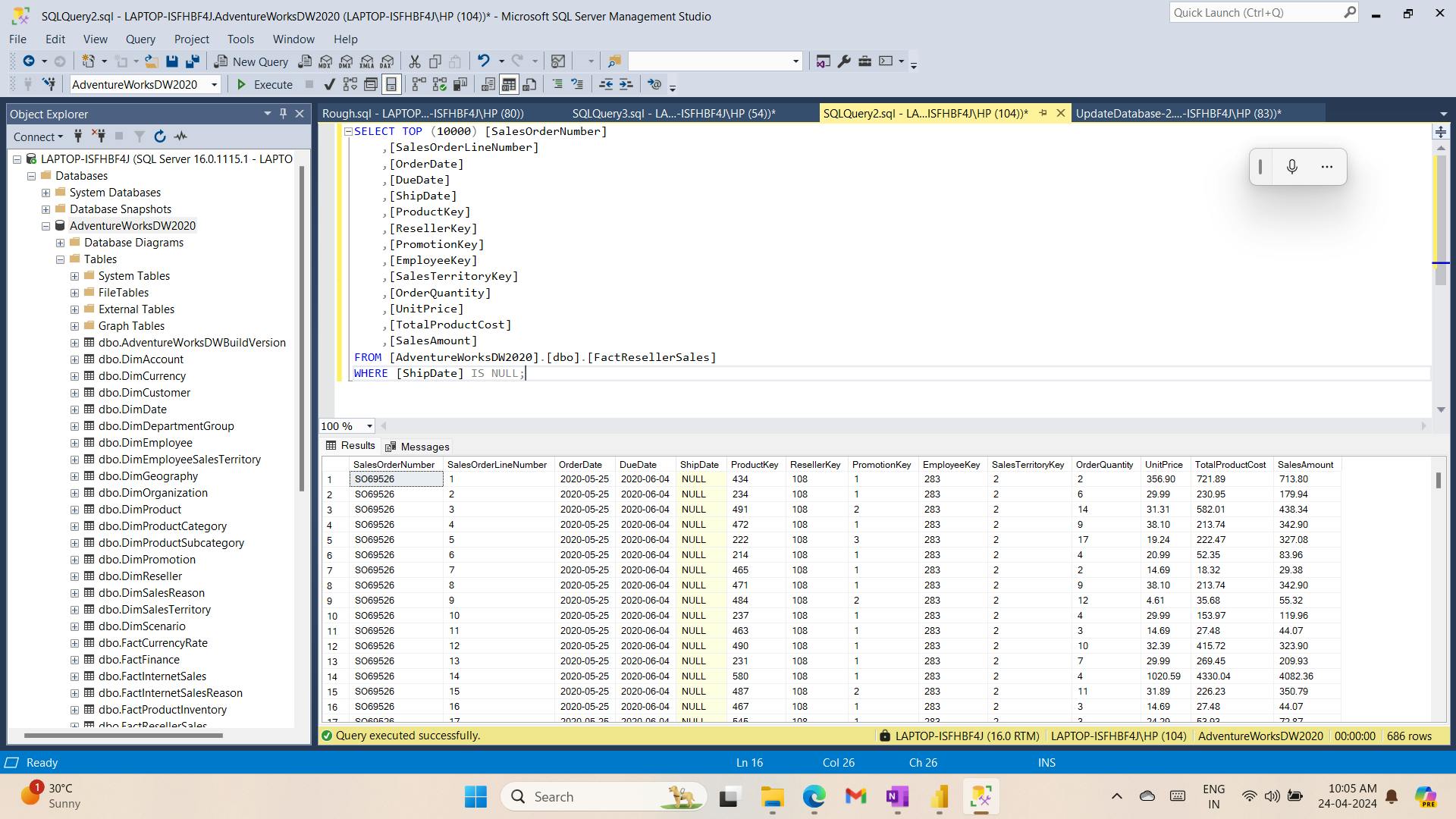

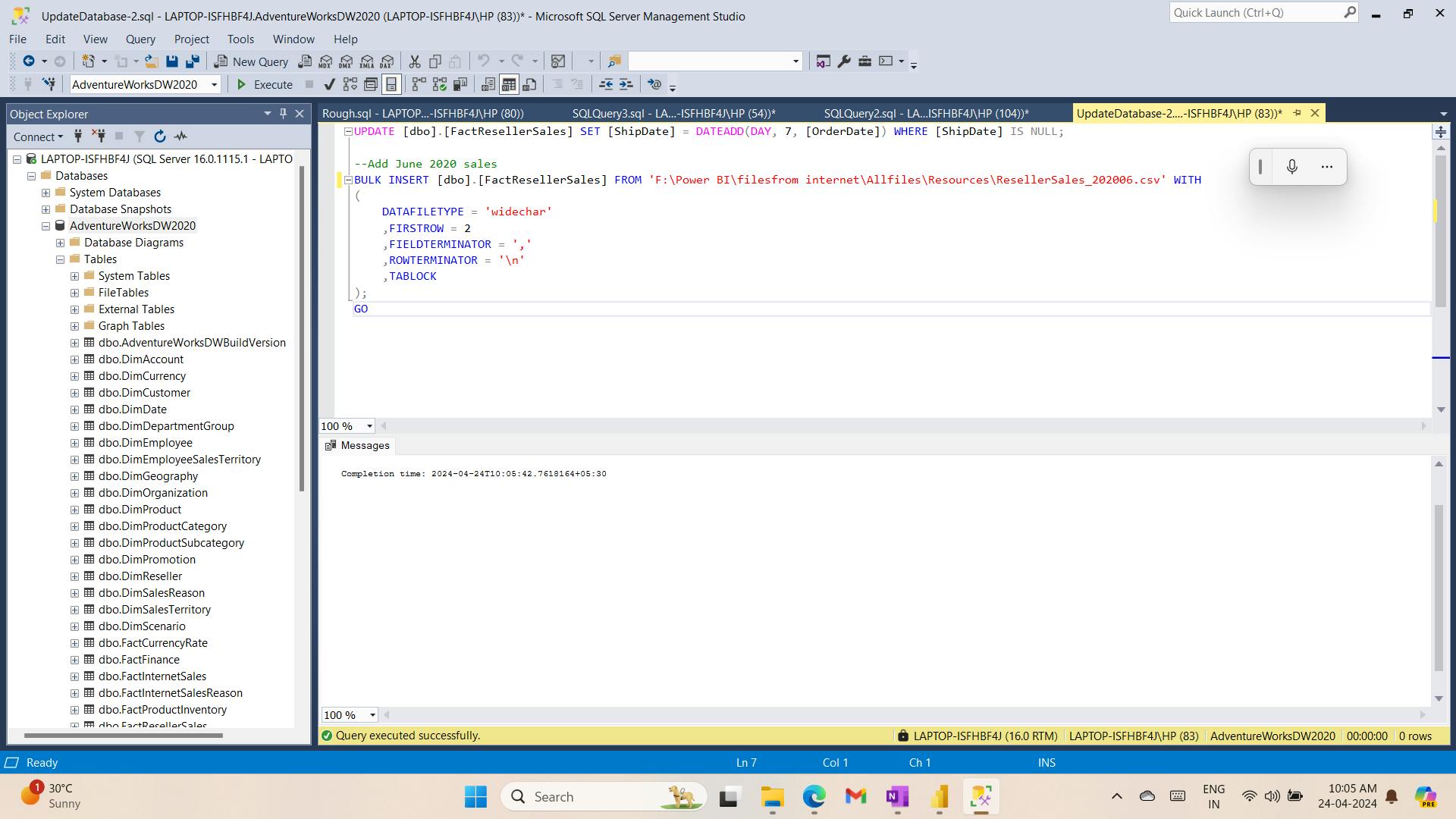

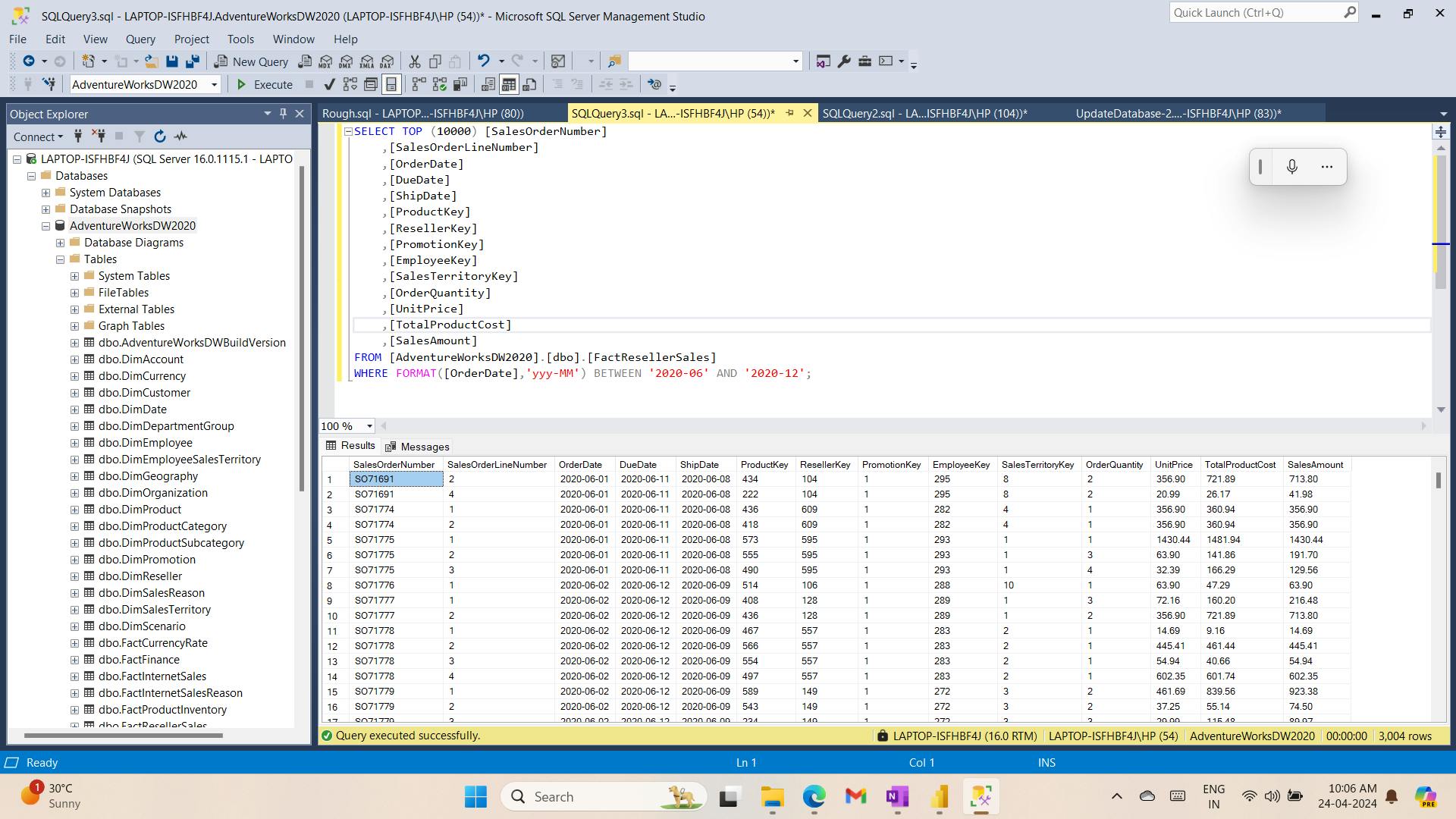

i. Get data from SQL Server

ii. Preview Data in Power Query Editor

Observed:

a. DimEmployee Query

table - Notice that the last five columns contain Table or Value links.

These five columns represent relationships to other tables in the database.

They can be used to join tables together. You’ll join tables in the Load Transformed Data in Power BI Desktop lab.

Column Quality for Position column - shows 94% empty.

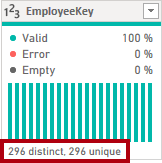

Column Distribution for EmployeeKey column

When the distinct and unique counts are the same, it means the column contains unique values.

When modeling, it’s important that some model tables have unique columns. These unique columns can be used to create one-to-many relationships, which you’ll do in theModel Data in Power BI Desktoplab.

b. DimReseller query

Column Profile for BusinessType column header - Notice the data quality issue: there are two labels for warehouse (Warehouse, and the misspelled Ware House) - You’ll apply a transformation to relabel these five rows in the Load Transformed Data in Power BI Desktop lab.

c. DimSalesTerritory query

In the Model Data in Power BI Desktop lab, you’ll create a hierarchy to support analysis at region, country, or group level.

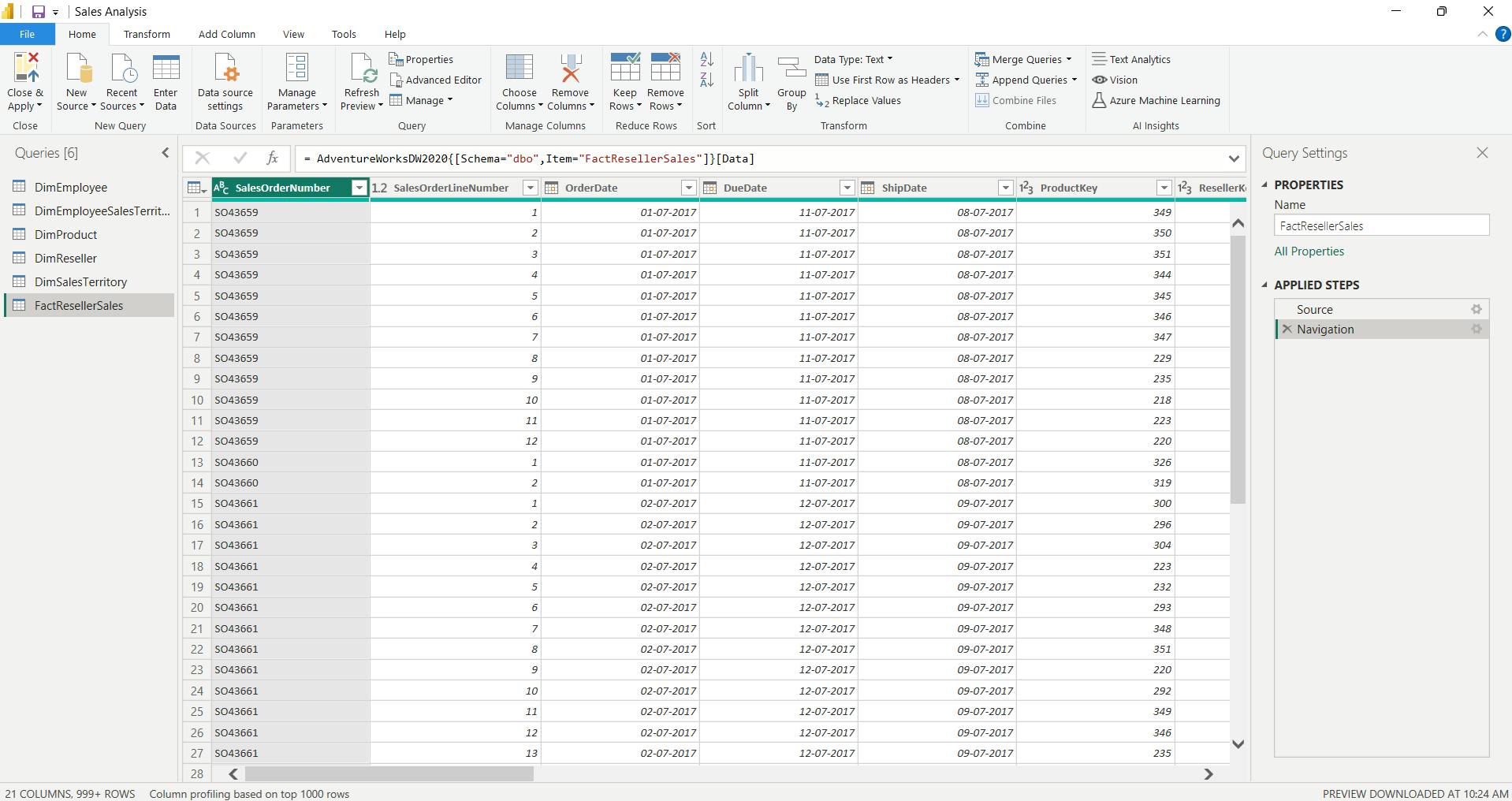

d. FactResellerSales query

column quality for the TotalProductCost column, and notice that 8% of the rows are empty - Missing TotalProductCost column values is a data quality issue. To address the issue, in the Load Transformed Data in Power BI Desktop lab, you’ll apply transformations to fill in missing values by using the product standard cost, which is stored in the related DimProduct table.

iii. Get data from a CSV file

ResellerSalesTargets query

Repeat the steps to create a query based on the D:\Allfiles\Resources\ColorFormats.csv file.

Save / apply later.

13. Check your knowledge

T-SQL is the query language that you would use for SQL Server.

You're creating a Power BI report with data from an Azure Analysis Services MDX Cube. When the data refreshes in the cube, you would like to see it immediately in the Power BI report. How should you connect? - Live connection.

What can you do to improve performance when you're getting data in Power BI?Always use the least amount of data needed for your project.

14. Summary

In this module, you learned about pulling data from many different data sources and into Power BI.

You can pull data from files, relational databases, Azure Analysis Services, cloud-based applications, websites, and more.

Retrieving data from different data sources requires treating each data source differently. For instance, Microsoft Excel data should be pulled in from an Excel table. Relational databases often have query timeouts.

You can connect to SQL Server Analysis Services with Connect live, which allows you to see data changes in real-time.

It's important to select the correct storage mode for your data.

Do you require that visuals interact quickly but don’t mind possibly refreshing the data when the underlying data source changes?

If so, select Import to import data into Power BI.

If you prefer to see updates to data as soon as they happen at the cost of interactivity performance, then choose Direct Query for your data instead.

In addition, you learned how to solve performance problems and data import errors.

You learned that Power BI gives you tooling to identify where performance problems may exist. Data import errors can be alarming at first, but you can see that the resolution is easily implemented.

Learning objectives

By the end of this module, you'll be able to:

Identify and connect to a data source

Get data from a relational database, like Microsoft SQL Server

Get data from a file, like Microsoft Excel

Get data from applications

Get data from Azure Analysis Services

Select a storage mode

Fix performance issues

Resolve data import errors

IV. Module 4 Clean, transform, and load data in Power BI

| Course | Microsoft Power BI Data Analyst |

| Module 4/17 | Clean, transform, and load data in Power BI |

Power Query has an incredible number of features that are dedicated to helping you clean and prepare your data for analysis.

You'll learn how to simplify a complicated model, change data types, rename objects, and pivot data.

You'll also learn how to profile columns so that you know which columns have the valuable data that you’re seeking for deeper analytics.

1. Introduction

When examining the data, you discover several issues, including:

A column called Employment status only contains numerals.

Several columns contain errors.

Some columns contain null values.

The customer ID in some columns appears as if it was duplicated repeatedly.

A single address column has combined street address, city, state, and zip code.

Clean data has the following advantages:

Measures and columns produce more accurate results when they perform aggregations and calculations.

Tables are organized, where users can find the data in an intuitive manner.

Duplicates are removed, making data navigation simpler. It will also produce columns that can be used in slicers and filters.

A complicated column can be split into two, simpler columns. Multiple columns can be combined into one column for readability.

Codes and integers can be replaced with human readable values.

2. Shape the initial data

Power Query Editor in Power BI Desktop allows you to shape (transform) your imported data.

You can accomplish actions such as

renaming columns or tables,

changing text to numbers,

removing rows,

setting the first row as headers,

and much more.

It is important to shape your data to ensure that it meets your needs and is suitable for use in reports.

i. Get started with Power Query Editor

Removing columns at an early stage in the process rather than later is best, especially when you have established relationships between your tables. Removing unnecessary columns will help you to focus on the data that you need and help improve the overall performance of your Power BI Desktop semantic models and reports.

3. Simplify the data structure

Rename a query

Replace values

Replace null values

Remove duplicates

4. Evaluate and change column data types

Implications of incorrect data types

Incorrect data types will prevent you from creating certain calculations, deriving hierarchies, or creating proper relationships with other tables.

For example, if you try to calculate the Quantity of Orders YTD, you'll get the following error stating that the OrderDate column data type isn't Date, which is required in time-based calculations.

Another issue with having an incorrect data type applied on a date field is the inability to create a date hierarchy, which would allow you to analyze your data on a yearly, monthly, or weekly basis.

Change the column data type in Power Query Editor

the change that you make to the column data type is saved as a programmed step. This step is called Changed Type

5. Combine multiple tables into a single table

You can combine tables into a single table in the following circumstances:

Too many tables exist, making it difficult to navigate an overly complicated semantic model.

Several tables have a similar role.

A table has only a column or two that can fit into a different table.

You want to use several columns from different tables in a custom column.

You can combine the tables in two different ways: merging and appending.

i. Append queries

When you append queries, you'll be adding rows of data to another table or query.

For example, you could have two tables, one with 300 rows and another with 100 rows, and when you append queries, you'll end up with 400 rows.

ii. Merge queries

When you merge queries, you'll be adding columns from one table (or query) into another. To merge two tables, you must have a column that is the key between the two tables.

This process is similar to the JOIN clause in SQL.

6. Profile data in Power BI

Profiling data is about studying the nuances of the data: determining anomalies, examining and developing the underlying data structures, and querying data statistics such as row counts, value distributions, minimum and maximum values, averages, and so on.

i. Find data anomalies and data statistics

Column quality shows you the percentages of data that is valid, in error, and empty.

Column profile gives you a more in-depth look into the statistics within the columns for the first 1,000 rows of data.

Column distribution shows you the distribution of the data within the column and the counts of distinct and unique values, both of which can tell you details about the data counts.

7. Use Advanced Editor to modify M code

Each time you shape data in Power Query, you create a step in the Power Query process. Those steps can be reordered, deleted, and modified where it makes sense.

8. Exercise - Load data in Power BI Desktop

Load Transformed Data in Power BI Desktop

Lab story

In this lab, you’ll use data cleansing and transformation techniques to start shaping your data model.

You’ll then apply the queries to load each as a table to the data model.

In this lab you learn how to:

Apply various transformations

Load queries to the data model

i. Configure the Salesperson query

ii. Configure the SalespersonRegion query

iii. Configure the Product query

iv. Configure the Reseller query

v. Configure the Region query

vi. Configure the Sales query

vii. Configure the Targets query

viii. Configure the ColorFormats query

ix. Update the Product query

x. Update the ColorFormats query

In the Query Properties window, uncheck the Enable Load To Report checkbox.

Disabling the load means it will not load as a table to the data model. This is done because the query was merged with theProductquery, which is enabled to load to the data model.

xi. Finish up

9. Check your knowledge

What is a risk of having null values in a numeric column?

AVERAGE takes the total and divides by the number of non-null values. If NULL is synonymous with zero in the data, the average will be different from the accurate average.

If you have two queries that have different data but the same column headers, and you want to combine both tables into one query with all the combined rows, which operation should you perform?

Append will take two tables and combine it into one query. The combined query will have more rows while keeping the same number of columns.

Which of the following selections aren't best practices for naming conventions in Power BI?

Abbreviations lead to confusion because they're often overused or not universally agreed on.

10. Summary

This module explained how you can take data that is difficult to read, build calculations on, and discover and make it simpler for report authors and others to use.

Additionally, you learned how to combine queries so that they were fewer in number, which makes data navigation more streamlined.

You also replaced renamed columns into a human readable form and reviewed good naming conventions for objects in Power BI.

Learning objectives

By the end of this module, you’ll be able to:

Resolve inconsistencies, unexpected or null values, and data quality issues.

Apply user-friendly value replacements.

Profile data so you can learn more about a specific column before using it.

Evaluate and transform column data types.

Apply data shape transformations to table structures.

Combine queries.

Apply user-friendly naming conventions to columns and queries.

Edit M code in the Advanced Editor.

V. Module 5 Design a semantic model in Power BI

| Course | Microsoft Power BI Data Analyst |

| Module 5/17 | Design a semantic model in Power BI |

Building a great semantic model is about simplifying the disarray.

A star schema is one way to simplify a semantic model.

You will also learn about why choosing the correct data granularity is important for performance and usability of your Power BI reports.

Finally, you learn about improving performance with your Power BI semantic models.

1. Introduction

A good semantic model offers the following benefits:

Data exploration is faster.

Aggregations are simpler to build.

Reports are more accurate.

Writing reports takes less time.

Reports are easier to maintain in the future.

Typically, a smaller semantic model is composed of fewer tables and fewer columns in each table that the user can see.

To summarize, you should aim for simplicity when designing your semantic models.

i. Star schemas

Now that you have learned about the relationships that make up the data schema, you are able to explore a specific type of schema design, the star schema, which is optimized for high performance and usability.

ii. Fact tables

Fact tables contain observational or event data values: sales orders, product counts, prices, transactional dates and times, and quantities.

Fact tables can contain several repeated values.

For example, one product can appear multiple times in multiple rows, for different customers on different dates. These values can be aggregated to create visuals.

For instance, a visual of the total sales orders is an aggregation of all sales orders in the fact table.

With fact tables, it is common to see columns that are filled with numbers and dates. The numbers can be units of measurement, such as sale amount, or they can be keys, such as a customer ID. The dates represent time that is being recorded, like order date or shipped date.

The Sales table contains the sales order values, which can be aggregated, it is considered a fact table.

iii. Dimension tables

Dimension tables contain the details about the data in fact tables: products, locations, employees, and order types.

These tables are connected to the fact table through key columns.

Dimension tables are used to filter and group the data in fact tables.

The fact tables, on the other hand, contain the measurable data, such as sales and revenue, and each row represents a unique combination of values from the dimension tables.

For the total sales orders visual, you could group the data so that you see total sales orders by product, in which product is data in the dimension table.

Fact tables are much larger than dimension tables because numerous events occur in fact tables, such as individual sales.

Dimension tables are typically smaller because you are limited to the number of items that you can filter and group on.

For instance, a year contains only so many months, and the United States are composed of only a certain number of states.

The Employee table contains the specific employee name, which filters the sales orders, so it would be a dimension table.

Note:

The Sales table contains the sales order values, which can be aggregated, it is considered a fact table.

The Employee table contains the specific employee name, which filters the sales orders, so it would be a dimension table

Star schemas and the underlying semantic model are the foundation of organized reports; the more time you spend creating these connections and design, the easier it will be to create and maintain reports.

2. Work with tables

This process of formatting and configuring tables can also be done in Power Query.

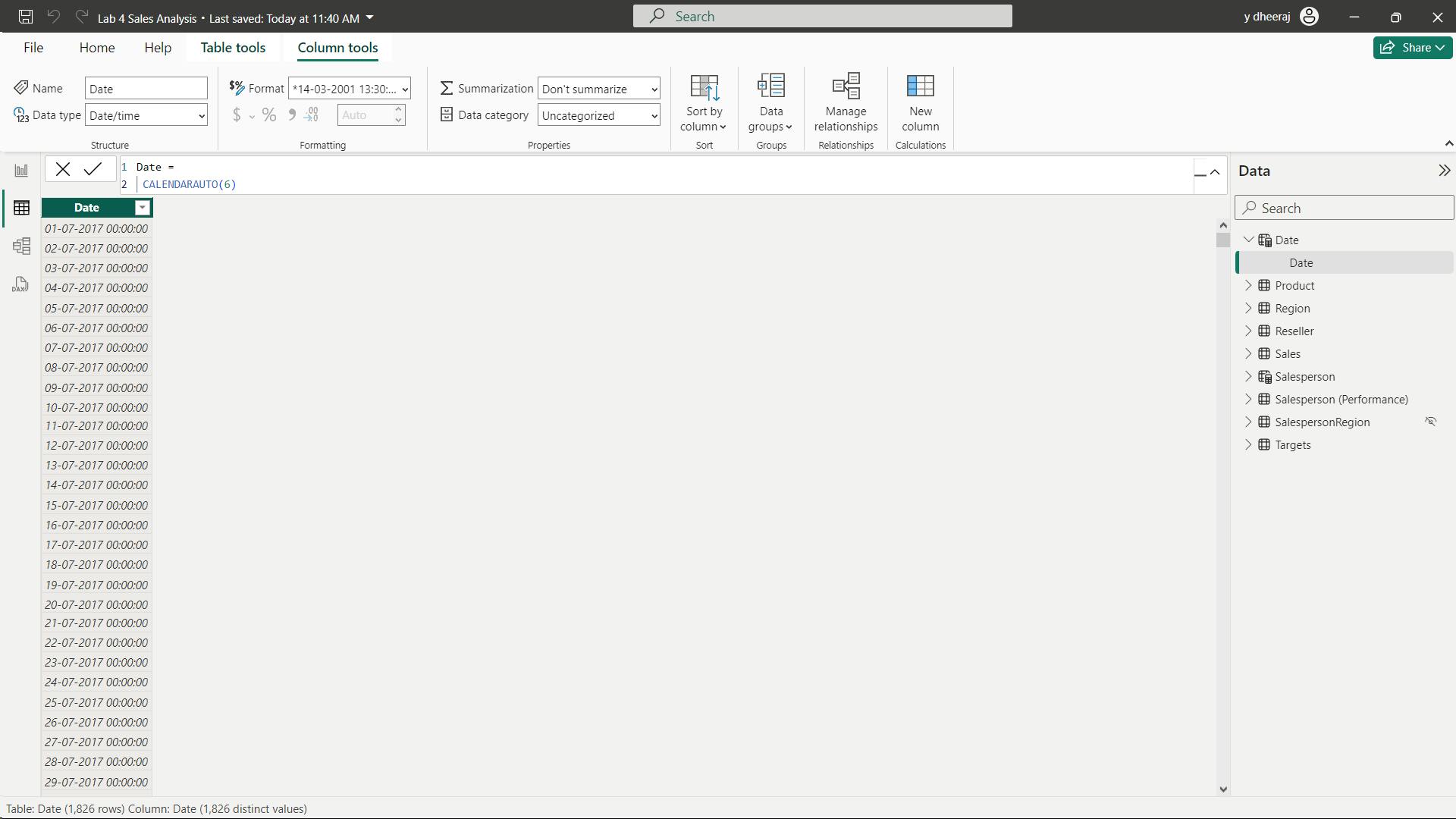

3. Create a date table

During report creation in Power BI, a common business requirement is to make calculations based on date and time. Organizations want to know how their business is doing over months, quarters, fiscal years, and so on.

You can create a common date table that can be used by multiple tables.

i. Create a common date table

Ways that you can build a common date table are:

Source data

DAX

Power Query

ii. DAX

You can use the Data Analysis Expression (DAX) functions CALENDARAUTO() or CALENDAR() to build your common date table.

The CALENDARAUTO() function returns a contiguous, complete range of dates that are automatically determined from your semantic model.

The starting date is chosen as the earliest date that exists in your semantic model, and the ending date is the latest date that exists in your semantic model plus data that has been populated to the fiscal month that you can choose to include as an argument in the CALENDARAUTO() function.

Dates = CALENDAR(DATE(2011, 5, 31), DATE(2022, 12, 31))

Year = YEAR(Dates[Date])

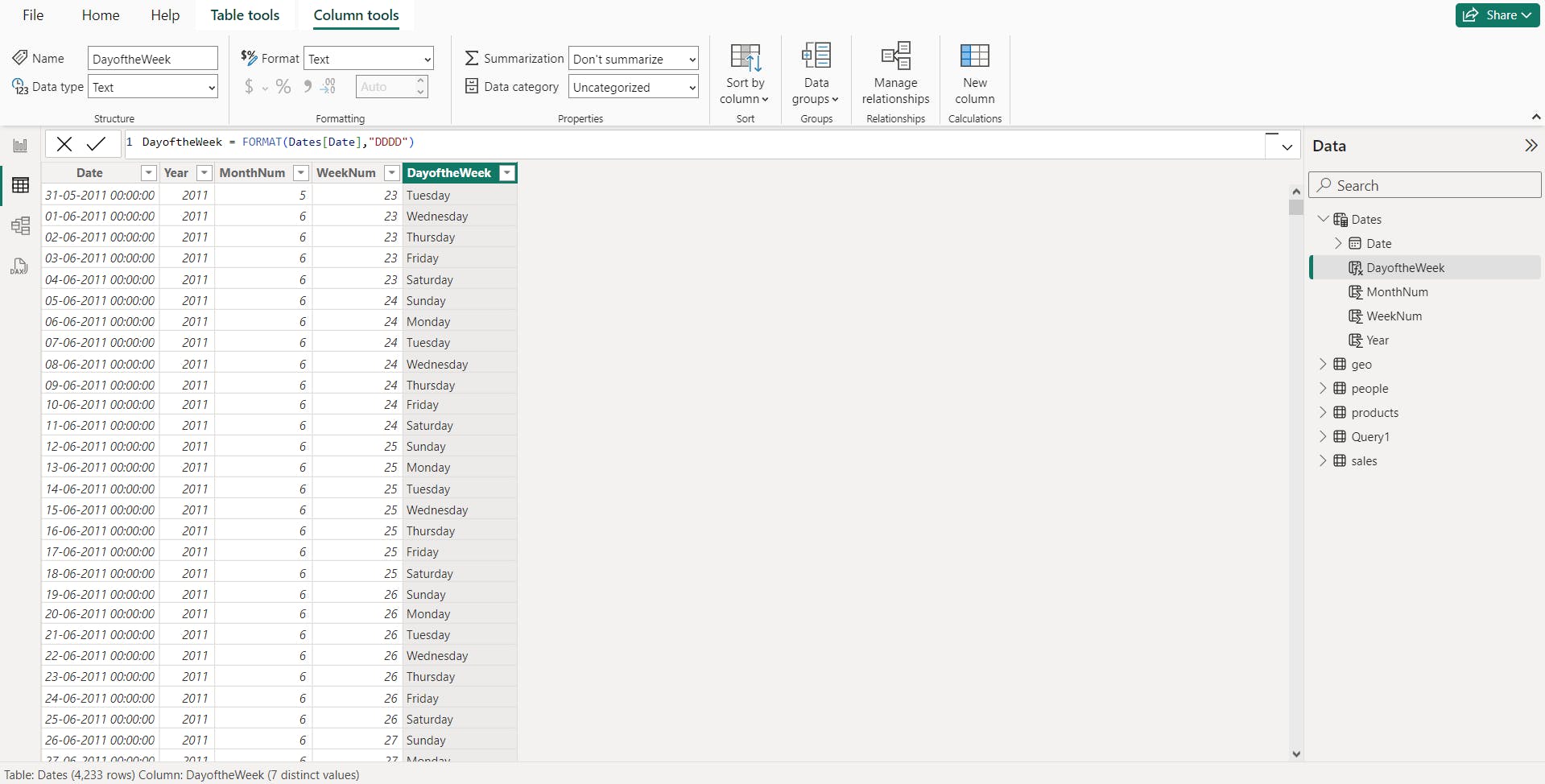

iii. Power Query

You can use M-language, the development language that is used to build queries in Power Query, to define a common date table.

= List.Dates(

#date(2011,05,31), // start date

365*10, //Dates for everyday for the next 10 years

#duration(1,0,0,0) //Specifies duration of the period 1 = days, 0 = hours, 0 = minutes, 0 = seconds

)

4. Work with dimensions

When building a star schema, you will have dimension and fact tables.

Fact tables contain information about events such as sales orders, shipping dates, resellers, and suppliers.

Dimension tables store details about business entities, such as products or time, and are connected back to fact tables through a relationship.

You can use hierarchies as one source to help you find detail in dimension tables. These hierarchies form through natural segments in your data.

For instance, you can have a hierarchy of dates in which your dates can be segmented into years, months, weeks, and days.

Hierarchies are useful because they allow you to drill down into the specifics of your data instead of only seeing the data at a high level.

i. Hierarchies

To create a hierarchy, go to the Fields pane on Power BI and then right-click the column that you want the hierarchy for. Select New hierarchy,

Parent-child hierarchy

Flatten parent-child hierarchy

The process of viewing multiple child levels based on a top-level parent is known as flattening the hierarchy.

In this process, you are creating multiple columns in a table to show the hierarchical path of the parent to the child in the same record. You will use PATH(), a simple DAX function that returns a text version of the managerial path for each employee, and PATHITEM() to separate this path into each level of managerial hierarchy.

Path = PATH(Employee[Employee ID], Employee[Manager ID])

To flatten the hierarchy, you can separate each level by using the PATHITEM function.

To view all three levels of the hierarchy separately, you can create four columns in the same way that you did previously, by entering the following equations. You will use the PATHITEM function to retrieve the value that resides in the corresponding level of your hierarchy.

Level 1 = PATHITEM(Employee[Path],1)

Level 2 = PATHITEM(Employee[Path],2)

Level 3 = PATHITEM(Employee[Path],3)

ii. Role-playing dimensions

Role-playing dimensions have multiple valid relationships with fact tables, meaning that the same dimension can be used to filter multiple columns or tables of data. As a result, you can filter data differently depending on what information you need to retrieve.

Calendar is the dimension table, while Sales and Order are fact tables.

The dimension table has two relationships: one with Sales and one with Order.

This example is of a role-playing dimension because the Calendar table can be used to group data in both Sales and Order.

If you wanted to build a visual in which the Calendar table references the Order and the Sales tables, the Calendar table would act as a role-playing dimension.

5. Define data granularity

Data granularity is the detail that is represented within your data, meaning that the more granularity your data has, the greater the level of detail within your data.

6. Work with relationships and cardinality

Power BI has the concept of directionality to a relationship.

This directionality plays an important role in filtering data between multiple tables.

i. Relationships

The following are different types of relationships that you'll find in Power BI.

Many-to-one (*:1) or one-to-many (1: *) relationship

Describes a relationship in which you have many instances of a value in one column that are related to only one unique corresponding instance in another column.

Describes the directionality between fact and dimension tables.

Is the most common type of directionality and is the Power BI default when you are automatically creating relationships.

One-to-one (1:1) relationship:

Describes a relationship in which only one instance of a value is common between two tables.

Requires unique values in both tables.

Is not recommended because this relationship stores redundant information and suggests that the model is not designed correctly. It is better practice to combine the tables.

Many-to-many (.) relationship:

Describes a relationship where many values are in common between two tables.

Does not require unique values in either table in a relationship.

Is not recommended; a lack of unique values introduces ambiguity and your users might not know which column of values is referring to what.

ii. Cross-filter direction

Data can be filtered on one or both sides of a relationship.

With a single cross-filter direction:

- Only one table in a relationship can be used to filter the data. For instance, Table 1 can be filtered by Table 2, but Table 2 cannot be filtered by Table 1.

Note: **

Follow the direction of the arrow on the relationship between your tables to know which direction the filter will flow. You typically want these arrows to point to your fact table.

- For a one-to-many or many-to-one relationship, the cross-filter direction will be from the "one" side, meaning that the filtering will occur in the table that has many values.

With both cross-filter directions or bi-directional cross-filtering:

One table in a relationship can be used to filter the other. For instance, a dimension table can be filtered through the fact table, and the fact tables can be filtered through the dimension table.

You might have lower performance when using bi-directional cross-filtering with many-to-many relationships.

iii. Cardinality and cross-filter direction

For one-to-one relationships, the only option that is available is bi-directional cross-filtering.

For many-to-many relationships, you can choose to filter in a single direction or in both directions by using bi-directional cross-filtering.

iv. Create many-to-many relationships

7. Resolve modeling challenges

When you are creating these relationships, a common pitfall that you might encounter are circular relationships.

i. Relationship dependencies

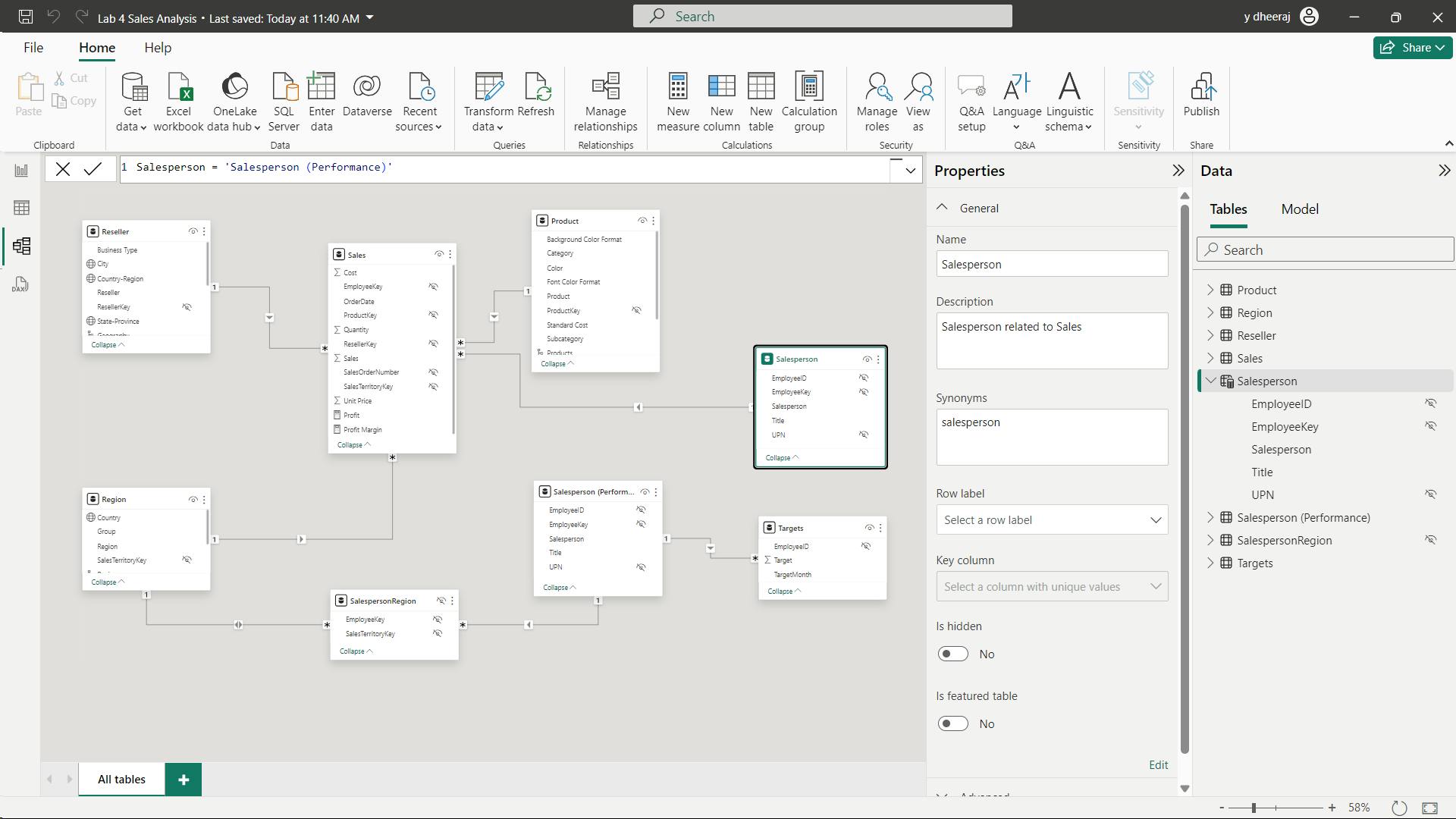

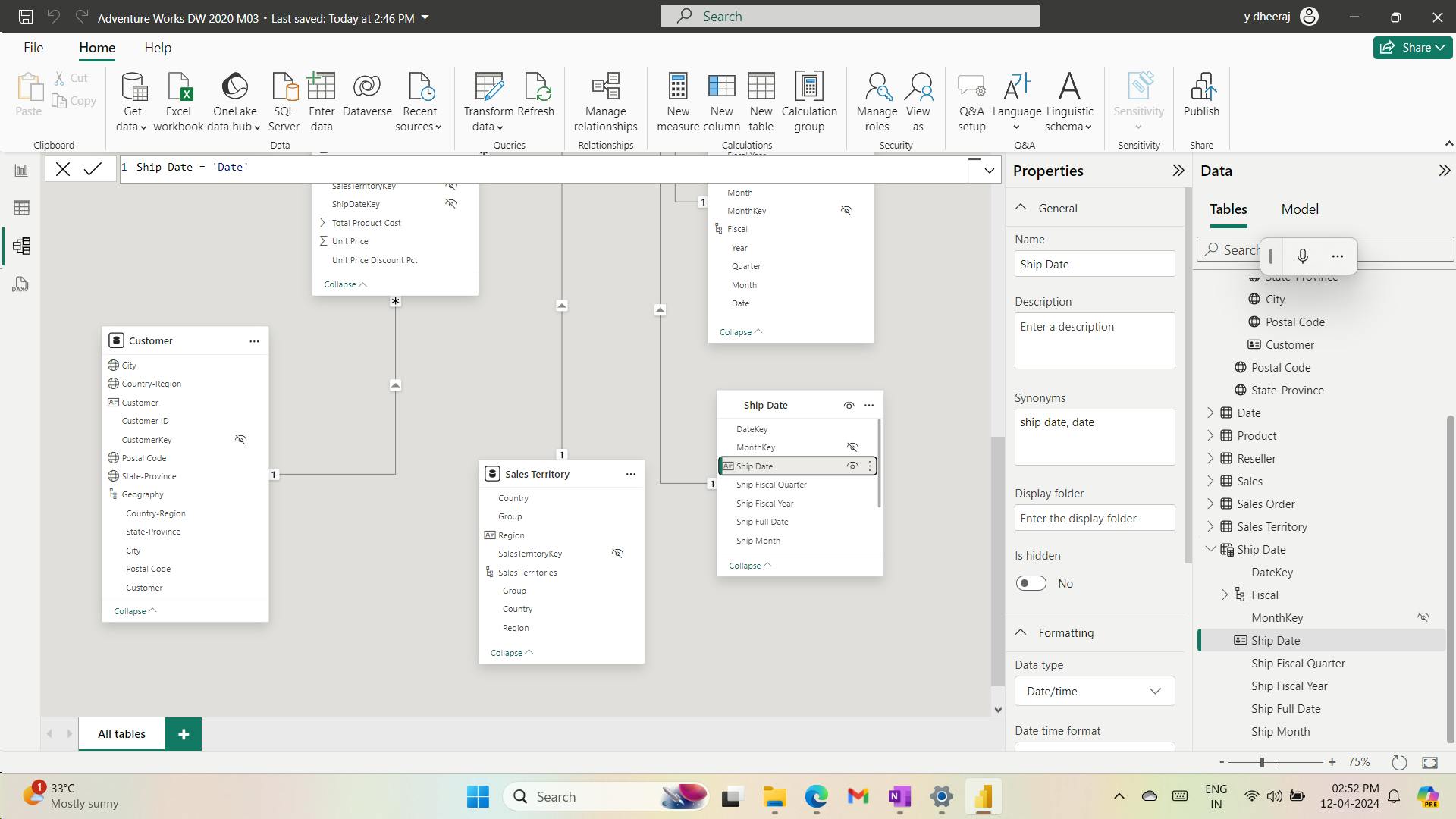

8. Exercise - Model data in Power BI Desktop

Design a Data Model in Power BI

Lab story

It will involve creating relationships between tables, and then configuring table and column properties to improve the friendliness and usability of the data model.

You’ll also create hierarchies and create quick measures.

In this lab you learn how to:

Create model relationships

Configure table and column properties

Create hierarchies

i. Create model relationships

You can interpret the cardinality that is represented by the 1 and (*) indicators.

Filter direction is represented by the arrow head.

A solid line represents an active relationship; a dashed line represents an inactive relationship.

Hover the cursor over the relationship to highlight the related columns.

ii. Configure Tables

iii. Configure the Product table

To organize columns into a display folder, in the Data pane, first select the Background Color Format column.

While pressing the Ctrl key, select the Font Color Format column.

In the Properties pane, in the Display Folder box, enter Formatting.

Display folders are a great way to declutter tables—especially for tables that comprise many fields. They’re logical presentation only.

iv. Configure the Region table

In the Properties pane, expand the Advanced section (at the bottom of the pane), and then in the Data Category dropdown list, select Country/Region.

Data categorization can provide hints to the report designer. In this case, categorizing the column as country or region provides more accurate information to Power BI when it renders a map visualization.

v. Configure the Reseller table

vi. Configure the Sales table

vii. Bulk update properties

In the Properties pane, slide the Is Hidden property to Yes.

The columns were hidden because they’re either used by relationships or will be used in row-level security configuration or calculation logic.

viii. Review the Model Interface

In the Data pane, notice the following:

Columns, hierarchies and their levels are fields, which can be used to configure report visuals

Only fields relevant to report authoring are visible

The SalespersonRegion table isn’t visible—because all of its fields are hidden

Spatial fields in the Region and Reseller table are adorned with a spatial icon

Fields adorned with the sigma symbol (Ʃ) will summarize, by default

A tooltip appears when hovering the cursor over the Sales | Cost field

ix. Review the model interface

To turn off auto/date time, Navigate to File > Options and Settings > Options > Current File group, and select Data Load. In the Time Intelligence section, uncheck Auto Date/Time.

x. Create Quick Measures

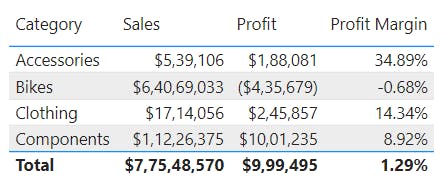

xi. Create quick measures

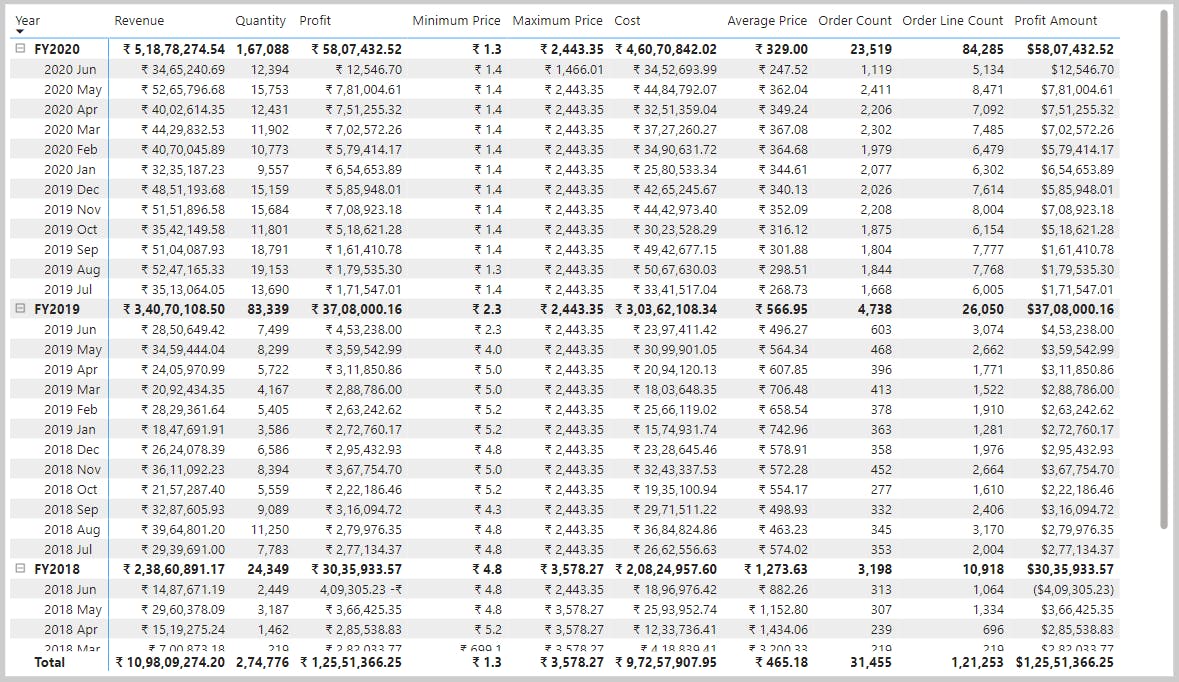

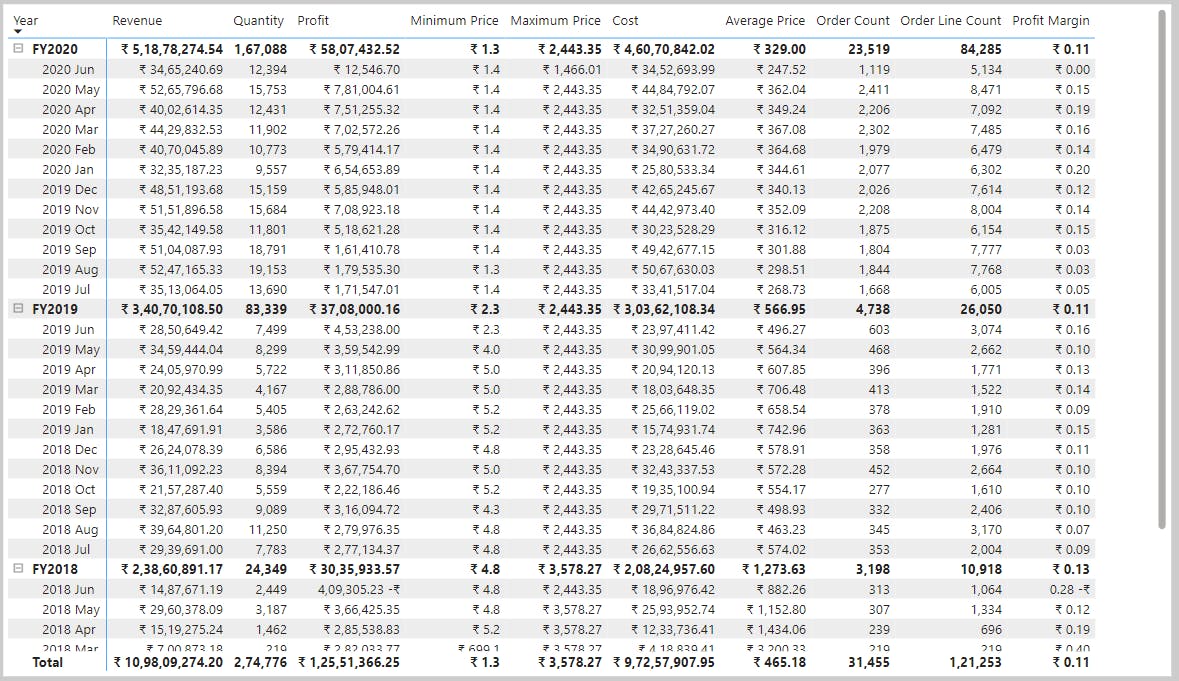

Profit =

SUM('Sales'[Sales]) - SUM('Sales'[Cost])

Profit Margin =

DIVIDE([Profit], SUM('Sales'[Sales]))

xii. Create a many-to-many relationship

xiii. Relate the Targets table

9. Check your knowledge

What does data granularity mean?

The level of detail in your data, meaning that higher granularity means more detailed data.

Data granularity refers to how finely or coarsely your data is divided or aggregated.

What is the difference between a fact table and a dimension table?

Fact tables contain observational data such as sales orders, employees, shipping dates, and so on, while dimension tables contain information about specific entities such as product IDs and dates.

Choose the best answer to explain relationship cardinality?

Cardinality is the measure of unique values in a table.

An example of high cardinality would be a Sales table as it has a high number of unique values.

10. Summary

You have learned about modeling data in Power BI, which includes such topics as creating common date tables, learning about and configuring many-to-many relationships, resolving circular relationships, designing star schemas, and much more.

These skills are crucial to the Power BI practitioner's toolkit so that it is easier to build visuals and hand off your report elements to other teams. With this foundation, you now have the ability to explore the many nuances of the semantic model.

Learning objectives

In this module, you will:

Create common date tables

Configure many-to-many relationships

Resolve circular relationships

Design star schemas

VI. Module 6 Add measures to Power BI Desktop models

| Course | Microsoft Power BI Data Analyst |

| Module 6/17 |

In this module,

you'll learn how to work with implicit and explicit measures.

You'll start by creating simple measures, which summarize a single column or table.

Then, you'll create more complex measures based on other measures in the model.

Additionally, you'll learn about the similarities of, and differences between, a calculated column and a measure.

1. Introduction

Implicit measures are automatic behaviors that allow visuals to summarize model column data.

Explicit measures, also known simply as measures, are calculations that you can add to your model.

a column that's shown with the sigma symbol ( ∑ ) indicates two facts:

It's a numeric column.

It will summarize column values when it is used in a visual (when added to a field well that supports summarization).

Implicit measures allow the report author to start with a default summarization technique and lets them modify it to suit their visual requirements.

i. Summarize non-numeric columns

Non-numeric columns can be summarized.

However, the sigma symbol does not show next to non-numeric columns in the Fields pane because they don't summarize by default.

Text columns allow the following aggregations:

First (alphabetically) Last (alphabetically) Count (Distinct) Count

Date columns allow the following aggregations:

Earliest Latest Count (Distinct) Count

Boolean columns allow the following aggregations:

Count (Distinct) Count

ii. Benefits of implicit measures

Several benefits are associated with implicit measures. Implicit measures are simple concepts to learn and use, and they provide flexibility in the way that report authors visualize model data.

Additionally, they mean less work for you as a data modeler because you don't have to create explicit calculations.

iii. Limitations of implicit measures

The most significant limitation of implicit measures is that they only work for simple scenarios, meaning that they can only summarize column values that use a specific aggregation function. Therefore, in situations when you need to calculate the ratio of each month's sales amount over the yearly sales amount, you'll need to produce an explicit measure by writing a Data Analysis Expressions (DAX) formula to achieve that more sophisticated requirement.

Implicit measures don't work when the model is queried by using Multidimensional Expressions (MDX). This language expects explicit measures and can't summarize column data. It's used when a Power BI semantic model is queried by using Analyze in Excel or when a Power BI paginated report uses a query that is generated by the MDX graphical query designer.

2. Create simple measures

A measure formula must return a scalar or single value.

In tabular modeling, no such concept as a calculated measure exists. The word calculated is used to describe calculated tables and calculated columns. It distinguishes them from tables and columns that originate from Power Query, which doesn't have the concept of an explicit measure.

Measures don't store values in the model. Instead, they're used at query time to return summarizations of model data. Additionally, measures can't reference a table or column directly; they must pass the table or column into a function to produce a summarization.

A simple measure is one that aggregates the values of a single column; it does what implicit measures do automatically.

Measures:

Revenue =

SUM(Sales[Sales Amount])

Cost =

SUM(Sales[Total Product Cost])

Profit =

SUM(Sales[Profit Amount])

Quantity =

SUM(Sales[Order Quantity])

Minimum Price =

MIN(Sales[Unit Price])

Maximum Price =

MAX(Sales[Unit Price])

Average Price =

AVERAGE(Sales[Unit Price])

Order Line Count =

COUNT(Sales[SalesOrderLineKey])

Order Count =

DISTINCTCOUNT('Sales Order'[Sales Order])

Order Line Count =

COUNTROWS(Sales)

3. Create compound measures

When a measure references one or more measures, it's known as a compound measure.

Profit =

[Revenue] - [Cost]

By removing this calculated column, you've optimized the semantic model. Removing this columns results in a decreased semantic model size and shorter data refresh times.

4. Create quick measures

a feature named Quick Measures. This feature helps you to quickly perform common, powerful calculations by generating the DAX expression for you.

5. Compare calculated columns with measures

Regarding similarities between calculated columns and measures, both are:

Calculations that you can add to your semantic model. Defined by using a DAX formula. Referenced in DAX formulas by enclosing their names within square brackets.

The areas where calculated columns and measures differ include:

Purpose - Calculated columns extend a table with a new column, while measures define how to summarize model data.

Evaluation - Calculated columns are evaluated by using row context at data refresh time, while measures are evaluated by using filter context at query time. Filter context is introduced in a later module; it's an important topic to understand and master so that you can achieve complex summarizations.

Storage - Calculated columns (in Import storage mode tables) store a value for each row in the table, but a measure never stores values in the model.

Visual use - Calculated columns (like any column) can be used to filter, group, or summarize (as an implicit measure), whereas measures are designed to summarize.

6. Check your knowledge

Which statement about measures is correct?

Measures can reference other measures. It's known as a compound measure.

- Measures don't need to be added to the semantic model. The concept of an implicit measure allows visuals to summarize column values.

Which DAX function can summarize a table?

The COUNTROWS function summarizes a table by returning the number of rows.

- The SUM function can only summarize a column.

Which of the following statements describing similarity of measures and calculated columns in an Import model is true?

They can achieve summarization of model data. A calculated column can be summarized (implicit measure) and a measure always achieves summarization.

- While measures can be quickly created by using the Quick measures feature, no equivalent feature exists to create calculated columns.

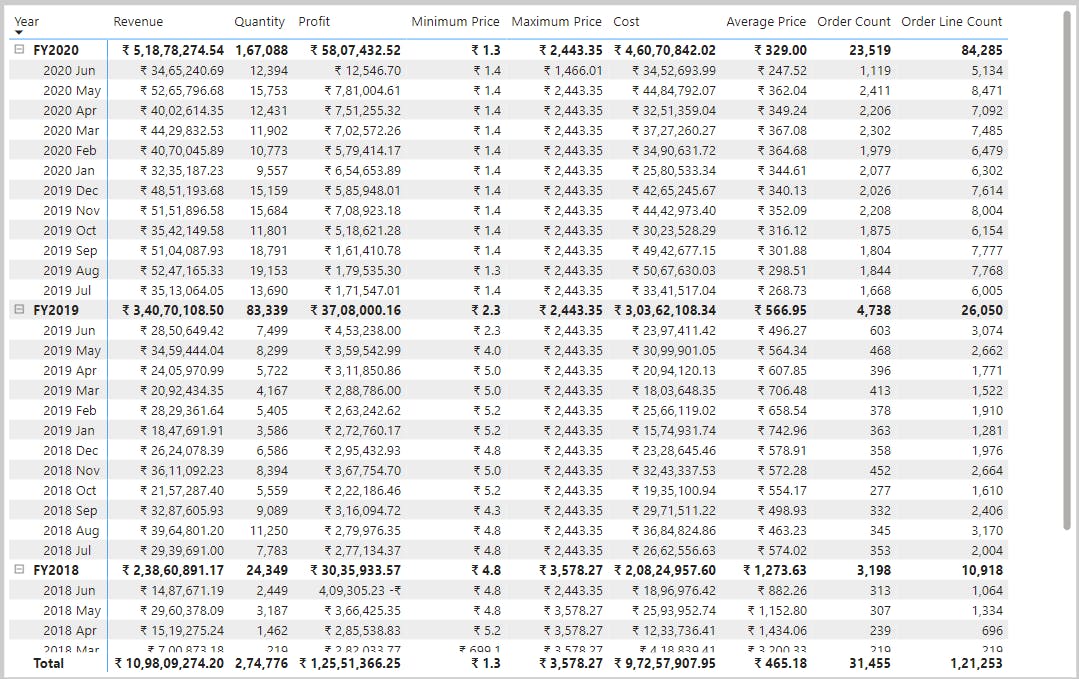

7. Exercise - Create DAX Calculations in Power BI Desktop

In this exercise, you’ll create calculated tables, calculated columns, and simple measures using Data Analysis Expressions (DAX).

In this exercise you'll learn how to:

Create calculated tables

Create calculated columns

Create measures

i. Create Calculated Tables

In the formula bar (which opens directly beneath the ribbon when creating or editing calculations), type Salesperson =, press Shift+Enter, type ‘Salesperson (Performance)’, and then press Enter.

Note: Calculated tables are defined by using a DAX formula that returns a table. It’s important to understand that calculated tables increase the size of the data model because they materialize and store values. They’re recomputed whenever formula dependencies are refreshed, as will be the case for this data model when new (future) date values are loaded into tables.

Unlike Power Query-sourced tables, calculated tables can’t be used to load data from external data sources. They can only transform data based on what has already been loaded into the data model.

ii. Create the Salesperson table

Salesperson = 'Salesperson (Performance)'

iii. Create the Date table

Date =

CALENDARAUTO(6)

The CALENDARAUTO() function returns a single-column table consisting of date values. The “auto” behavior scans all data model date columns to determine the earliest and latest date values stored in the data model. It then creates one row for each date within this range, extending the range in either direction to ensure full years of data is stored.

This function can take a single optional argument that is the last month number of a year. When omitted, the value is 12, meaning that December is the last month of the year. In this case, 6 is entered, meaning that June is the last month of the year.

iv. Create calculated columns

In this task, you’ll add more columns to enable filtering and grouping by different time periods. You’ll also create a calculated column to control the sort order of other columns.

Year =

"FY" & YEAR('Date'[Date]) + IF(MONTH('Date'[Date]) > 6, 1)

A calculated column is created by first entering the column name, followed by the equals symbol (=), followed by a DAX formula that returns a single-value result. The column name can’t already exist in the table.

The formula uses the date’s year value but adds one to the year value when the month is after June. It’s how fiscal years at Adventure Works are calculated.

Quarter =

'Date'[Year] & " Q"

& IF(

MONTH('Date'[Date]) IN {7,8,9},1,

IF(

MONTH('Date'[Date]) IN {10,11,12},2,

IF(

MONTH('Date'[Date]) IN {1,2,3},3,

IF(

MONTH('Date'[Date]) IN {4,5,6},4

)

)

)

)

simpler:

Quarter =

'Date'[Year] & " Q"

& SWITCH(

TRUE(),

MONTH('Date'[Date]) IN {7,8,9}, 1,

MONTH('Date'[Date]) IN {10,11,12}, 2,

MONTH('Date'[Date]) IN {1,2,3}, 3,

MONTH('Date'[Date]) IN {4,5,6}, 4

)

Month =

FORMAT('Date'[Date],"yyyy mmm")

By default, text values sort alphabetically, numbers sort from smallest to largest, and dates sort from earliest to latest.

MonthKey =

(YEAR('Date'[Date]) * 100) + MONTH('Date'[Date])

On the Column Tools contextual ribbon, from inside the Sort group, select Sort by Column, and then select MonthKey.

v. Complete the Date table

In this task, you’ll complete the design of the Date table by hiding a column and creating a hierarchy. You’ll then create relationships to the Sales and Targets tables.

vi. Mark the Date table

On the Table Tools contextual ribbon, from inside the Calendars group, select Mark as Date Table, and then select Mark as Date Table.

vii. Create simple measures

Visible numeric columns allow report authors at report design time to decide how column values will summarize (or not). It can result in inappropriate reporting. Some data modelers don’t like leaving things to chance, however, and choose to hide these columns and instead expose aggregation logic defined in measures. It’s the approach you’ll now take in this lab.

Avg Price =

AVERAGE(Sales[Unit Price])

Note:

It’s not possible to modify the aggregation behavior of a measure.

Median Price =

MEDIAN(Sales[Unit Price])

Min Price =

MIN(Sales[Unit Price])

Max Price =

MAX(Sales[Unit Price])

Orders =

DISTINCTCOUNT(Sales[SalesOrderNumber])

Order Lines =

COUNTROWS(Sales)

viii. Create additional measures

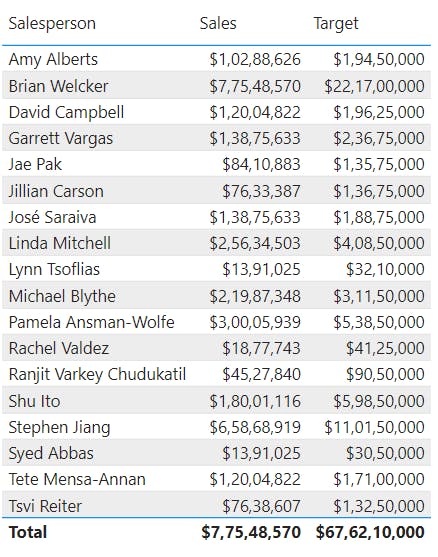

The HASONEVALUE() function tests whether a single value in the Salesperson column is filtered. When true, the expression returns the sum of target amounts (for just that salesperson). When false, BLANK is returned.

Target =

IF(

HASONEVALUE('Salesperson (Performance)'[Salesperson]),

SUM(Targets[TargetAmount])

)

8. Summary

In this module, you learned that Power BI measures are either implicit or explicit. Implicit measures are automatic behaviors that are supported by visuals, while explicit measures use DAX formulas that summarize model data.

Explicit measures are important because they allow you to create complex DAX formulas to achieve the precise calculations that your report visuals need. While you learned to create simple and compound measures in this module, in later modules you'll learn to create more powerful measures by using filter modification functions and iterator functions.

Learning objectives

By the end of this module, you'll be able to:

Determine when to use implicit and explicit measures.

Create simple measures.

Create compound measures.

Create quick measures.

Describe similarities of, and differences between, a calculated column and a measure.

VII. Module 7 Add calculated tables and columns to Power BI Desktop models

| Course | Microsoft Power BI Data Analyst |

| Module 7/17 | Add calculated tables and columns to Power BI Desktop models |

By the end of this module,

you'll be able to add calculated tables and calculated columns to your semantic model.

You'll also be able to describe row context, which is used to evaluated calculated column formulas. Because it's possible to add columns to a table using Power Query,

you'll also learn when it's best to create calculated columns instead of Power Query custom columns.

1. Introduction

You can write a Data Analysis Expressions (DAX) formula to add a calculated table to your model. The formula can duplicate or transform existing model data to produce a new table

A calculated table can't connect to external data; you must use Power Query to accomplish that task.

A calculated table formula must return a table object. The simplest formula can duplicate an existing model table.

Calculated tables have a cost: They increase the model storage size and they can prolong the data refresh time. The reason is because calculated tables recalculate when they have formula dependencies to refreshed tables.

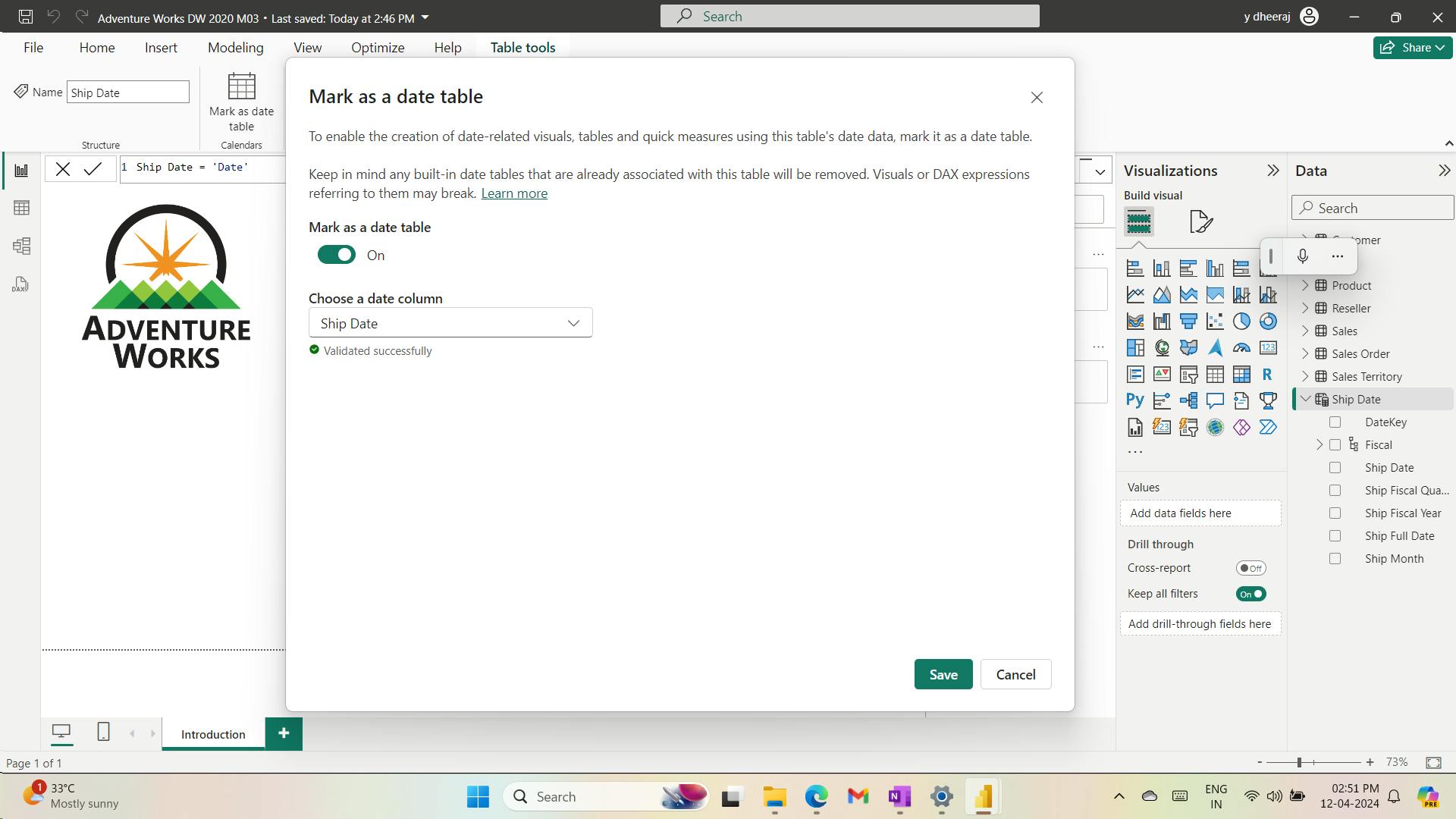

i. Duplicate a table

Data:

Download and open the Adventure Works DW 2020 M03.pbix

Ship Date = 'Date'

Mark the Ship Date table as a date table by using the Ship Date column.

Calculated tables are useful to work in scenarios when multiple relationships between two tables exist, as previously described.

They can also be used to add a date table to your model. Date tables are required to apply special time filters known as time intelligence.

ii. Create a date table

Due Date = CALENDARAUTO(6)

When the table rows and distinct values are the same, it means that the column contains unique values. That factor is important for two reasons: It satisfies the requirements to mark a date table, and it allows this column to be used in a model relationship as the one-side.

2. Create calculated columns

Due Fiscal Year =

"FY"

& YEAR('Due Date'[Due Date])

+ IF(

MONTH('Due Date'[Due Date]) > 6,

1

)

The calculated column definition adds the Due Fiscal Year column to the Due Date table.

The following steps describe how Microsoft Power BI evaluates the calculated column formula:

The addition operator (+) is evaluated before the text concatenation operator (&).

The

YEARDAX function returns the whole number value of the due date year.The

IFDAX function returns the value when the due date month number is 7-12 (July to December); otherwise, it returns BLANK. (For example, because the Adventure Works financial year is July-June, the last six months of the calendar year will use the next calendar year as their financial year.)The year value is added to the value that is returned by the

IFfunction, which is the value one or BLANK. If the value is BLANK, it's implicitly converted to zero (0) to allow the addition to produce the fiscal year value.The literal text value

"FY"concatenated with the fiscal year value, which is implicitly converted to text.

Due Fiscal Quarter =

'Due Date'[Due Fiscal Year] & " Q"

& SWITCH(

TRUE(),

MONTH('Due Date'[Due Date]) IN {1, 2, 3}, 3,

MONTH('Due Date'[Due Date]) IN {4, 5, 6}, 4,

MONTH('Due Date'[Due Date]) IN {7, 8, 9}, 1,

MONTH('Due Date'[Due Date]) IN {10, 11, 12}, 2

)

The calculated column definition adds the Due Fiscal Quarter column to the Due Date table. The IF function returns the quarter number (Quarter 1 is July-September), and the result is concatenated to the Due Fiscal Year column value and the literal text Q.

Due Month =

FORMAT('Due Date'[Due Date], "yyyy mmm")

The calculated column definition adds the Due Month column to the Due Date table. The FORMATDAX function converts the Due Date column value to text by using a format string. In this case, the format string produces a label that describes the year and abbreviated month name.

Due Full Date =

FORMAT('Due Date'[Due Date], "yyyy mmm, dd")

MonthKey =

(YEAR('Due Date'[Due Date]) * 100) + MONTH('Due Date'[Due Date])

The MonthKey calculated column multiplies the due date year by the value 100 and then adds the month number of the due date. It produces a numeric value that can be used to sort the Due Month text values in chronological order.

3. Learn about row context

The formula for a calculated column is evaluated for each table row. Furthermore, it's evaluated within row context, which means the current row.

However, row context doesn't extend beyond the table. If your formula needs to reference columns in other tables, you have two options:

If the tables are related, directly or indirectly, you can use the

RELATEDorRELATEDTABLEDAX function. TheRELATEDfunction retrieves the value at the one-side of the relationship, while theRELATEDretrieves values on the many-side. TheRELATEDTABLEfunction returns a table object.When the tables aren't related, you can use the

LOOKUPVALUEDAX function.

Sales table:

Discount Amount =

(

Sales[Order Quantity]

* RELATED('Product'[List Price])

) - Sales[Sales Amount]

The calculated column definition adds the Discount Amount column to the Sales table. Power BI evaluates the calculated column formula for each row of the Sales table. The values for the Order Quantity and Sales Amount columns are retrieved within row context. However, because the List Price column belongs to the Product table, the RELATED function is required to retrieve the list price value for the sale product.

Row context is used when calculated column formulas are evaluated. It's also used when a class of functions, known as iterator functions, are used. Iterator functions provide you with flexibility to create sophisticated summarizations. Iterator functions are described in a later module.

4. Choose a technique to add a column

There are three techniques that you can use to add columns to a model table:

Add columns to a view or table (as a persisted column), and then source them in Power Query. This option only makes sense when your data source is a relational database and if you have the skills and permissions to do so. However, it's a good option because it supports ease of maintenance and allows reuse of the column logic in other models or reports.

Add custom columns (using M) to Power Query queries.

Add calculated columns (using DAX) to model tables.